Mirror of https://github.com/mudler/LocalAI.git, synced 2026-02-03 03:02:38 -05:00

Compare commits

62 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 2f5feb4841 | | |
| | 4e3c319e83 | | |
| | d0025a7483 | | |
| | db0b29be51 | | |
| | 7da07e8af9 | | |
| | 6da892758b | | |
| | 5e88930475 | | |
| | 97b02f9765 | | |
| | 7ee1b10dfb | | |
| | 3932c15823 | | |
| | 618fd1d417 | | |
| | 151a6cf4c2 | | |
| | 1766de814c | | |
| | 0b351d6da2 | | |
| | 6623ce9942 | | |
| | 1dbc190fa6 | | |
| | 46b9445fa6 | | |
| | d3d3187e51 | | |
| | 6c94f3cd67 | | |
| | 295f3030a9 | | |
| | 1ba88258a9 | | |
| | 10ddd72b58 | | |
| | 1b7990d5d9 | | |
| | 9f50b8024d | | |
| | 7b9dcb05d4 | | |
| | e37361985c | | |
| | 467e88d305 | | |
| | fe4a8fbc74 | | |
| | 2328bbaea1 | | |
| | 4cc834adcd | | |
| | 5e49ff5072 | | |
| | f98680a18a | | |
| | 2880221bb3 | | |
| | 27887c74d8 | | |
| | 6306885fe7 | | |
| | 2a11f16c0f | | |
| | 2297504fb3 | | |
| | 897ac6e4e5 | | |
| | f20c12a1c0 | | |
| | 5dea31385c | | |
| | 58f0f63926 | | |
| | ed2bf48a6d | | |
| | e6c8ebb65c | | |
| | 119733892e | | |
| | 437f563128 | | |
| | ecad2261c8 | | |
| | 182323a7fb | | |
| | 30d06f9b12 | | |
| | 6bb562272d | | |
| | 3b3164b039 | | |
| | 6f0bdbd01c | | |
| | ce2a1799ab | | |
| | d088bd3034 | | |
| | 806e4c3a63 | | |
| | 8532ce2002 | | |
| | 84946e9275 | | |
| | c9bbba4872 | | |
| | ea9a651573 | | |
| | 5abbb134d9 | | |
| | 694dd4ad9e | | |
| | 4af48e548a | | |
| | 079dc197c7 | | |

5

.github/workflows/release.yaml

vendored

@@ -60,11 +60,6 @@ jobs:

|

||||

uses: actions/checkout@v3

|

||||

with:

|

||||

submodules: true

|

||||

|

||||

- name: Dependencies

|

||||

run: |

|

||||

brew update

|

||||

brew install sdl2 ffmpeg

|

||||

- name: Build

|

||||

id: build

|

||||

env:

|

||||

|

||||

6

.github/workflows/test.yml

vendored

@@ -39,10 +39,6 @@ jobs:

|

||||

with:

|

||||

submodules: true

|

||||

|

||||

- name: Dependencies

|

||||

run: |

|

||||

brew update

|

||||

brew install sdl2 ffmpeg

|

||||

- name: Test

|

||||

run: |

|

||||

make test

|

||||

CMAKE_ARGS="-DLLAMA_F16C=OFF -DLLAMA_AVX512=OFF -DLLAMA_AVX2=OFF -DLLAMA_FMA=OFF" make test

|

||||

4

.gitignore

vendored

@@ -27,4 +27,6 @@ release/

|

||||

.idea

|

||||

|

||||

# Generated during build

|

||||

backend-assets/

|

||||

backend-assets/

|

||||

|

||||

/ggml-metal.metal

|

||||

61

Dockerfile

@@ -1,24 +1,15 @@

|

||||

ARG GO_VERSION=1.20

|

||||

ARG GO_VERSION=1.20-bullseye

|

||||

|

||||

FROM golang:$GO_VERSION as builder

|

||||

FROM golang:$GO_VERSION as requirements

|

||||

|

||||

ARG BUILD_TYPE=

|

||||

ARG GO_TAGS=stablediffusion

|

||||

ARG BUILD_TYPE

|

||||

ARG CUDA_MAJOR_VERSION=11

|

||||

ARG CUDA_MINOR_VERSION=7

|

||||

|

||||

ENV BUILD_TYPE=${BUILD_TYPE}

|

||||

ENV GO_TAGS=${GO_TAGS}

|

||||

ENV NVIDIA_DRIVER_CAPABILITIES=compute,utility

|

||||

ENV NVIDIA_REQUIRE_CUDA="cuda>=${CUDA_MAJOR_VERSION}.0"

|

||||

ENV NVIDIA_VISIBLE_DEVICES=all

|

||||

ENV HEALTHCHECK_ENDPOINT=http://localhost:8080/readyz

|

||||

ENV REBUILD=true

|

||||

|

||||

WORKDIR /build

|

||||

|

||||

RUN apt-get update && \

|

||||

apt-get install -y ca-certificates cmake curl

|

||||

apt-get install -y ca-certificates cmake curl patch

|

||||

|

||||

# CuBLAS requirements

|

||||

RUN if [ "${BUILD_TYPE}" = "cublas" ]; then \

|

||||

@@ -39,55 +30,33 @@ RUN apt-get install -y libopenblas-dev

|

||||

RUN apt-get install -y libopencv-dev && \

|

||||

ln -s /usr/include/opencv4/opencv2 /usr/include/opencv2

|

||||

|

||||

COPY . .

|

||||

RUN make build

|

||||

FROM requirements as builder

|

||||

|

||||

FROM golang:$GO_VERSION

|

||||

|

||||

ARG BUILD_TYPE=

|

||||

ARG GO_TAGS=stablediffusion

|

||||

ARG CUDA_MAJOR_VERSION=11

|

||||

ARG CUDA_MINOR_VERSION=7

|

||||

ARG FFMPEG=

|

||||

|

||||

ENV BUILD_TYPE=${BUILD_TYPE}

|

||||

ENV GO_TAGS=${GO_TAGS}

|

||||

ENV NVIDIA_DRIVER_CAPABILITIES=compute,utility

|

||||

ENV NVIDIA_REQUIRE_CUDA="cuda>=${CUDA_MAJOR_VERSION}.0"

|

||||

ENV NVIDIA_VISIBLE_DEVICES=all

|

||||

ENV HEALTHCHECK_ENDPOINT=http://localhost:8080/readyz

|

||||

|

||||

ENV REBUILD=true

|

||||

|

||||

WORKDIR /build

|

||||

|

||||

RUN apt-get update && \

|

||||

apt-get install -y ca-certificates cmake curl

|

||||

COPY . .

|

||||

RUN make build

|

||||

|

||||

# CuBLAS requirements

|

||||

RUN if [ "${BUILD_TYPE}" = "cublas" ]; then \

|

||||

apt-get install -y software-properties-common && \

|

||||

apt-add-repository contrib && \

|

||||

curl -O https://developer.download.nvidia.com/compute/cuda/repos/debian11/x86_64/cuda-keyring_1.0-1_all.deb && \

|

||||

dpkg -i cuda-keyring_1.0-1_all.deb && \

|

||||

rm -f cuda-keyring_1.0-1_all.deb && \

|

||||

apt-get update && \

|

||||

apt-get install -y cuda-nvcc-${CUDA_MAJOR_VERSION}-${CUDA_MINOR_VERSION} libcublas-dev-${CUDA_MAJOR_VERSION}-${CUDA_MINOR_VERSION} \

|

||||

; fi

|

||||

FROM requirements

|

||||

|

||||

ARG FFMPEG

|

||||

|

||||

ENV REBUILD=true

|

||||

ENV HEALTHCHECK_ENDPOINT=http://localhost:8080/readyz

|

||||

|

||||

# Add FFmpeg

|

||||

RUN if [ "${FFMPEG}" = "true" ]; then \

|

||||

apt-get install -y ffmpeg \

|

||||

; fi

|

||||

|

||||

ENV PATH /usr/local/cuda/bin:${PATH}

|

||||

|

||||

# OpenBLAS requirements

|

||||

RUN apt-get install -y libopenblas-dev

|

||||

|

||||

# Stable Diffusion requirements

|

||||

RUN apt-get install -y libopencv-dev && \

|

||||

ln -s /usr/include/opencv4/opencv2 /usr/include/opencv2

|

||||

WORKDIR /build

|

||||

|

||||

COPY . .

|

||||

RUN make prepare-sources

|

||||

@@ -98,4 +67,4 @@ HEALTHCHECK --interval=1m --timeout=10m --retries=10 \

|

||||

CMD curl -f $HEALTHCHECK_ENDPOINT || exit 1

|

||||

|

||||

EXPOSE 8080

|

||||

ENTRYPOINT [ "/build/entrypoint.sh" ]

|

||||

ENTRYPOINT [ "/build/entrypoint.sh" ]

|

||||

19

Makefile

@@ -3,14 +3,14 @@ GOTEST=$(GOCMD) test

|

||||

GOVET=$(GOCMD) vet

|

||||

BINARY_NAME=local-ai

|

||||

|

||||

GOLLAMA_VERSION?=37ef81d01ae0848575e416e48b41d112ef0d520e

|

||||

GPT4ALL_REPO?=https://github.com/go-skynet/gpt4all

|

||||

GPT4ALL_VERSION?=f7498c9

|

||||

GOGGMLTRANSFORMERS_VERSION?=bd765bb6f3b38a63f915f3725e488aad492eedd4

|

||||

GOLLAMA_VERSION?=7ad833b67070fd3ec46d838f5e38d21111013f98

|

||||

GPT4ALL_REPO?=https://github.com/nomic-ai/gpt4all

|

||||

GPT4ALL_VERSION?=2b6cc99a31a124f1f27f2dc6515b94b84d35b254

|

||||

GOGGMLTRANSFORMERS_VERSION?=661669258dd0a752f3f3607358b168bc1d928135

|

||||

RWKV_REPO?=https://github.com/donomii/go-rwkv.cpp

|

||||

RWKV_VERSION?=1e18b2490e7e32f6b00e16f6a9ec0dd3a3d09266

|

||||

RWKV_VERSION?=930a774fa0152426ed2279cb1005b3490bb0eba6

|

||||

WHISPER_CPP_VERSION?=57543c169e27312e7546d07ed0d8c6eb806ebc36

|

||||

BERT_VERSION?=0548994371f7081e45fcf8d472f3941a12f179aa

|

||||

BERT_VERSION?=6069103f54b9969c02e789d0fb12a23bd614285f

|

||||

BLOOMZ_VERSION?=1834e77b83faafe912ad4092ccf7f77937349e2f

|

||||

export BUILD_TYPE?=

|

||||

CGO_LDFLAGS?=

|

||||

@@ -70,6 +70,7 @@ gpt4all:

|

||||

# This is hackish, but needed as both go-llama and go-gpt4allj have their own version of ggml..

|

||||

@find ./gpt4all -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_gpt4all_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_gpt4all_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.m" -exec sed -i'' -e 's/ggml_/ggml_gpt4all_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_gpt4all_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.c" -exec sed -i'' -e 's/llama_/llama_gpt4all_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/llama_/llama_gpt4all_/g' {} +

|

||||

@@ -219,6 +220,9 @@ build: prepare ## Build the project

|

||||

$(info ${GREEN}I BUILD_TYPE: ${YELLOW}$(BUILD_TYPE)${RESET})

|

||||

$(info ${GREEN}I GO_TAGS: ${YELLOW}$(GO_TAGS)${RESET})

|

||||

CGO_LDFLAGS="$(CGO_LDFLAGS)" C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} $(GOCMD) build -ldflags "$(LD_FLAGS)" -tags "$(GO_TAGS)" -o $(BINARY_NAME) ./

|

||||

ifeq ($(BUILD_TYPE),metal)

|

||||

cp go-llama/build/bin/ggml-metal.metal .

|

||||

endif

|

||||

|

||||

dist: build

|

||||

mkdir -p release

|

||||

@@ -245,8 +249,9 @@ test-models/testmodel:

|

||||

test: prepare test-models/testmodel

|

||||

cp -r backend-assets api

|

||||

cp tests/models_fixtures/* test-models

|

||||

C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} TEST_DIR=$(abspath ./)/test-dir/ FIXTURES=$(abspath ./)/tests/fixtures CONFIG_FILE=$(abspath ./)/test-models/config.yaml MODELS_PATH=$(abspath ./)/test-models $(GOCMD) run github.com/onsi/ginkgo/v2/ginkgo --label-filter="!gpt4all" --flake-attempts 5 -v -r ./api ./pkg

|

||||

C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} TEST_DIR=$(abspath ./)/test-dir/ FIXTURES=$(abspath ./)/tests/fixtures CONFIG_FILE=$(abspath ./)/test-models/config.yaml MODELS_PATH=$(abspath ./)/test-models $(GOCMD) run github.com/onsi/ginkgo/v2/ginkgo --label-filter="!gpt4all && !llama" --flake-attempts 5 -v -r ./api ./pkg

|

||||

C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} TEST_DIR=$(abspath ./)/test-dir/ FIXTURES=$(abspath ./)/tests/fixtures CONFIG_FILE=$(abspath ./)/test-models/config.yaml MODELS_PATH=$(abspath ./)/test-models $(GOCMD) run github.com/onsi/ginkgo/v2/ginkgo --label-filter="gpt4all" --flake-attempts 5 -v -r ./api ./pkg

|

||||

C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} TEST_DIR=$(abspath ./)/test-dir/ FIXTURES=$(abspath ./)/tests/fixtures CONFIG_FILE=$(abspath ./)/test-models/config.yaml MODELS_PATH=$(abspath ./)/test-models $(GOCMD) run github.com/onsi/ginkgo/v2/ginkgo --label-filter="llama" --flake-attempts 5 -v -r ./api ./pkg

|

||||

|

||||

## Help:

|

||||

help: ## Show this help.

|

||||

|

||||

@@ -32,6 +32,7 @@ See the [Getting started](https://localai.io/basics/getting_started/index.html)

|

||||

|

||||

## News

|

||||

|

||||

- 🔥🔥🔥 06-06-2023: **v1.18.0**: Many updates, new features, and much more 🚀, check out the [Changelog](https://localai.io/basics/news/index.html#-06-06-2023-__v1180__-)!

|

||||

- 29-05-2023: LocalAI now has a website, [https://localai.io](https://localai.io)! check the news in the [dedicated section](https://localai.io/basics/news/index.html)!

|

||||

|

||||

For latest news, follow also on Twitter [@LocalAI_API](https://twitter.com/LocalAI_API) and [@mudler_it](https://twitter.com/mudler_it)

|

||||

|

||||

@@ -3,6 +3,7 @@ package api

|

||||

import (

|

||||

"errors"

|

||||

|

||||

"github.com/go-skynet/LocalAI/pkg/assets"

|

||||

"github.com/gofiber/fiber/v2"

|

||||

"github.com/gofiber/fiber/v2/middleware/cors"

|

||||

"github.com/gofiber/fiber/v2/middleware/logger"

|

||||

@@ -68,7 +69,9 @@ func App(opts ...AppOption) (*fiber.App, error) {

|

||||

}

|

||||

|

||||

if options.assetsDestination != "" {

|

||||

if err := PrepareBackendAssets(options.backendAssets, options.assetsDestination); err != nil {

|

||||

// Extract files from the embedded FS

|

||||

err := assets.ExtractFiles(options.backendAssets, options.assetsDestination)

|

||||

if err != nil {

|

||||

log.Warn().Msgf("Failed extracting backend assets files: %s (might be required for some backends to work properly, like gpt4all)", err)

|

||||

}

|

||||

}

|

||||

|

||||

@@ -195,6 +195,33 @@ var _ = Describe("API test", func() {

|

||||

Expect(err).ToNot(HaveOccurred())

|

||||

Expect(content["backend"]).To(Equal("bert-embeddings"))

|

||||

})

|

||||

|

||||

It("runs openllama", Label("llama"), func() {

|

||||

if runtime.GOOS != "linux" {

|

||||

Skip("test supported only on linux")

|

||||

}

|

||||

response := postModelApplyRequest("http://127.0.0.1:9090/models/apply", modelApplyRequest{

|

||||

URL: "github:go-skynet/model-gallery/openllama_3b.yaml",

|

||||

Name: "openllama_3b",

|

||||

Overrides: map[string]string{},

|

||||

})

|

||||

|

||||

Expect(response["uuid"]).ToNot(BeEmpty(), fmt.Sprint(response))

|

||||

|

||||

uuid := response["uuid"].(string)

|

||||

|

||||

Eventually(func() bool {

|

||||

response := getModelStatus("http://127.0.0.1:9090/models/jobs/" + uuid)

|

||||

fmt.Println(response)

|

||||

return response["processed"].(bool)

|

||||

}, "360s").Should(Equal(true))

|

||||

|

||||

resp, err := client.CreateCompletion(context.TODO(), openai.CompletionRequest{Model: "openllama_3b", Prompt: "Count up to five: one, two, three, four, "})

|

||||

Expect(err).ToNot(HaveOccurred())

|

||||

Expect(len(resp.Choices)).To(Equal(1))

|

||||

Expect(resp.Choices[0].Text).To(ContainSubstring("five"))

|

||||

})

|

||||

|

||||

It("runs gpt4all", Label("gpt4all"), func() {

|

||||

if runtime.GOOS != "linux" {

|

||||

Skip("test supported only on linux")

|

||||

|

||||

@@ -1,27 +0,0 @@

|

||||

package api

|

||||

|

||||

import (

|

||||

"embed"

|

||||

"os"

|

||||

"path/filepath"

|

||||

|

||||

"github.com/go-skynet/LocalAI/pkg/assets"

|

||||

"github.com/rs/zerolog/log"

|

||||

)

|

||||

|

||||

func PrepareBackendAssets(backendAssets embed.FS, dst string) error {

|

||||

|

||||

// Extract files from the embedded FS

|

||||

err := assets.ExtractFiles(backendAssets, dst)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

// Set GPT4ALL libs where we extracted the files

|

||||

// https://github.com/nomic-ai/gpt4all/commit/27e80e1d10985490c9fd4214e4bf458cfcf70896

|

||||

gpt4alldir := filepath.Join(dst, "backend-assets", "gpt4all")

|

||||

os.Setenv("GPT4ALL_IMPLEMENTATIONS_PATH", gpt4alldir)

|

||||

log.Debug().Msgf("GPT4ALL_IMPLEMENTATIONS_PATH: %s", gpt4alldir)

|

||||

|

||||

return nil

|

||||

}

|

||||

@@ -16,27 +16,34 @@ import (

|

||||

)

|

||||

|

||||

type Config struct {

|

||||

OpenAIRequest `yaml:"parameters"`

|

||||

Name string `yaml:"name"`

|

||||

StopWords []string `yaml:"stopwords"`

|

||||

Cutstrings []string `yaml:"cutstrings"`

|

||||

TrimSpace []string `yaml:"trimspace"`

|

||||

ContextSize int `yaml:"context_size"`

|

||||

F16 bool `yaml:"f16"`

|

||||

Threads int `yaml:"threads"`

|

||||

Debug bool `yaml:"debug"`

|

||||

Roles map[string]string `yaml:"roles"`

|

||||

Embeddings bool `yaml:"embeddings"`

|

||||

Backend string `yaml:"backend"`

|

||||

TemplateConfig TemplateConfig `yaml:"template"`

|

||||

MirostatETA float64 `yaml:"mirostat_eta"`

|

||||

MirostatTAU float64 `yaml:"mirostat_tau"`

|

||||

Mirostat int `yaml:"mirostat"`

|

||||

NGPULayers int `yaml:"gpu_layers"`

|

||||

ImageGenerationAssets string `yaml:"asset_dir"`

|

||||

OpenAIRequest `yaml:"parameters"`

|

||||

Name string `yaml:"name"`

|

||||

StopWords []string `yaml:"stopwords"`

|

||||

Cutstrings []string `yaml:"cutstrings"`

|

||||

TrimSpace []string `yaml:"trimspace"`

|

||||

ContextSize int `yaml:"context_size"`

|

||||

F16 bool `yaml:"f16"`

|

||||

Threads int `yaml:"threads"`

|

||||

Debug bool `yaml:"debug"`

|

||||

Roles map[string]string `yaml:"roles"`

|

||||

Embeddings bool `yaml:"embeddings"`

|

||||

Backend string `yaml:"backend"`

|

||||

TemplateConfig TemplateConfig `yaml:"template"`

|

||||

MirostatETA float64 `yaml:"mirostat_eta"`

|

||||

MirostatTAU float64 `yaml:"mirostat_tau"`

|

||||

Mirostat int `yaml:"mirostat"`

|

||||

NGPULayers int `yaml:"gpu_layers"`

|

||||

MMap bool `yaml:"mmap"`

|

||||

MMlock bool `yaml:"mmlock"`

|

||||

LowVRAM bool `yaml:"low_vram"`

|

||||

|

||||

TensorSplit string `yaml:"tensor_split"`

|

||||

MainGPU string `yaml:"main_gpu"`

|

||||

ImageGenerationAssets string `yaml:"asset_dir"`

|

||||

|

||||

PromptCachePath string `yaml:"prompt_cache_path"`

|

||||

PromptCacheAll bool `yaml:"prompt_cache_all"`

|

||||

PromptCacheRO bool `yaml:"prompt_cache_ro"`

|

||||

|

||||

PromptStrings, InputStrings []string

|

||||

InputToken [][]int

|

||||

@@ -53,6 +60,12 @@ type ConfigMerger struct {

|

||||

sync.Mutex

|

||||

}

|

||||

|

||||

func defaultConfig(modelFile string) *Config {

|

||||

return &Config{

|

||||

OpenAIRequest: defaultRequest(modelFile),

|

||||

}

|

||||

}

|

||||

|

||||

func NewConfigMerger() *ConfigMerger {

|

||||

return &ConfigMerger{

|

||||

configs: make(map[string]Config),

|

||||

@@ -224,6 +237,10 @@ func updateConfig(config *Config, input *OpenAIRequest) {

|

||||

config.MirostatTAU = input.MirostatTAU

|

||||

}

|

||||

|

||||

if input.TypicalP != 0 {

|

||||

config.TypicalP = input.TypicalP

|

||||

}

|

||||

|

||||

switch inputs := input.Input.(type) {

|

||||

case string:

|

||||

if inputs != "" {

|

||||

@@ -308,13 +325,11 @@ func readConfig(modelFile string, input *OpenAIRequest, cm *ConfigMerger, loader

|

||||

var config *Config

|

||||

cfg, exists := cm.GetConfig(modelFile)

|

||||

if !exists {

|

||||

config = &Config{

|

||||

OpenAIRequest: defaultRequest(modelFile),

|

||||

ContextSize: ctx,

|

||||

Threads: threads,

|

||||

F16: f16,

|

||||

Debug: debug,

|

||||

}

|

||||

config = defaultConfig(modelFile)

|

||||

config.ContextSize = ctx

|

||||

config.Threads = threads

|

||||

config.F16 = f16

|

||||

config.Debug = debug

|

||||

} else {

|

||||

config = &cfg

|

||||

}

|

||||

|

||||

@@ -10,10 +10,12 @@ import (

|

||||

"os"

|

||||

"strings"

|

||||

"sync"

|

||||

"time"

|

||||

|

||||

"github.com/go-skynet/LocalAI/pkg/gallery"

|

||||

"github.com/gofiber/fiber/v2"

|

||||

"github.com/google/uuid"

|

||||

"github.com/rs/zerolog/log"

|

||||

"gopkg.in/yaml.v3"

|

||||

)

|

||||

|

||||

@@ -23,9 +25,12 @@ type galleryOp struct {

|

||||

}

|

||||

|

||||

type galleryOpStatus struct {

|

||||

Error error `json:"error"`

|

||||

Processed bool `json:"processed"`

|

||||

Message string `json:"message"`

|

||||

Error error `json:"error"`

|

||||

Processed bool `json:"processed"`

|

||||

Message string `json:"message"`

|

||||

Progress float64 `json:"progress"`

|

||||

TotalFileSize string `json:"file_size"`

|

||||

DownloadedFileSize string `json:"downloaded_size"`

|

||||

}
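To see how the new `progress`, `file_size` and `downloaded_size` fields would appear to a client of the jobs endpoint, here is a minimal sketch that marshals a struct with the same JSON tags. The type name `opStatus` and the sample values are assumptions. The `Error` field is left out on purpose: a plain `error` value has no exported fields, so `encoding/json` would not render its message.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// opStatus is a hypothetical copy of the status fields from the diff,
// using the same JSON tags but omitting the Error field.
type opStatus struct {
	Processed          bool    `json:"processed"`
	Message            string  `json:"message"`
	Progress           float64 `json:"progress"`
	TotalFileSize      string  `json:"file_size"`
	DownloadedFileSize string  `json:"downloaded_size"`
}

// encodeStatus returns the JSON document a client would receive.
func encodeStatus(s opStatus) (string, error) {
	b, err := json.Marshal(s)
	if err != nil {
		return "", err
	}
	return string(b), nil
}

func main() {
	out, err := encodeStatus(opStatus{
		Message:            "processing",
		Progress:           42.5,
		TotalFileSize:      "1.0 GiB",
		DownloadedFileSize: "435 MiB",
	})
	if err != nil {
		panic(err)
	}
	fmt.Println(out)
}
```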

|

||||

|

||||

type galleryApplier struct {

|

||||

@@ -43,7 +48,7 @@ func newGalleryApplier(modelPath string) *galleryApplier {

|

||||

}

|

||||

}

|

||||

|

||||

func applyGallery(modelPath string, req ApplyGalleryModelRequest, cm *ConfigMerger) error {

|

||||

func applyGallery(modelPath string, req ApplyGalleryModelRequest, cm *ConfigMerger, downloadStatus func(string, string, string, float64)) error {

|

||||

url, err := req.DecodeURL()

|

||||

if err != nil {

|

||||

return err

|

||||

@@ -71,7 +76,7 @@ func applyGallery(modelPath string, req ApplyGalleryModelRequest, cm *ConfigMerg

|

||||

|

||||

config.Files = append(config.Files, req.AdditionalFiles...)

|

||||

|

||||

if err := gallery.Apply(modelPath, req.Name, &config, req.Overrides); err != nil {

|

||||

if err := gallery.Apply(modelPath, req.Name, &config, req.Overrides, downloadStatus); err != nil {

|

||||

return err

|

||||

}
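The change above threads a `downloadStatus` callback of type `func(string, string, string, float64)` from `applyGallery` into `gallery.Apply`. The following sketch shows the general shape of that pattern under stated assumptions: `progressFn` and `fakeDownload` are hypothetical names, and the loop only simulates a download in equal steps rather than reading real bytes.

```go
package main

import "fmt"

// progressFn has the same parameter shape as the downloadStatus callback in
// the diff: file name, amount downloaded, total size, percentage complete.
type progressFn func(fileName, current, total string, percentage float64)

// fakeDownload is a hypothetical stand-in for a download loop. It reports
// progress after each of `steps` equal chunks. A nil callback is allowed.
func fakeDownload(fileName string, steps int, report progressFn) {
	for i := 1; i <= steps; i++ {
		if report == nil {
			continue
		}
		pct := float64(i) / float64(steps) * 100
		report(fileName, fmt.Sprintf("%d", i), fmt.Sprintf("%d", steps), pct)
	}
}

func main() {
	// The caller decides what to do with progress: log it, store it in a
	// status map, or both, as the gallery applier does in the diff.
	fakeDownload("model.bin", 4, func(name, cur, total string, pct float64) {
		fmt.Printf("%s: %s/%s (%.0f%%)\n", name, cur, total, pct)
	})
}
```

Passing the callback as a parameter keeps the download code unaware of where progress ends up, which is why the same `applyGallery` can be driven by either a status-updating closure or `displayDownload`.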

|

||||

|

||||

@@ -99,23 +104,51 @@ func (g *galleryApplier) start(c context.Context, cm *ConfigMerger) {

|

||||

case <-c.Done():

|

||||

return

|

||||

case op := <-g.C:

|

||||

g.updatestatus(op.id, &galleryOpStatus{Message: "processing"})

|

||||

g.updatestatus(op.id, &galleryOpStatus{Message: "processing", Progress: 0})

|

||||

|

||||

updateError := func(e error) {

|

||||

g.updatestatus(op.id, &galleryOpStatus{Error: e, Processed: true})

|

||||

}

|

||||

|

||||

if err := applyGallery(g.modelPath, op.req, cm); err != nil {

|

||||

if err := applyGallery(g.modelPath, op.req, cm, func(fileName string, current string, total string, percentage float64) {

|

||||

g.updatestatus(op.id, &galleryOpStatus{Message: "processing", Progress: percentage, TotalFileSize: total, DownloadedFileSize: current})

|

||||

displayDownload(fileName, current, total, percentage)

|

||||

}); err != nil {

|

||||

updateError(err)

|

||||

continue

|

||||

}

|

||||

|

||||

g.updatestatus(op.id, &galleryOpStatus{Processed: true, Message: "completed"})

|

||||

g.updatestatus(op.id, &galleryOpStatus{Processed: true, Message: "completed", Progress: 100})

|

||||

}

|

||||

}

|

||||

}()

|

||||

}

|

||||

|

||||

var lastProgress time.Time = time.Now()

|

||||

var startTime time.Time = time.Now()

|

||||

|

||||

func displayDownload(fileName string, current string, total string, percentage float64) {

|

||||

currentTime := time.Now()

|

||||

|

||||

if currentTime.Sub(lastProgress) >= 5*time.Second {

|

||||

|

||||

lastProgress = currentTime

|

||||

|

||||

// calculate ETA based on percentage and elapsed time

|

||||

var eta time.Duration

|

||||

if percentage > 0 {

|

||||

elapsed := currentTime.Sub(startTime)

|

||||

eta = time.Duration(float64(elapsed)*(100/percentage) - float64(elapsed))

|

||||

}

|

||||

|

||||

if total != "" {

|

||||

log.Debug().Msgf("Downloading %s: %s/%s (%.2f%%) ETA: %s", fileName, current, total, percentage, eta)

|

||||

} else {

|

||||

log.Debug().Msgf("Downloading: %s", current)

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

func ApplyGalleryFromFile(modelPath, s string, cm *ConfigMerger) error {

|

||||

dat, err := os.ReadFile(s)

|

||||

if err != nil {

|

||||

@@ -128,13 +161,14 @@ func ApplyGalleryFromFile(modelPath, s string, cm *ConfigMerger) error {

|

||||

}

|

||||

|

||||

for _, r := range requests {

|

||||

if err := applyGallery(modelPath, r, cm); err != nil {

|

||||

if err := applyGallery(modelPath, r, cm, displayDownload); err != nil {

|

||||

return err

|

||||

}

|

||||

}

|

||||

|

||||

return nil

|

||||

}

|

||||

|

||||

func ApplyGalleryFromString(modelPath, s string, cm *ConfigMerger) error {

|

||||

var requests []ApplyGalleryModelRequest

|

||||

err := json.Unmarshal([]byte(s), &requests)

|

||||

@@ -143,7 +177,7 @@ func ApplyGalleryFromString(modelPath, s string, cm *ConfigMerger) error {

|

||||

}

|

||||

|

||||

for _, r := range requests {

|

||||

if err := applyGallery(modelPath, r, cm); err != nil {

|

||||

if err := applyGallery(modelPath, r, cm, displayDownload); err != nil {

|

||||

return err

|

||||

}

|

||||

}

|

||||

|

||||

@@ -4,8 +4,8 @@ import (

|

||||

"bufio"

|

||||

"bytes"

|

||||

"encoding/base64"

|

||||

"errors"

|

||||

"encoding/json"

|

||||

"errors"

|

||||

"fmt"

|

||||

"io"

|

||||

"io/ioutil"

|

||||

@@ -125,11 +125,16 @@ type OpenAIRequest struct {

|

||||

MirostatTAU float64 `json:"mirostat_tau" yaml:"mirostat_tau"`

|

||||

Mirostat int `json:"mirostat" yaml:"mirostat"`

|

||||

|

||||

FrequencyPenalty float64 `json:"frequency_penalty" yaml:"frequency_penalty"`

|

||||

TFZ float64 `json:"tfz" yaml:"tfz"`

|

||||

|

||||

Seed int `json:"seed" yaml:"seed"`

|

||||

|

||||

// Image (not supported by OpenAI)

|

||||

Mode int `json:"mode"`

|

||||

Step int `json:"step"`

|

||||

|

||||

TypicalP float64 `json:"typical_p" yaml:"typical_p"`

|

||||

}

|

||||

|

||||

func defaultRequest(modelFile string) OpenAIRequest {

|

||||

@@ -145,7 +150,7 @@ func defaultRequest(modelFile string) OpenAIRequest {

|

||||

// https://platform.openai.com/docs/api-reference/completions

|

||||

func completionEndpoint(cm *ConfigMerger, o *Option) func(c *fiber.Ctx) error {

|

||||

process := func(s string, req *OpenAIRequest, config *Config, loader *model.ModelLoader, responses chan OpenAIResponse) {

|

||||

ComputeChoices(s, req, config, loader, func(s string, c *[]Choice) {}, func(s string) bool {

|

||||

ComputeChoices(s, req, config, o, loader, func(s string, c *[]Choice) {}, func(s string) bool {

|

||||

resp := OpenAIResponse{

|

||||

Model: req.Model, // we have to return what the user sent here, due to OpenAI spec.

|

||||

Choices: []Choice{{Text: s}},

|

||||

@@ -191,7 +196,7 @@ func completionEndpoint(cm *ConfigMerger, o *Option) func(c *fiber.Ctx) error {

|

||||

}

|

||||

|

||||

if input.Stream {

|

||||

if (len(config.PromptStrings) > 1) {

|

||||

if len(config.PromptStrings) > 1 {

|

||||

return errors.New("cannot handle more than 1 `PromptStrings` when `Stream`ing")

|

||||

}

|

||||

|

||||

@@ -246,7 +251,7 @@ func completionEndpoint(cm *ConfigMerger, o *Option) func(c *fiber.Ctx) error {

|

||||

log.Debug().Msgf("Template found, input modified to: %s", i)

|

||||

}

|

||||

|

||||

r, err := ComputeChoices(i, input, config, o.loader, func(s string, c *[]Choice) {

|

||||

r, err := ComputeChoices(i, input, config, o, o.loader, func(s string, c *[]Choice) {

|

||||

*c = append(*c, Choice{Text: s})

|

||||

}, nil)

|

||||

if err != nil {

|

||||

@@ -288,7 +293,7 @@ func embeddingsEndpoint(cm *ConfigMerger, o *Option) func(c *fiber.Ctx) error {

|

||||

|

||||

for i, s := range config.InputToken {

|

||||

// get the model function to call for the result

|

||||

embedFn, err := ModelEmbedding("", s, o.loader, *config)

|

||||

embedFn, err := ModelEmbedding("", s, o.loader, *config, o)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

@@ -302,7 +307,7 @@ func embeddingsEndpoint(cm *ConfigMerger, o *Option) func(c *fiber.Ctx) error {

|

||||

|

||||

for i, s := range config.InputStrings {

|

||||

// get the model function to call for the result

|

||||

embedFn, err := ModelEmbedding(s, []int{}, o.loader, *config)

|

||||

embedFn, err := ModelEmbedding(s, []int{}, o.loader, *config, o)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

@@ -338,7 +343,7 @@ func chatEndpoint(cm *ConfigMerger, o *Option) func(c *fiber.Ctx) error {

|

||||

}

|

||||

responses <- initialMessage

|

||||

|

||||

ComputeChoices(s, req, config, loader, func(s string, c *[]Choice) {}, func(s string) bool {

|

||||

ComputeChoices(s, req, config, o, loader, func(s string, c *[]Choice) {}, func(s string) bool {

|

||||

resp := OpenAIResponse{

|

||||

Model: req.Model, // we have to return what the user sent here, due to OpenAI spec.

|

||||

Choices: []Choice{{Delta: &Message{Content: s}}},

|

||||

@@ -436,7 +441,7 @@ func chatEndpoint(cm *ConfigMerger, o *Option) func(c *fiber.Ctx) error {

|

||||

return nil

|

||||

}

|

||||

|

||||

result, err := ComputeChoices(predInput, input, config, o.loader, func(s string, c *[]Choice) {

|

||||

result, err := ComputeChoices(predInput, input, config, o, o.loader, func(s string, c *[]Choice) {

|

||||

*c = append(*c, Choice{Message: &Message{Role: "assistant", Content: s}})

|

||||

}, nil)

|

||||

if err != nil {

|

||||

@@ -488,7 +493,7 @@ func editEndpoint(cm *ConfigMerger, o *Option) func(c *fiber.Ctx) error {

|

||||

log.Debug().Msgf("Template found, input modified to: %s", i)

|

||||

}

|

||||

|

||||

r, err := ComputeChoices(i, input, config, o.loader, func(s string, c *[]Choice) {

|

||||

r, err := ComputeChoices(i, input, config, o, o.loader, func(s string, c *[]Choice) {

|

||||

*c = append(*c, Choice{Text: s})

|

||||

}, nil)

|

||||

if err != nil {

|

||||

@@ -613,7 +618,7 @@ func imageEndpoint(cm *ConfigMerger, o *Option) func(c *fiber.Ctx) error {

|

||||

|

||||

baseURL := c.BaseURL()

|

||||

|

||||

fn, err := ImageGeneration(height, width, mode, step, input.Seed, positive_prompt, negative_prompt, output, o.loader, *config)

|

||||

fn, err := ImageGeneration(height, width, mode, step, input.Seed, positive_prompt, negative_prompt, output, o.loader, *config, o)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

@@ -694,7 +699,7 @@ func transcriptEndpoint(cm *ConfigMerger, o *Option) func(c *fiber.Ctx) error {

|

||||

|

||||

log.Debug().Msgf("Audio file copied to: %+v", dst)

|

||||

|

||||

whisperModel, err := o.loader.BackendLoader(model.WhisperBackend, config.Model, []llama.ModelOption{}, uint32(config.Threads))

|

||||

whisperModel, err := o.loader.BackendLoader(model.WhisperBackend, config.Model, []llama.ModelOption{}, uint32(config.Threads), o.assetsDestination)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

@@ -39,14 +39,27 @@ func defaultLLamaOpts(c Config) []llama.ModelOption {

|

||||

llamaOpts = append(llamaOpts, llama.SetGPULayers(c.NGPULayers))

|

||||

}

|

||||

|

||||

llamaOpts = append(llamaOpts, llama.SetMMap(c.MMap))

|

||||

llamaOpts = append(llamaOpts, llama.SetMainGPU(c.MainGPU))

|

||||

llamaOpts = append(llamaOpts, llama.SetTensorSplit(c.TensorSplit))

|

||||

if c.Batch != 0 {

|

||||

llamaOpts = append(llamaOpts, llama.SetNBatch(c.Batch))

|

||||

} else {

|

||||

llamaOpts = append(llamaOpts, llama.SetNBatch(512))

|

||||

}

|

||||

|

||||

if c.LowVRAM {

|

||||

llamaOpts = append(llamaOpts, llama.EnabelLowVRAM)

|

||||

}

|

||||

|

||||

return llamaOpts

|

||||

}

|

||||

|

||||

func ImageGeneration(height, width, mode, step, seed int, positive_prompt, negative_prompt, dst string, loader *model.ModelLoader, c Config) (func() error, error) {

|

||||

func ImageGeneration(height, width, mode, step, seed int, positive_prompt, negative_prompt, dst string, loader *model.ModelLoader, c Config, o *Option) (func() error, error) {

|

||||

if c.Backend != model.StableDiffusionBackend {

|

||||

return nil, fmt.Errorf("endpoint only working with stablediffusion models")

|

||||

}

|

||||

inferenceModel, err := loader.BackendLoader(c.Backend, c.ImageGenerationAssets, []llama.ModelOption{}, uint32(c.Threads))

|

||||

inferenceModel, err := loader.BackendLoader(c.Backend, c.ImageGenerationAssets, []llama.ModelOption{}, uint32(c.Threads), o.assetsDestination)

|

||||

if err != nil {

|

||||

return nil, err

|

||||

}

|

||||

@@ -81,7 +94,7 @@ func ImageGeneration(height, width, mode, step, seed int, positive_prompt, negat

|

||||

}, nil

|

||||

}

|

||||

|

||||

func ModelEmbedding(s string, tokens []int, loader *model.ModelLoader, c Config) (func() ([]float32, error), error) {

|

||||

func ModelEmbedding(s string, tokens []int, loader *model.ModelLoader, c Config, o *Option) (func() ([]float32, error), error) {

|

||||

if !c.Embeddings {

|

||||

return nil, fmt.Errorf("endpoint disabled for this model by API configuration")

|

||||

}

|

||||

@@ -93,9 +106,9 @@ func ModelEmbedding(s string, tokens []int, loader *model.ModelLoader, c Config)

|

||||

var inferenceModel interface{}

|

||||

var err error

|

||||

if c.Backend == "" {

|

||||

inferenceModel, err = loader.GreedyLoader(modelFile, llamaOpts, uint32(c.Threads))

|

||||

inferenceModel, err = loader.GreedyLoader(modelFile, llamaOpts, uint32(c.Threads), o.assetsDestination)

|

||||

} else {

|

||||

inferenceModel, err = loader.BackendLoader(c.Backend, modelFile, llamaOpts, uint32(c.Threads))

|

||||

inferenceModel, err = loader.BackendLoader(c.Backend, modelFile, llamaOpts, uint32(c.Threads), o.assetsDestination)

|

||||

}

|

||||

if err != nil {

|

||||

return nil, err

|

||||

@@ -168,6 +181,10 @@ func buildLLamaPredictOptions(c Config, modelPath string) []llama.PredictOption

|

||||

predictOptions = append(predictOptions, llama.EnablePromptCacheAll)

|

||||

}

|

||||

|

||||

if c.PromptCacheRO {

|

||||

predictOptions = append(predictOptions, llama.EnablePromptCacheRO)

|

||||

}

|

||||

|

||||

if c.PromptCachePath != "" {

|

||||

// Create parent directory

|

||||

p := filepath.Join(modelPath, c.PromptCachePath)

|

||||

@@ -217,10 +234,20 @@ func buildLLamaPredictOptions(c Config, modelPath string) []llama.PredictOption

|

||||

predictOptions = append(predictOptions, llama.SetSeed(c.Seed))

|

||||

}

|

||||

|

||||

//predictOptions = append(predictOptions, llama.SetLogitBias(c.Seed))

|

||||

|

||||

predictOptions = append(predictOptions, llama.SetFrequencyPenalty(c.FrequencyPenalty))

|

||||

predictOptions = append(predictOptions, llama.SetMlock(c.MMlock))

|

||||

predictOptions = append(predictOptions, llama.SetMemoryMap(c.MMap))

|

||||

predictOptions = append(predictOptions, llama.SetPredictionMainGPU(c.MainGPU))

|

||||

predictOptions = append(predictOptions, llama.SetPredictionTensorSplit(c.TensorSplit))

|

||||

predictOptions = append(predictOptions, llama.SetTailFreeSamplingZ(c.TFZ))

|

||||

predictOptions = append(predictOptions, llama.SetTypicalP(c.TypicalP))

|

||||

|

||||

return predictOptions

|

||||

}

|

||||

|

||||

func ModelInference(s string, loader *model.ModelLoader, c Config, tokenCallback func(string) bool) (func() (string, error), error) {

|

||||

func ModelInference(s string, loader *model.ModelLoader, c Config, o *Option, tokenCallback func(string) bool) (func() (string, error), error) {

|

||||

supportStreams := false

|

||||

modelFile := c.Model

|

||||

|

||||

@@ -229,9 +256,9 @@ func ModelInference(s string, loader *model.ModelLoader, c Config, tokenCallback

|

||||

var inferenceModel interface{}

|

||||

var err error

|

||||

if c.Backend == "" {

|

||||

inferenceModel, err = loader.GreedyLoader(modelFile, llamaOpts, uint32(c.Threads))

|

||||

inferenceModel, err = loader.GreedyLoader(modelFile, llamaOpts, uint32(c.Threads), o.assetsDestination)

|

||||

} else {

|

||||

inferenceModel, err = loader.BackendLoader(c.Backend, modelFile, llamaOpts, uint32(c.Threads))

|

||||

inferenceModel, err = loader.BackendLoader(c.Backend, modelFile, llamaOpts, uint32(c.Threads), o.assetsDestination)

|

||||

}

|

||||

if err != nil {

|

||||

return nil, err

|

||||

@@ -559,7 +586,7 @@ func ModelInference(s string, loader *model.ModelLoader, c Config, tokenCallback

|

||||

}, nil

|

||||

}

|

||||

|

||||

func ComputeChoices(predInput string, input *OpenAIRequest, config *Config, loader *model.ModelLoader, cb func(string, *[]Choice), tokenCallback func(string) bool) ([]Choice, error) {

|

||||

func ComputeChoices(predInput string, input *OpenAIRequest, config *Config, o *Option, loader *model.ModelLoader, cb func(string, *[]Choice), tokenCallback func(string) bool) ([]Choice, error) {

|

||||

result := []Choice{}

|

||||

|

||||

n := input.N

|

||||

@@ -569,7 +596,7 @@ func ComputeChoices(predInput string, input *OpenAIRequest, config *Config, load

|

||||

}

|

||||

|

||||

// get the model function to call for the result

|

||||

predFunc, err := ModelInference(predInput, loader, *config, tokenCallback)

|

||||

predFunc, err := ModelInference(predInput, loader, *config, o, tokenCallback)

|

||||

if err != nil {

|

||||

return result, err

|

||||

}

|

||||

|

||||

@@ -24,6 +24,14 @@ This integration shows how to use LocalAI with [mckaywrigley/chatbot-ui](https:/

|

||||

|

||||

There is also a separate example to show how to manually set up a model: [example](https://github.com/go-skynet/LocalAI/tree/master/examples/chatbot-ui-manual/)

|

||||

|

||||

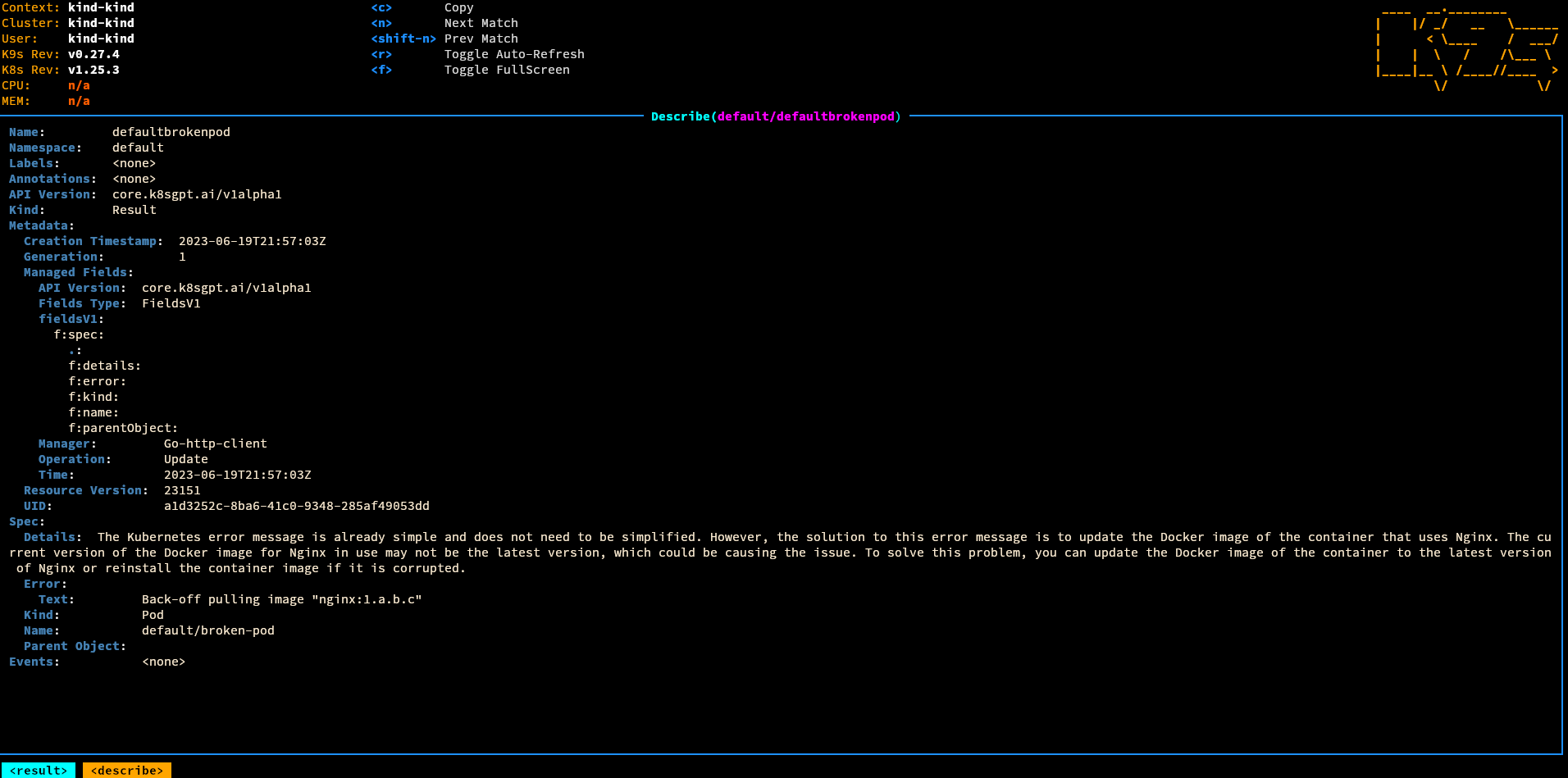

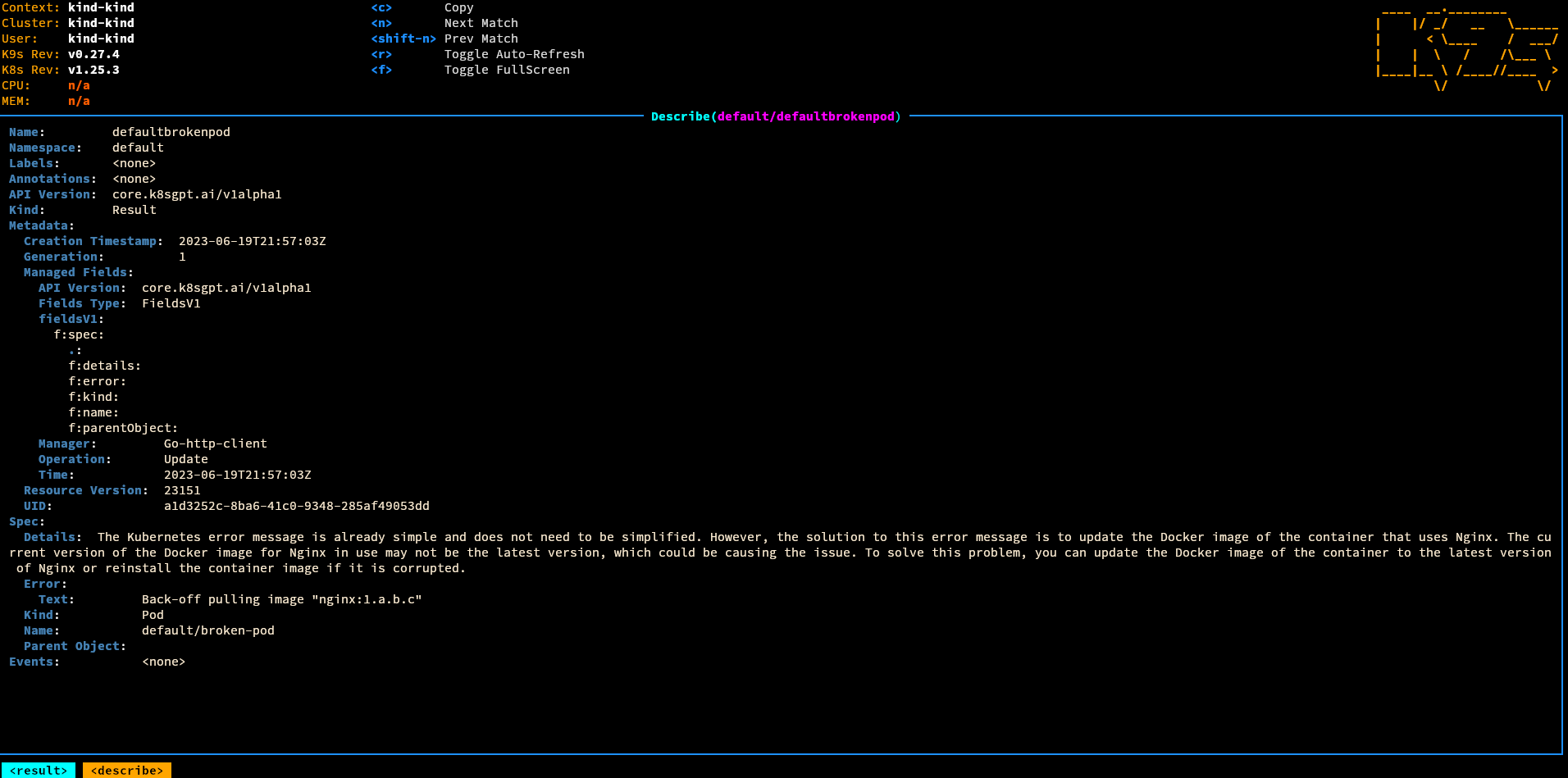

### K8sGPT

|

||||

|

||||

_by [@mudler](https://github.com/mudler)_

|

||||

|

||||

This example shows how to use LocalAI inside Kubernetes with [k8sgpt](https://k8sgpt.ai).

|

||||

|

||||

|

||||

|

||||

### Flowise

|

||||

|

||||

_by [@mudler](https://github.com/mudler)_

|

||||

@@ -106,6 +114,16 @@ Shows how to integrate with `Langchain` and `Chroma` to enable question answerin

|

||||

|

||||

[Check it out here](https://github.com/go-skynet/LocalAI/tree/master/examples/langchain-chroma/)

|

||||

|

||||

### Telegram bot

|

||||

|

||||

_by [@mudler](https://github.com/mudler)_

|

||||

|

||||

|

||||

|

||||

Use LocalAI to power a Telegram bot assistant, with image generation and audio support!

|

||||

|

||||

[Check it out here](https://github.com/go-skynet/LocalAI/tree/master/examples/telegram-bot/)

|

||||

|

||||

### Template for Runpod.io

|

||||

|

||||

_by [@fHachenberg](https://github.com/fHachenberg)_

|

||||

|

||||

@@ -24,3 +24,7 @@ docker-compose up --pull always

|

||||

|

||||

Open http://localhost:3000.

|

||||

|

||||

## Using LocalAI

|

||||

|

||||

Search for LocalAI in the integrations and use `http://api:8080/` as the URL.

|

||||

|

||||

|

||||

70

examples/k8sgpt/README.md

Normal file

@@ -0,0 +1,70 @@

|

||||

# k8sgpt example

|

||||

|

||||

This example shows how to use LocalAI with k8sgpt.

|

||||

|

||||

|

||||

|

||||

## Create the cluster locally with Kind (optional)

|

||||

|

||||

If you want to test this locally without a remote Kubernetes cluster, you can use kind.

|

||||

|

||||

Install [kind](https://kind.sigs.k8s.io/) and create a cluster:

|

||||

|

||||

```

|

||||

kind create cluster

|

||||

```

|

||||

|

||||

## Setup LocalAI

|

||||

|

||||

We will use [helm](https://helm.sh/docs/intro/install/):

|

||||

|

||||

```

|

||||

helm repo add go-skynet https://go-skynet.github.io/helm-charts/

|

||||

helm repo update

|

||||

|

||||

# Clone LocalAI

|

||||

git clone https://github.com/go-skynet/LocalAI

|

||||

|

||||

cd LocalAI/examples/k8sgpt

|

||||

|

||||

# modify values.yaml preload_models with the models you want to install.

|

||||

# CHANGE the URL to a model in huggingface.

|

||||

helm install local-ai go-skynet/local-ai --create-namespace --namespace local-ai --values values.yaml

|

||||

```

|

||||

|

||||

## Setup K8sGPT

|

||||

|

||||

```

|

||||

# Install k8sgpt

|

||||

helm repo add k8sgpt https://charts.k8sgpt.ai/

|

||||

helm repo update

|

||||

helm install release k8sgpt/k8sgpt-operator -n k8sgpt-operator-system --create-namespace

|

||||

```

|

||||

|

||||

Apply the k8sgpt-operator configuration:

|

||||

|

||||

```

|

||||

kubectl apply -f - << EOF

|

||||

apiVersion: core.k8sgpt.ai/v1alpha1

|

||||

kind: K8sGPT

|

||||

metadata:

|

||||

name: k8sgpt-local-ai

|

||||

namespace: default

|

||||

spec:

|

||||

backend: localai

|

||||

baseUrl: http://local-ai.local-ai.svc.cluster.local:8080/v1

|

||||

noCache: false

|

||||

model: gpt-3.5-turbo

|

||||

version: v0.3.0

|

||||

enableAI: true

|

||||

EOF

|

||||

```

|

||||

|

||||

## Test

|

||||

|

||||

Apply a broken pod:

|

||||

|

||||

```

|

||||

kubectl apply -f broken-pod.yaml

|

||||

```

|

||||

14

examples/k8sgpt/broken-pod.yaml

Normal file

@@ -0,0 +1,14 @@

|

||||

apiVersion: v1

|

||||

kind: Pod

|

||||

metadata:

|

||||

name: broken-pod

|

||||

spec:

|

||||

containers:

|

||||

- name: broken-pod

|

||||

image: nginx:1.a.b.c

|

||||

livenessProbe:

|

||||

httpGet:

|

||||

path: /

|

||||

port: 90

|

||||

initialDelaySeconds: 3

|

||||

periodSeconds: 3

|

||||

95

examples/k8sgpt/values.yaml

Normal file

@@ -0,0 +1,95 @@

|

||||

replicaCount: 1

|

||||

|

||||

deployment:

|

||||

# https://quay.io/repository/go-skynet/local-ai?tab=tags

|

||||

image: quay.io/go-skynet/local-ai:latest

|

||||

env:

|

||||

threads: 4

|

||||

debug: "true"

|

||||

context_size: 512

|

||||

preload_models: '[{ "url": "github:go-skynet/model-gallery/wizard.yaml", "name": "gpt-3.5-turbo", "overrides": { "parameters": { "model": "WizardLM-7B-uncensored.ggmlv3.q5_1" }},"files": [ { "uri": "https://huggingface.co//WizardLM-7B-uncensored-GGML/resolve/main/WizardLM-7B-uncensored.ggmlv3.q5_1.bin", "sha256": "d92a509d83a8ea5e08ba4c2dbaf08f29015932dc2accd627ce0665ac72c2bb2b", "filename": "WizardLM-7B-uncensored.ggmlv3.q5_1" }]}]'

|

||||

modelsPath: "/models"

|

||||

|

||||

resources:

|

||||

{}

|

||||

# We usually recommend not to specify default resources and to leave this as a conscious

|

||||

# choice for the user. This also increases chances charts run on environments with little

|

||||

# resources, such as Minikube. If you do want to specify resources, uncomment the following

|

||||

# lines, adjust them as necessary, and remove the curly braces after 'resources:'.

|

||||

# limits:

|

||||

# cpu: 100m

|

||||

# memory: 128Mi

|

||||

# requests:

|

||||

# cpu: 100m

|

||||

# memory: 128Mi

|

||||

|

||||

# Prompt templates to include

|

||||

# Note: the keys of this map will be the names of the prompt template files

|

||||

promptTemplates:

|

||||

{}

|

||||

# ggml-gpt4all-j.tmpl: |

|

||||

# The prompt below is a question to answer, a task to complete, or a conversation to respond to; decide which and write an appropriate response.

|

||||

# ### Prompt:

|

||||

# {{.Input}}

|

||||

# ### Response:

|

||||

|

||||

# Models to download at runtime

|

||||

models:

|

||||

# Whether to force download models even if they already exist

|

||||

forceDownload: false

|

||||

|

||||

# The list of URLs to download models from

|

||||

# Note: the name of the file will be the name of the loaded model

|

||||

list:

|

||||

#- url: "https://gpt4all.io/models/ggml-gpt4all-j.bin"

|

||||

# basicAuth: base64EncodedCredentials

|

||||

|

||||

# Persistent storage for models and prompt templates.

|

||||

# PVC and HostPath are mutually exclusive. If both are enabled,

|

||||

# PVC configuration takes precedence. If neither are enabled, ephemeral

|

||||

# storage is used.

|

||||

persistence:

|

||||

pvc:

|

||||

enabled: false

|

||||

size: 6Gi

|

||||

accessModes:

|

||||

- ReadWriteOnce

|

||||

|

||||

annotations: {}

|

||||

|

||||

# Optional

|

||||

storageClass: ~

|

||||

|

||||

hostPath:

|

||||

enabled: false

|

||||

path: "/models"

|

||||

|

||||

service:

|

||||

type: ClusterIP

|

||||

port: 8080

|

||||

annotations: {}

|

||||

# If using an AWS load balancer, you'll need to override the default 60s load balancer idle timeout

|

||||

# service.beta.kubernetes.io/aws-load-balancer-connection-idle-timeout: "1200"

|

||||

|

||||

ingress:

|

||||

enabled: false

|

||||

className: ""

|

||||

annotations:

|

||||

{}

|

||||

# kubernetes.io/ingress.class: nginx

|

||||

# kubernetes.io/tls-acme: "true"

|

||||

hosts:

|

||||

- host: chart-example.local

|

||||

paths:

|

||||

- path: /

|

||||

pathType: ImplementationSpecific

|

||||

tls: []

|

||||

# - secretName: chart-example-tls

|

||||

# hosts:

|

||||

# - chart-example.local

|

||||

|

||||

nodeSelector: {}

|

||||

|

||||

tolerations: []

|

||||

|

||||

affinity: {}

|

||||

@@ -12,15 +12,8 @@ git clone https://github.com/go-skynet/LocalAI

|

||||

|

||||

cd LocalAI/examples/langchain-python

|

||||

|

||||

# (optional) Checkout a specific LocalAI tag

|

||||

# git checkout -b build <TAG>

|

||||

|

||||

# Download gpt4all-j to models/

|

||||

wget https://gpt4all.io/models/ggml-gpt4all-j.bin -O models/ggml-gpt4all-j

|

||||

|

||||

# start with docker-compose

|

||||

docker-compose up -d --build

|

||||

|

||||

docker-compose up --pull always

|

||||

|

||||

pip install langchain

|

||||

pip install openai

|

||||

|

||||

@@ -3,6 +3,14 @@ version: '3.6'

|

||||

services:

|

||||

api:

|

||||

image: quay.io/go-skynet/local-ai:latest

|

||||

# Because LocalAI initially downloads the models defined in PRELOAD_MODELS,

|

||||

# you might need to tweak the healthcheck values here according to your network connection.

|

||||

# Here we give a timespan of 20m to download all the required files.

|

||||

healthcheck:

|

||||

test: ["CMD", "curl", "-f", "http://localhost:8080/readyz"]

|

||||

interval: 1m

|

||||

timeout: 20m

|

||||

retries: 20

|

||||

build:

|

||||

context: ../../

|

||||

dockerfile: Dockerfile

|

||||

@@ -11,6 +19,9 @@ services:

|

||||

environment:

|

||||

- DEBUG=true

|

||||

- MODELS_PATH=/models

|

||||

# You can preload different models here as well.

|

||||

# See: https://github.com/go-skynet/model-gallery

|

||||

- 'PRELOAD_MODELS=[{"url": "github:go-skynet/model-gallery/gpt4all-j.yaml", "name": "gpt-3.5-turbo"}]'

|

||||

volumes:

|

||||

- ./models:/models:cached

|

||||

command: ["/usr/bin/local-ai" ]

|

||||

command: ["/usr/bin/local-ai" ]

|

||||

@@ -1 +0,0 @@

|

||||

../chatbot-ui/models

|

||||

30

examples/telegram-bot/README.md

Normal file

@@ -0,0 +1,30 @@

|

||||

## Telegram bot

|

||||

|

||||

|

||||

|

||||

This example uses a fork of [chatgpt-telegram-bot](https://github.com/karfly/chatgpt_telegram_bot) to deploy a Telegram bot with LocalAI instead of OpenAI.

|

||||

|

||||

```bash

|

||||

# Clone LocalAI

|

||||

git clone https://github.com/go-skynet/LocalAI

|

||||

|

||||

cd LocalAI/examples/telegram-bot

|

||||

|

||||

git clone https://github.com/mudler/chatgpt_telegram_bot

|

||||

|

||||

cp -rf docker-compose.yml chatgpt_telegram_bot

|

||||

|

||||

cd chatgpt_telegram_bot

|

||||

|

||||

mv config/config.example.yml config/config.yml

|

||||

mv config/config.example.env config/config.env

|

||||

|

||||

# Edit config/config.yml to set the telegram bot token

|

||||

vim config/config.yml

|

||||

|

||||

# run the bot

|

||||

docker-compose --env-file config/config.env up --build

|

||||

```

|

||||

|

||||

Note: On first start, LocalAI is configured to download `gpt4all-j` (served in place of `gpt-3.5-turbo`) and `stablediffusion` for image generation. The download size is >6GB. If your network connection is slow, adapt the healthcheck section of the `docker-compose.yml` file accordingly (for instance, replace `20m` with `1h`).

|

||||

To configure models manually, comment out the `PRELOAD_MODELS` environment variable in the `docker-compose.yml` file and see, for instance, the `model` directory of the [chatbot-ui-manual example](https://github.com/go-skynet/LocalAI/tree/master/examples/chatbot-ui-manual).
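The `PRELOAD_MODELS` variable in this example's `docker-compose.yml` holds a JSON array in which each entry has a `url` and, optionally, a `name`. When editing that value by hand, it can help to check that it still parses. The Go sketch below does only that. The `preloadEntry` type and `parsePreloadModels` function are hypothetical, cover just the two fields visible in this example, and are not LocalAI's own types.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// preloadEntry models one element of a PRELOAD_MODELS-style array.
// Hypothetical type: only the "url" and "name" fields are covered.
type preloadEntry struct {
	URL  string `json:"url"`
	Name string `json:"name,omitempty"`
}

// parsePreloadModels decodes the JSON array and rejects entries without a url.
func parsePreloadModels(raw string) ([]preloadEntry, error) {
	var entries []preloadEntry
	if err := json.Unmarshal([]byte(raw), &entries); err != nil {
		return nil, fmt.Errorf("invalid PRELOAD_MODELS: %w", err)
	}
	for i, e := range entries {
		if e.URL == "" {
			return nil, fmt.Errorf("entry %d has no url", i)
		}
	}
	return entries, nil
}

func main() {
	raw := `[{"url": "github:go-skynet/model-gallery/gpt4all-j.yaml", "name": "gpt-3.5-turbo"}, {"url": "github:go-skynet/model-gallery/stablediffusion.yaml"}]`

	entries, err := parsePreloadModels(raw)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	for _, e := range entries {
		// An empty name means the entry does not override the model name.
		fmt.Printf("url=%s name=%q\n", e.URL, e.Name)
	}
}
```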

|

||||

38

examples/telegram-bot/docker-compose.yml

Normal file

@@ -0,0 +1,38 @@

|

||||

version: "3"

|

||||

|

||||

services:

|

||||

api:

|

||||

image: quay.io/go-skynet/local-ai:v1.18.0-ffmpeg

|

||||

# Because LocalAI initially downloads the models defined in PRELOAD_MODELS,

|

||||

# you might need to tweak the healthcheck values here according to your network connection.

|

||||

# Here we give a timespan of 20m to download all the required files.

|

||||

healthcheck:

|

||||

test: ["CMD", "curl", "-f", "http://localhost:8080/readyz"]

|

||||

interval: 1m

|

||||

timeout: 20m

|

||||

retries: 20

|

||||

ports:

|

||||

- 8080:8080

|

||||

environment:

|

||||

- DEBUG=true

|

||||

- MODELS_PATH=/models

|

||||

- IMAGE_PATH=/tmp

|

||||

# You can preload different models here as well.

|

||||

# See: https://github.com/go-skynet/model-gallery

|

||||

- 'PRELOAD_MODELS=[{"url": "github:go-skynet/model-gallery/gpt4all-j.yaml", "name": "gpt-3.5-turbo"}, {"url": "github:go-skynet/model-gallery/stablediffusion.yaml"}, {"url": "github:go-skynet/model-gallery/whisper-base.yaml", "name": "whisper-1"}]'

|

||||

volumes:

|

||||

- ./models:/models:cached

|

||||

command: ["/usr/bin/local-ai" ]

|

||||

chatgpt_telegram_bot:

|

||||

container_name: chatgpt_telegram_bot

|

||||

command: python3 bot/bot.py

|

||||

restart: always

|

||||

environment:

|

||||

- OPENAI_API_KEY=sk---anystringhere

|

||||

- OPENAI_API_BASE=http://api:8080/v1

|

||||

build:

|

||||

context: "."

|

||||

dockerfile: Dockerfile

|

||||

depends_on:

|

||||

api:

|

||||

condition: service_healthy

|

||||

33

go.mod

@@ -3,28 +3,28 @@ module github.com/go-skynet/LocalAI

|

||||

go 1.19

|

||||

|

||||

require (

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230604202420-1e18b2490e7e

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230619005719-f5a8c4539674

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230606002726-57543c169e27

|

||||

github.com/go-audio/wav v1.1.0

|

||||

github.com/go-skynet/bloomz.cpp v0.0.0-20230529155654-1834e77b83fa

|

||||

github.com/go-skynet/go-bert.cpp v0.0.0-20230531070950-0548994371f7

|

||||

github.com/go-skynet/go-ggml-transformers.cpp v0.0.0-20230604074754-6fb862c72bc0

|

||||

github.com/go-skynet/go-llama.cpp v0.0.0-20230606152241-37ef81d01ae0

|

||||

github.com/gofiber/fiber/v2 v2.46.0

|

||||

github.com/go-skynet/go-bert.cpp v0.0.0-20230607105116-6069103f54b9

|

||||

github.com/go-skynet/go-ggml-transformers.cpp v0.0.0-20230617123349-32b9223ccdb1

|

||||

github.com/go-skynet/go-llama.cpp v0.0.0-20230616223721-7ad833b67070

|

||||

github.com/gofiber/fiber/v2 v2.47.0

|

||||

github.com/google/uuid v1.3.0

|

||||

github.com/hashicorp/go-multierror v1.1.1

|

||||

github.com/imdario/mergo v0.3.16

|

||||

github.com/mudler/go-stable-diffusion v0.0.0-20230605122230-d89260f598af

|

||||

github.com/nomic-ai/gpt4all/gpt4all-bindings/golang v0.0.0-20230605194130-266f13aee9d8

|

||||

github.com/onsi/ginkgo/v2 v2.9.7

|

||||

github.com/onsi/gomega v1.27.7

|

||||

github.com/otiai10/openaigo v1.1.0

|

||||

github.com/nomic-ai/gpt4all/gpt4all-bindings/golang v0.0.0-20230619183453-2b6cc99a31a1

|

||||

github.com/onsi/ginkgo/v2 v2.11.0

|

||||

github.com/onsi/gomega v1.27.8

|

||||

github.com/otiai10/openaigo v1.2.0

|

||||

github.com/rs/zerolog v1.29.1

|

||||

github.com/sashabaranov/go-openai v1.10.0

|

||||

github.com/sashabaranov/go-openai v1.10.1

|

||||

github.com/swaggo/swag v1.16.1

|

||||

github.com/tmc/langchaingo v0.0.0-20230605114752-4afed6d7be4a

|

||||

github.com/urfave/cli/v2 v2.25.5

|

||||

github.com/valyala/fasthttp v1.47.0

|

||||

github.com/tmc/langchaingo v0.0.0-20230616220619-1b3da4433944

|

||||

github.com/urfave/cli/v2 v2.25.7

|

||||

github.com/valyala/fasthttp v1.48.0

|

||||

gopkg.in/yaml.v2 v2.4.0

|

||||

gopkg.in/yaml.v3 v3.0.1

|

||||

)

|

||||

@@ -42,7 +42,6 @@ require (

|

||||

github.com/go-openapi/jsonreference v0.19.6 // indirect

|

||||

github.com/go-openapi/spec v0.20.4 // indirect

|

||||

github.com/go-openapi/swag v0.19.15 // indirect

|

||||

github.com/go-skynet/go-gpt2.cpp v0.0.0-20230523153133-3eb3a32c0874 // indirect

|

||||

github.com/go-task/slim-sprig v0.0.0-20230315185526-52ccab3ef572 // indirect

|

||||

github.com/google/go-cmp v0.5.9 // indirect

|

||||

github.com/google/pprof v0.0.0-20210407192527-94a9f03dee38 // indirect

|

||||

@@ -51,7 +50,7 @@ require (

|

||||

github.com/klauspost/compress v1.16.3 // indirect

|

||||

github.com/mailru/easyjson v0.7.6 // indirect

|

||||

github.com/mattn/go-colorable v0.1.13 // indirect

|

||||

github.com/mattn/go-isatty v0.0.18 // indirect

|

||||

github.com/mattn/go-isatty v0.0.19 // indirect

|

||||

github.com/mattn/go-runewidth v0.0.14 // indirect

|

||||

github.com/otiai10/mint v1.5.1 // indirect

|

||||

github.com/philhofer/fwd v1.1.2 // indirect

|

||||

@@ -64,7 +63,7 @@ require (

|

||||

github.com/valyala/tcplisten v1.0.0 // indirect

|

||||

github.com/xrash/smetrics v0.0.0-20201216005158-039620a65673 // indirect

|

||||

golang.org/x/net v0.10.0 // indirect

|

||||

golang.org/x/sys v0.8.0 // indirect

|

||||

golang.org/x/sys v0.9.0 // indirect

|

||||

golang.org/x/text v0.9.0 // indirect

|

||||

golang.org/x/tools v0.9.1 // indirect

|

||||

golang.org/x/tools v0.9.3 // indirect

|

||||

)

|

||||

|

||||

185

go.sum

@@ -16,32 +16,10 @@ github.com/creack/pty v1.1.9/go.mod h1:oKZEueFk5CKHvIhNR5MUki03XCEU+Q6VDXinZuGJ3

|

||||

github.com/davecgh/go-spew v1.1.0/go.mod h1:J7Y8YcW2NihsgmVo/mv3lAwl/skON4iLHjSsI+c5H38=

|

||||

github.com/davecgh/go-spew v1.1.1 h1:vj9j/u1bqnvCEfJOwUhtlOARqs3+rkHYY13jYWTU97c=

|

||||

github.com/davecgh/go-spew v1.1.1/go.mod h1:J7Y8YcW2NihsgmVo/mv3lAwl/skON4iLHjSsI+c5H38=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230515123100-6fdd0c338e56 h1:s8/MZdicstKi5fn9D9mKGIQ/q6IWCYCk/BM68i8v51w=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230515123100-6fdd0c338e56/go.mod h1:gWy7FIWioqYmYxkaoFyBnaKApeZVrUkHhv9EV9pz4dM=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230529074347-ccb05c3e1c6e h1:YbcLoxAwS0r7otEqU/d8bArubmfEJaG7dZPp0Aa52Io=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230529074347-ccb05c3e1c6e/go.mod h1:gWy7FIWioqYmYxkaoFyBnaKApeZVrUkHhv9EV9pz4dM=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230531084548-c43cdf5fc5bf h1:upCz8WYdzMeJg0qywUaVaGndY+niuicj5j6V4pvhNS4=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230531084548-c43cdf5fc5bf/go.mod h1:gWy7FIWioqYmYxkaoFyBnaKApeZVrUkHhv9EV9pz4dM=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230601111443-3b28b09469fc h1:RCGGh/zw+K09sjCIYHUV7lFenxONml+LS02RdN+AkwI=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230601111443-3b28b09469fc/go.mod h1:gWy7FIWioqYmYxkaoFyBnaKApeZVrUkHhv9EV9pz4dM=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230604202420-1e18b2490e7e h1:Qne1BO0ltmyJcsizxZ61SV+uwuD1F8NztsfBDHOd0LI=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230604202420-1e18b2490e7e/go.mod h1:gWy7FIWioqYmYxkaoFyBnaKApeZVrUkHhv9EV9pz4dM=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230520182345-041be06d5881 h1:dafqVivljYk51VLFnnpTXJnfWDe637EobWZ1l8PyEf8=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230520182345-041be06d5881/go.mod h1:QIjZ9OktHFG7p+/m3sMvrAJKKdWrr1fZIK0rM6HZlyo=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230523110439-77eab3fbfe5e h1:4PMorQuoUGAXmIzCtnNOHaasyLokXdgd8jUWwsraFTo=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230523110439-77eab3fbfe5e/go.mod h1:QIjZ9OktHFG7p+/m3sMvrAJKKdWrr1fZIK0rM6HZlyo=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230524181101-5e2b3407ef46 h1:+STJWsBFikYC90LnR8I9gcBdysQn7Jv9Jb44+5WBi68=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230524181101-5e2b3407ef46/go.mod h1:QIjZ9OktHFG7p+/m3sMvrAJKKdWrr1fZIK0rM6HZlyo=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230527074028-9b926844e3ae h1:uzi5myq/qNX9xiKMRF/fW3HfxuEo2WcnTalwg9fe2hM=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230527074028-9b926844e3ae/go.mod h1:QIjZ9OktHFG7p+/m3sMvrAJKKdWrr1fZIK0rM6HZlyo=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230528233858-d7c936b44a80 h1:IeeVcNaQHdcG+GPg+meOPFvtonvO8p/HBzTrZGjpWZk=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230528233858-d7c936b44a80/go.mod h1:QIjZ9OktHFG7p+/m3sMvrAJKKdWrr1fZIK0rM6HZlyo=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230531071314-ce6f7470649f h1:oGTI2SlcA7oGPFsmkS1m8psq3uKNnhhJ/MZ2ZWVZDe0=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230531071314-ce6f7470649f/go.mod h1:QIjZ9OktHFG7p+/m3sMvrAJKKdWrr1fZIK0rM6HZlyo=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230601065548-3f7436e8a096 h1:TD7v8FnwWCWlOsrkpnumsbxsflyhTI3rSm2HInqqSAI=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230601065548-3f7436e8a096/go.mod h1:QIjZ9OktHFG7p+/m3sMvrAJKKdWrr1fZIK0rM6HZlyo=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230601124500-5b9e59bc07dd h1:os3FeYEIB4j5m5QlbFC3HkVcaAmLxNXz48uIfQAexm0=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230601124500-5b9e59bc07dd/go.mod h1:QIjZ9OktHFG7p+/m3sMvrAJKKdWrr1fZIK0rM6HZlyo=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230614130248-a57bca3031fb h1:ekua5AlHdmz8LaCOyX2bMp+a1cOEzReUEDFr5A1NOjg=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230614130248-a57bca3031fb/go.mod h1:gWy7FIWioqYmYxkaoFyBnaKApeZVrUkHhv9EV9pz4dM=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230619005719-f5a8c4539674 h1:G70Yf/QOCEL1v24idWnGd6rJsbqiGkJAJnMaWaolzEg=

|

||||

github.com/donomii/go-rwkv.cpp v0.0.0-20230619005719-f5a8c4539674/go.mod h1:gWy7FIWioqYmYxkaoFyBnaKApeZVrUkHhv9EV9pz4dM=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230606002726-57543c169e27 h1:boeMTUUBtnLU8JElZJHXrsUzROJar9/t6vGOFjkrhhI=

|

||||

github.com/ggerganov/whisper.cpp/bindings/go v0.0.0-20230606002726-57543c169e27/go.mod h1:QIjZ9OktHFG7p+/m3sMvrAJKKdWrr1fZIK0rM6HZlyo=

|

||||

github.com/go-audio/audio v1.0.0 h1:zS9vebldgbQqktK4H0lUqWrG8P0NxCJVqcj7ZpNnwd4=

|

||||

@@ -62,59 +40,25 @@ github.com/go-openapi/spec v0.20.4/go.mod h1:faYFR1CvsJZ0mNsmsphTMSoRrNV3TEDoAM7

|

||||

github.com/go-openapi/swag v0.19.5/go.mod h1:POnQmlKehdgb5mhVOsnJFsivZCEZ/vjK9gh66Z9tfKk=

|

||||

github.com/go-openapi/swag v0.19.15 h1:D2NRCBzS9/pEY3gP9Nl8aDqGUcPFrwG2p+CNFrLyrCM=

|

||||

github.com/go-openapi/swag v0.19.15/go.mod h1:QYRuS/SOXUCsnplDa677K7+DxSOj6IPNl/eQntq43wQ=

|

||||

github.com/go-skynet/bloomz.cpp v0.0.0-20230510223001-e9366e82abdf h1:VJfSn8hIDE+K5+h38M3iAyFXrxpRExMKRdTk33UDxsw=

|

||||

github.com/go-skynet/bloomz.cpp v0.0.0-20230510223001-e9366e82abdf/go.mod h1:wc0fJ9V04yiYTfgKvE5RUUSRQ5Kzi0Bo4I+U3nNOUuA=

|

||||

github.com/go-skynet/bloomz.cpp v0.0.0-20230529155654-1834e77b83fa h1:gxr68r/6EWroay4iI81jxqGCDbKotY4+CiwdUkBz2NQ=

|

||||

github.com/go-skynet/bloomz.cpp v0.0.0-20230529155654-1834e77b83fa/go.mod h1:wc0fJ9V04yiYTfgKvE5RUUSRQ5Kzi0Bo4I+U3nNOUuA=

|

||||

github.com/go-skynet/go-bert.cpp v0.0.0-20230516063724-cea1ed76a7f4 h1:+3KPDf4Wv1VHOkzAfZnlj9qakLSYggTpm80AswhD/FU=

|

||||

github.com/go-skynet/go-bert.cpp v0.0.0-20230516063724-cea1ed76a7f4/go.mod h1:VY0s5KoAI2jRCvQXKuDeEEe8KG7VaWifSNJSk+E1KtY=

|

||||

github.com/go-skynet/go-bert.cpp v0.0.0-20230529074307-771b4a085972 h1:eiE1CTqanNjpNWF2xp9GvNZXgKgRzNaUSyFZGMLu8Vo=

|

||||

github.com/go-skynet/go-bert.cpp v0.0.0-20230529074307-771b4a085972/go.mod h1:IQrVVZiAuWpneNrahrGu3m7VVaKLDIvQGp+Q6B8jw5g=

|

||||

github.com/go-skynet/go-bert.cpp v0.0.0-20230531070950-0548994371f7 h1:hm5rOxRf2Y8zmQTBgtDabLoprYHHQHmZ8ui8i4KQSgU=

|

||||

github.com/go-skynet/go-bert.cpp v0.0.0-20230531070950-0548994371f7/go.mod h1:55l02IF2kD+LGEH4yXzmPPygeuWiUIo8Nbh/+ZU9cb0=

|

||||

github.com/go-skynet/go-ggml-transformers.cpp v0.0.0-20230523173010-f89d7c22df6b h1:uKICsAbdRJxMPZ4RXltwOwXPRDO1/d/pdGR3gEEUV9M=

|

||||

github.com/go-skynet/go-ggml-transformers.cpp v0.0.0-20230523173010-f89d7c22df6b/go.mod h1:hjmO5UfipWl6xkPT54acOs9DDto8GPV81IvsBcvRjsA=

|

||||

github.com/go-skynet/go-ggml-transformers.cpp v0.0.0-20230524084634-c4c581f1853c h1:jXUOCh2K4OzRItTtHzdxvkylE9r1szRSleRpXCNvraY=

|