mirror of

https://github.com/mudler/LocalAI.git

synced 2026-02-03 03:02:38 -05:00

Compare commits

125 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 4413defca5 | | |
| | f359e1c6c4 | | |
| | 1bc87d582d | | |
| | a86a383357 | | |
| | 16f02c7b30 | | |
| | fe2706890c | | |
| | 85f0f8227d | | |
| | 59e3c02002 | | |
| | 032dee256f | | |
| | 6b5e2b2bf5 | | |
| | 6fc303de87 | | |
| | 6ad6e4873d | | |
| | d6d7391da8 | | |
| | 11675932ac | | |
| | f02202e1e1 | | |
| | f8ee20991c | | |
| | e6db14e2f1 | | |
| | d00886abea | | |
| | 4873d2bfa1 | | |
| | 9f426578cf | | |
| | 9d01b695a8 | | |
| | 93829ab228 | | |
| | dd234f86d5 | | |
| | 3daff6f1aa | | |
| | 89dfa0f5fc | | |
| | bc03c492a0 | | |
| | f50a4c1454 | | |
| | d13d4d95ce | | |
| | 428790ec06 | | |
| | 4f551ce414 | | |
| | 6ed7b10273 | | |
| | 02979566ee | | |
| | cbdcc839f3 | | |
| | e1c8f087f4 | | |
| | 3a90ea44a5 | | |
| | e55492475d | | |
| | 07ec2e441d | | |
| | 38d7e0b43c | | |
| | 3411bfd00d | | |
| | 7e5fe35ae4 | | |
| | 8c8cf38d4d | | |
| | 75b25297fd | | |
| | 009ee47fe2 | | |
| | ec2adc2c03 | | |
| | ad301e6ed7 | | |
| | d094381e5d | | |
| | 3ff9bbd217 | | |
| | e62ee2bc06 | | |
| | b49721cdd1 | | |
| | 64c0a7967f | | |
| | e96eadab40 | | |
| | e73283121b | | |
| | 857d13e8d6 | | |
| | 91db3d4d5c | | |
| | 961cf29217 | | |
| | c839b334eb | | |
| | 714bfcd45b | | |
| | 77ce8b953e | | |
| | 01ada95941 | | |
| | eabdc5042a | | |
| | 96267d9437 | | |
| | 9497a24127 | | |
| | fdf75c6d0e | | |
| | 6352308882 | | |
| | a8172a0f4e | | |
| | ebcd10d66f | | |
| | 885642915f | | |
| | 2e424491c0 | | |
| | aa6faef8f7 | | |
| | b3254baf60 | | |
| | 0a43d27f0e | | |
| | 3fe11fe24d | | |
| | af18fdc749 | | |
| | 32b5eddd7d | | |
| | 07c3aa1869 | | |
| | e59bad89e7 | | |
| | b971807980 | | |
| | c974dad799 | | |
| | 4eae570ef5 | | |
| | 67992a7d99 | | |
| | 0a4899f366 | | |
| | 1eb02f6c91 | | |
| | 575874e4fb | | |
| | 751b7eca62 | | |
| | 1ae7150810 | | |
| | 70caf9bf8c | | |
| | 0b226ac027 | | |
| | 220d6fd59b | | |
| | 0a00a4b58e | | |
| | 156e15a4fa | | |
| | 271d3f6673 | | |
| | fec4ab93c5 | | |
| | 38a7a7a54d | | |
| | 0db0704e2c | | |
| | 88f472e5d2 | | |
| | 92452d46da | | |
| | ac70252d70 | | |
| | f6451d2518 | | |
| | 2473f9d19b | | |
| | bc583385a9 | | |
| | 8286bfbab7 | | |
| | d129fabe3b | | |
| | 2539867247 | | |
| | 69fedb92d9 | | |
| | 54b5eadcc4 | | |
| | 16773e2a35 | | |
| | 78503c62b7 | | |
| | a330c9cee5 | | |
| | ff0867996e | | |
| | 1bf8f996d1 | | |
| | 52f4d993c1 | | |
| | d0ceebc5d7 | | |
| | 9122af3ae1 | | |
| | b8533428bc | | |
| | 677905334c | | |
| | d1d55d29a0 | | |
| | e07dba7ad6 | | |
| | 062f832510 | | |
| | d0330bb64b | | |
| | 91a23ec6ec | | |
| | 0b000dd043 | | |
| | c73ba91a66 | | |
| | dfc00f8bc1 | | |
| | a18ff9c9b3 | | |
| | d0199279ad | | |

9

.github/bump_deps.sh

vendored

Executable file


@@ -0,0 +1,9 @@
#!/bin/bash
set -xe
REPO=$1
BRANCH=$2
VAR=$3

LAST_COMMIT=$(curl -s -H "Accept: application/vnd.github.VERSION.sha" "https://api.github.com/repos/$REPO/commits/$BRANCH")

sed -i Makefile -e "s/$VAR?=.*/$VAR?=$LAST_COMMIT/"

|

||||
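The substitution that `.github/bump_deps.sh` performs can be tried locally without calling the GitHub API. The following is a minimal sketch, not the repository's script: the helper name `bump_var`, the sample Makefile contents, and the placeholder commit `0123abc` are assumptions made for illustration. It also anchors the pattern with `^` and writes through a temporary file instead of using `sed -i`, both of which differ from the original one-liner.

```shell
#!/bin/bash
set -e

# Hypothetical helper (not part of the repository): rewrite the "VAR?=value"
# line of a Makefile so that it pins a new commit.
bump_var() {
  local file=$1 var=$2 commit=$3
  # In a basic regular expression "?" is a literal character, so "VAR?=" matches
  # the Makefile's conditional assignment. Writing to a temporary file and moving
  # it into place avoids the differences between GNU and BSD "sed -i".
  sed -e "s/^${var}?=.*/${var}?=${commit}/" "$file" > "$file.tmp"
  mv "$file.tmp" "$file"
}

# Sample Makefile with made-up values, created in a scratch directory.
workdir=$(mktemp -d)
printf 'GOCMD=go\nRWKV_VERSION?=oldsha\nBERT_VERSION?=keepme\n' > "$workdir/Makefile"

bump_var "$workdir/Makefile" RWKV_VERSION 0123abc
result=$(grep '^RWKV_VERSION' "$workdir/Makefile")
echo "$result"
```

Only the line for the named variable changes; the other assignments are left untouched, which is what lets the workflow open one pull request per dependency.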

54

.github/workflows/bump_deps.yaml

vendored

Normal file


@@ -0,0 +1,54 @@
name: Bump dependencies
on:
  schedule:
    - cron: 0 20 * * *
  workflow_dispatch:
jobs:
  bump:
    strategy:
      fail-fast: false
      matrix:
        include:
          - repository: "go-skynet/go-gpt4all-j.cpp"
            variable: "GOGPT4ALLJ_VERSION"
            branch: "master"
          - repository: "go-skynet/go-llama.cpp"
            variable: "GOLLAMA_VERSION"
            branch: "master"
          - repository: "go-skynet/go-gpt2.cpp"
            variable: "GOGPT2_VERSION"
            branch: "master"
          - repository: "donomii/go-rwkv.cpp"
            variable: "RWKV_VERSION"
            branch: "main"
          - repository: "ggerganov/whisper.cpp"
            variable: "WHISPER_CPP_VERSION"
            branch: "master"
          - repository: "go-skynet/go-bert.cpp"
            variable: "BERT_VERSION"
            branch: "master"
          - repository: "go-skynet/bloomz.cpp"
            variable: "BLOOMZ_VERSION"
            branch: "main"
          - repository: "go-skynet/gpt4all"
            variable: "GPT4ALL_VERSION"
            branch: "main"
    runs-on: ubuntu-latest
    steps:
    - uses: actions/checkout@v3
    - name: Bump dependencies 🔧
      run: |
        bash .github/bump_deps.sh ${{ matrix.repository }} ${{ matrix.branch }} ${{ matrix.variable }}
    - name: Create Pull Request
      uses: peter-evans/create-pull-request@v5
      with:
        token: ${{ secrets.UPDATE_BOT_TOKEN }}
        push-to-fork: ci-forks/LocalAI
        commit-message: ':arrow_up: Update ${{ matrix.repository }}'
        title: ':arrow_up: Update ${{ matrix.repository }}'
        branch: "update/${{ matrix.variable }}"
        body: Bump of ${{ matrix.repository }} version
        signoff: true




4

.github/workflows/image.yml

vendored


@@ -54,8 +54,8 @@ jobs:

|

||||

uses: docker/login-action@v2

|

||||

with:

|

||||

registry: quay.io

|

||||

username: ${{ secrets.QUAY_USERNAME }}

|

||||

password: ${{ secrets.QUAY_PASSWORD }}

|

||||

username: ${{ secrets.LOCALAI_REGISTRY_USERNAME }}

|

||||

password: ${{ secrets.LOCALAI_REGISTRY_PASSWORD }}

|

||||

- name: Build

|

||||

if: github.event_name != 'pull_request'

|

||||

uses: docker/build-push-action@v4

|

||||

|

||||

7

.gitignore

vendored


@@ -2,6 +2,8 @@

|

||||

go-llama

|

||||

go-gpt4all-j

|

||||

go-gpt2

|

||||

go-rwkv

|

||||

whisper.cpp

|

||||

|

||||

# LocalAI build binary

|

||||

LocalAI

|

||||

@@ -11,4 +13,7 @@ local-ai

|

||||

|

||||

# Ignore models

|

||||

models/*

|

||||

test-models/

|

||||

test-models/

|

||||

|

||||

# just in case

|

||||

.DS_Store

|

||||

|

||||

12

Dockerfile


@@ -1,13 +1,9 @@

|

||||

ARG GO_VERSION=1.20

|

||||

ARG DEBIAN_VERSION=11

|

||||

ARG BUILD_TYPE=

|

||||

|

||||

FROM golang:$GO_VERSION as builder

|

||||

FROM golang:$GO_VERSION

|

||||

WORKDIR /build

|

||||

RUN apt-get update && apt-get install -y cmake

|

||||

COPY . .

|

||||

RUN make build

|

||||

|

||||

FROM debian:$DEBIAN_VERSION

|

||||

COPY --from=builder /build/local-ai /usr/bin/local-ai

|

||||

ENTRYPOINT [ "/usr/bin/local-ai" ]

|

||||

RUN make prepare-sources

|

||||

EXPOSE 8080

|

||||

ENTRYPOINT [ "/build/entrypoint.sh" ]

|

||||

|

||||

14

Dockerfile.dev

Normal file


@@ -0,0 +1,14 @@
ARG GO_VERSION=1.20
ARG DEBIAN_VERSION=11
ARG BUILD_TYPE=

FROM golang:$GO_VERSION as builder
WORKDIR /build
RUN apt-get update && apt-get install -y cmake
COPY . .
RUN make build

FROM debian:$DEBIAN_VERSION
COPY --from=builder /build/local-ai /usr/bin/local-ai
EXPOSE 8080
ENTRYPOINT [ "/usr/bin/local-ai" ]

206

Makefile


@@ -2,12 +2,17 @@ GOCMD=go

|

||||

GOTEST=$(GOCMD) test

|

||||

GOVET=$(GOCMD) vet

|

||||

BINARY_NAME=local-ai

|

||||

# renovate: datasource=github-tags depName=go-skynet/go-llama.cpp

|

||||

GOLLAMA_VERSION?=llama.cpp-25d7abb

|

||||

# renovate: datasource=git-refs packageNameTemplate=https://github.com/go-skynet/go-gpt4all-j.cpp currentValueTemplate=master depNameTemplate=go-gpt4all-j.cpp

|

||||

GOGPT4ALLJ_VERSION?=1f7bff57f66cb7062e40d0ac3abd2217815e5109

|

||||

# renovate: datasource=git-refs packageNameTemplate=https://github.com/go-skynet/go-gpt2.cpp currentValueTemplate=master depNameTemplate=go-gpt2.cpp

|

||||

GOGPT2_VERSION?=245a5bfe6708ab80dc5c733dcdbfbe3cfd2acdaa

|

||||

|

||||

GOLLAMA_VERSION?=c03e8adbc45c866e0f6d876af1887d6b01d57eb4

|

||||

GPT4ALL_REPO?=https://github.com/go-skynet/gpt4all

|

||||

GPT4ALL_VERSION?=3657f9417e17edf378c27d0a9274a1bf41caa914

|

||||

GOGPT2_VERSION?=6a10572

|

||||

RWKV_REPO?=https://github.com/donomii/go-rwkv.cpp

|

||||

RWKV_VERSION?=07166da10cb2a9e8854395a4f210464dcea76e47

|

||||

WHISPER_CPP_VERSION?=bf2449dfae35a46b2cd92ab22661ce81a48d4993

|

||||

BERT_VERSION?=ec771ec715576ac050263bb7bb74bfd616a5ba13

|

||||

BLOOMZ_VERSION?=e9366e82abdfe70565644fbfae9651976714efd1

|

||||

|

||||

|

||||

GREEN := $(shell tput -Txterm setaf 2)

|

||||

YELLOW := $(shell tput -Txterm setaf 3)

|

||||

@@ -15,8 +20,8 @@ WHITE := $(shell tput -Txterm setaf 7)

|

||||

CYAN := $(shell tput -Txterm setaf 6)

|

||||

RESET := $(shell tput -Txterm sgr0)

|

||||

|

||||

C_INCLUDE_PATH=$(shell pwd)/go-llama:$(shell pwd)/go-gpt4all-j:$(shell pwd)/go-gpt2

|

||||

LIBRARY_PATH=$(shell pwd)/go-llama:$(shell pwd)/go-gpt4all-j:$(shell pwd)/go-gpt2

|

||||

C_INCLUDE_PATH=$(shell pwd)/go-llama:$(shell pwd)/gpt4all/gpt4all-bindings/golang/:$(shell pwd)/go-gpt2:$(shell pwd)/go-rwkv:$(shell pwd)/whisper.cpp:$(shell pwd)/go-bert:$(shell pwd)/bloomz

|

||||

LIBRARY_PATH=$(shell pwd)/go-llama:$(shell pwd)/gpt4all/gpt4all-bindings/golang/:$(shell pwd)/go-gpt2:$(shell pwd)/go-rwkv:$(shell pwd)/whisper.cpp:$(shell pwd)/go-bert:$(shell pwd)/bloomz

|

||||

|

||||

# Use this if you want to set the default behavior

|

||||

ifndef BUILD_TYPE

|

||||

@@ -33,70 +38,143 @@ endif

|

||||

|

||||

all: help

|

||||

|

||||

## GPT4ALL

|

||||

gpt4all:

|

||||

git clone --recurse-submodules $(GPT4ALL_REPO) gpt4all

|

||||

cd gpt4all && git checkout -b build $(GPT4ALL_VERSION) && git submodule update --init --recursive --depth 1

|

||||

# This is hackish, but needed as both go-llama and go-gpt4allj have their own version of ggml..

|

||||

@find ./gpt4all -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_gptj_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_gptj_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_gptj_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_/gptj_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.h" -exec sed -i'' -e 's/gpt_/gptj_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.h" -exec sed -i'' -e 's/set_console_color/set_gptj_console_color/g' {} +

|

||||

@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/set_console_color/set_gptj_console_color/g' {} +

|

||||

@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/llama_/gptjllama_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.go" -exec sed -i'' -e 's/llama_/gptjllama_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.h" -exec sed -i'' -e 's/llama_/gptjllama_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.txt" -exec sed -i'' -e 's/llama_/gptjllama_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/json_/json_gptj_/g' {} +

|

||||

@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/void replace/void json_gptj_replace/g' {} +

|

||||

@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/::replace/::json_gptj_replace/g' {} +

|

||||

mv ./gpt4all/gpt4all-backend/llama.cpp/llama_util.h ./gpt4all/gpt4all-backend/llama.cpp/gptjllama_util.h

|

||||

|

||||

## BERT embeddings

|

||||

go-bert:

|

||||

git clone --recurse-submodules https://github.com/go-skynet/go-bert.cpp go-bert

|

||||

cd go-bert && git checkout -b build $(BERT_VERSION) && git submodule update --init --recursive --depth 1

|

||||

@find ./go-bert -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_bert_/g' {} +

|

||||

@find ./go-bert -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_bert_/g' {} +

|

||||

@find ./go-bert -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_bert_/g' {} +

|

||||

|

||||

## RWKV

|

||||

go-rwkv:

|

||||

git clone --recurse-submodules $(RWKV_REPO) go-rwkv

|

||||

cd go-rwkv && git checkout -b build $(RWKV_VERSION) && git submodule update --init --recursive --depth 1

|

||||

@find ./go-rwkv -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_rwkv_/g' {} +

|

||||

@find ./go-rwkv -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_rwkv_/g' {} +

|

||||

@find ./go-rwkv -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_rwkv_/g' {} +

|

||||

|

||||

go-rwkv/librwkv.a: go-rwkv

|

||||

cd go-rwkv && cd rwkv.cpp && cmake . -DRWKV_BUILD_SHARED_LIBRARY=OFF && cmake --build . && cp librwkv.a .. && cp ggml/src/libggml.a ..

|

||||

|

||||

## bloomz

|

||||

bloomz:

|

||||

git clone --recurse-submodules https://github.com/go-skynet/bloomz.cpp bloomz

|

||||

@find ./bloomz -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_bloomz_/g' {} +

|

||||

@find ./bloomz -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_bloomz_/g' {} +

|

||||

@find ./bloomz -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_bloomz_/g' {} +

|

||||

@find ./bloomz -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_/gpt_bloomz_/g' {} +

|

||||

@find ./bloomz -type f -name "*.h" -exec sed -i'' -e 's/gpt_/gpt_bloomz_/g' {} +

|

||||

|

||||

bloomz/libbloomz.a: bloomz

|

||||

cd bloomz && make libbloomz.a

|

||||

|

||||

go-bert/libgobert.a: go-bert

|

||||

$(MAKE) -C go-bert libgobert.a

|

||||

|

||||

gpt4all/gpt4all-bindings/golang/libgpt4all.a: gpt4all

|

||||

$(MAKE) -C gpt4all/gpt4all-bindings/golang/ $(GENERIC_PREFIX)libgpt4all.a

|

||||

|

||||

## CEREBRAS GPT

|

||||

go-gpt2:

|

||||

git clone --recurse-submodules https://github.com/go-skynet/go-gpt2.cpp go-gpt2

|

||||

cd go-gpt2 && git checkout -b build $(GOGPT2_VERSION) && git submodule update --init --recursive --depth 1

|

||||

# This is hackish, but needed as both go-llama and go-gpt4allj have their own version of ggml..

|

||||

@find ./go-gpt2 -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_gpt2_/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_gpt2_/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_gpt2_/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_print_usage/gpt2_print_usage/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.h" -exec sed -i'' -e 's/gpt_print_usage/gpt2_print_usage/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_params_parse/gpt2_params_parse/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.h" -exec sed -i'' -e 's/gpt_params_parse/gpt2_params_parse/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_random_prompt/gpt2_random_prompt/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.h" -exec sed -i'' -e 's/gpt_random_prompt/gpt2_random_prompt/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.cpp" -exec sed -i'' -e 's/json_/json_gpt2_/g' {} +

|

||||

|

||||

go-gpt2/libgpt2.a: go-gpt2

|

||||

$(MAKE) -C go-gpt2 $(GENERIC_PREFIX)libgpt2.a

|

||||

|

||||

whisper.cpp:

|

||||

git clone https://github.com/ggerganov/whisper.cpp.git

|

||||

cd whisper.cpp && git checkout -b build $(WHISPER_CPP_VERSION) && git submodule update --init --recursive --depth 1

|

||||

|

||||

whisper.cpp/libwhisper.a: whisper.cpp

|

||||

cd whisper.cpp && make libwhisper.a

|

||||

|

||||

go-llama:

|

||||

git clone --recurse-submodules https://github.com/go-skynet/go-llama.cpp go-llama

|

||||

cd go-llama && git checkout -b build $(GOLLAMA_VERSION) && git submodule update --init --recursive --depth 1

|

||||

|

||||

go-llama/libbinding.a: go-llama

|

||||

$(MAKE) -C go-llama $(GENERIC_PREFIX)libbinding.a

|

||||

|

||||

replace:

|

||||

$(GOCMD) mod edit -replace github.com/go-skynet/go-llama.cpp=$(shell pwd)/go-llama

|

||||

$(GOCMD) mod edit -replace github.com/nomic/gpt4all/gpt4all-bindings/golang=$(shell pwd)/gpt4all/gpt4all-bindings/golang

|

||||

$(GOCMD) mod edit -replace github.com/go-skynet/go-gpt2.cpp=$(shell pwd)/go-gpt2

|

||||

$(GOCMD) mod edit -replace github.com/donomii/go-rwkv.cpp=$(shell pwd)/go-rwkv

|

||||

$(GOCMD) mod edit -replace github.com/ggerganov/whisper.cpp=$(shell pwd)/whisper.cpp

|

||||

$(GOCMD) mod edit -replace github.com/go-skynet/go-bert.cpp=$(shell pwd)/go-bert

|

||||

$(GOCMD) mod edit -replace github.com/go-skynet/bloomz.cpp=$(shell pwd)/bloomz

|

||||

|

||||

prepare-sources: go-llama go-gpt2 gpt4all go-rwkv whisper.cpp go-bert bloomz replace

|

||||

$(GOCMD) mod download

|

||||

|

||||

## GENERIC

|

||||

rebuild: ## Rebuilds the project

|

||||

$(MAKE) -C go-llama clean

|

||||

$(MAKE) -C gpt4all/gpt4all-bindings/golang/ clean

|

||||

$(MAKE) -C go-gpt2 clean

|

||||

$(MAKE) -C go-rwkv clean

|

||||

$(MAKE) -C whisper.cpp clean

|

||||

$(MAKE) -C go-bert clean

|

||||

$(MAKE) -C bloomz clean

|

||||

$(MAKE) build

|

||||

|

||||

prepare: prepare-sources gpt4all/gpt4all-bindings/golang/libgpt4all.a go-llama/libbinding.a go-bert/libgobert.a go-gpt2/libgpt2.a go-rwkv/librwkv.a whisper.cpp/libwhisper.a bloomz/libbloomz.a ## Prepares for building

|

||||

|

||||

clean: ## Remove build related file

|

||||

rm -fr ./go-llama

|

||||

rm -rf ./gpt4all

|

||||

rm -rf ./go-gpt2

|

||||

rm -rf ./go-rwkv

|

||||

rm -rf ./go-bert

|

||||

rm -rf ./bloomz

|

||||

rm -rf $(BINARY_NAME)

|

||||

|

||||

## Build:

|

||||

|

||||

build: prepare ## Build the project

|

||||

$(info ${GREEN}I local-ai build info:${RESET})

|

||||

$(info ${GREEN}I BUILD_TYPE: ${YELLOW}$(BUILD_TYPE)${RESET})

|

||||

C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} $(GOCMD) build -o $(BINARY_NAME) ./

|

||||

C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} $(GOCMD) build -x -o $(BINARY_NAME) ./

|

||||

|

||||

generic-build: ## Build the project using generic

|

||||

BUILD_TYPE="generic" $(MAKE) build

|

||||

|

||||

## GPT4ALL-J

|

||||

go-gpt4all-j:

|

||||

git clone --recurse-submodules https://github.com/go-skynet/go-gpt4all-j.cpp go-gpt4all-j

|

||||

cd go-gpt4all-j && git checkout -b build $(GOGPT4ALLJ_VERSION)

|

||||

# This is hackish, but needed as both go-llama and go-gpt4allj have their own version of ggml..

|

||||

@find ./go-gpt4all-j -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_gptj_/g' {} +

|

||||

@find ./go-gpt4all-j -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_gptj_/g' {} +

|

||||

@find ./go-gpt4all-j -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_gptj_/g' {} +

|

||||

@find ./go-gpt4all-j -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_/gptj_/g' {} +

|

||||

@find ./go-gpt4all-j -type f -name "*.h" -exec sed -i'' -e 's/gpt_/gptj_/g' {} +

|

||||

@find ./go-gpt4all-j -type f -name "*.cpp" -exec sed -i'' -e 's/json_/json_gptj_/g' {} +

|

||||

@find ./go-gpt4all-j -type f -name "*.cpp" -exec sed -i'' -e 's/void replace/void json_gptj_replace/g' {} +

|

||||

@find ./go-gpt4all-j -type f -name "*.cpp" -exec sed -i'' -e 's/::replace/::json_gptj_replace/g' {} +

|

||||

|

||||

go-gpt4all-j/libgptj.a: go-gpt4all-j

|

||||

$(MAKE) -C go-gpt4all-j $(GENERIC_PREFIX)libgptj.a

|

||||

|

||||

# CEREBRAS GPT

|

||||

go-gpt2:

|

||||

git clone --recurse-submodules https://github.com/go-skynet/go-gpt2.cpp go-gpt2

|

||||

cd go-gpt2 && git checkout -b build $(GOGPT2_VERSION)

|

||||

# This is hackish, but needed as both go-llama and go-gpt4allj have their own version of ggml..

|

||||

@find ./go-gpt2 -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_gpt2_/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_gpt2_/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_gpt2_/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_/gpt2_/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.h" -exec sed -i'' -e 's/gpt_/gpt2_/g' {} +

|

||||

@find ./go-gpt2 -type f -name "*.cpp" -exec sed -i'' -e 's/json_/json_gpt2_/g' {} +

|

||||

|

||||

go-gpt2/libgpt2.a: go-gpt2

|

||||

$(MAKE) -C go-gpt2 $(GENERIC_PREFIX)libgpt2.a

|

||||

|

||||

|

||||

go-llama:

|

||||

git clone -b $(GOLLAMA_VERSION) --recurse-submodules https://github.com/go-skynet/go-llama.cpp go-llama

|

||||

|

||||

go-llama/libbinding.a: go-llama

|

||||

$(MAKE) -C go-llama $(GENERIC_PREFIX)libbinding.a

|

||||

|

||||

replace:

|

||||

$(GOCMD) mod edit -replace github.com/go-skynet/go-llama.cpp=$(shell pwd)/go-llama

|

||||

$(GOCMD) mod edit -replace github.com/go-skynet/go-gpt4all-j.cpp=$(shell pwd)/go-gpt4all-j

|

||||

$(GOCMD) mod edit -replace github.com/go-skynet/go-gpt2.cpp=$(shell pwd)/go-gpt2

|

||||

|

||||

prepare: go-llama/libbinding.a go-gpt4all-j/libgptj.a go-gpt2/libgpt2.a replace

|

||||

|

||||

clean: ## Remove build related file

|

||||

rm -fr ./go-llama

|

||||

rm -rf ./go-gpt4all-j

|

||||

rm -rf ./go-gpt2

|

||||

rm -rf $(BINARY_NAME)

|

||||

|

||||

## Run:

|

||||

run: prepare

|

||||

## Run

|

||||

run: prepare ## run local-ai

|

||||

C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} $(GOCMD) run ./main.go

|

||||

|

||||

test-models/testmodel:

|

||||

@@ -106,7 +184,7 @@ test-models/testmodel:

|

||||

|

||||

test: prepare test-models/testmodel

|

||||

cp tests/fixtures/* test-models

|

||||

@C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} CONFIG_FILE=$(abspath ./)/test-models/config.yaml MODELS_PATH=$(abspath ./)/test-models $(GOCMD) test -v -timeout 20m ./...

|

||||

@C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} CONFIG_FILE=$(abspath ./)/test-models/config.yaml MODELS_PATH=$(abspath ./)/test-models $(GOCMD) run github.com/onsi/ginkgo/v2/ginkgo -v -r ./api

|

||||

|

||||

## Help:

|

||||

help: ## Show this help.

|

||||

|

||||
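The Makefile targets above rename symbols in each vendored backend (for example `s/ggml_/ggml_gptj_/g`) so that several copies of `ggml` can be linked into one binary without clashing. The following is a minimal sketch of that idea on a throwaway source tree. The helper name `rename_symbols` and the sample files are assumptions for illustration, and the sketch rewrites files through a temporary copy rather than with `sed -i''` as the Makefile does.

```shell
#!/bin/bash
set -e

# Hypothetical helper (not part of the repository): prefix every "ggml_" symbol
# in the C/C++ sources and headers under a directory.
rename_symbols() {
  local dir=$1 prefix=$2 f
  find "$dir" -type f \( -name '*.c' -o -name '*.cpp' -o -name '*.h' \) -print |
  while IFS= read -r f; do
    # Rewrite via a temporary file so the sketch does not depend on "sed -i".
    sed -e "s/ggml_/ggml_${prefix}_/g" "$f" > "$f.tmp" && mv "$f.tmp" "$f"
  done
}

# Throwaway tree with one header and one source file (made-up contents).
src=$(mktemp -d)
printf 'void ggml_init(void);\n' > "$src/ggml.h"
printf 'int main(void) { ggml_init(); return 0; }\n' > "$src/main.c"

rename_symbols "$src" gptj
renamed_header=$(cat "$src/ggml.h")
renamed_main=$(cat "$src/main.c")
echo "$renamed_header"
echo "$renamed_main"
```

The rewrite is not idempotent: running it a second time on the same tree would turn `ggml_gptj_init` into `ggml_gptj_gptj_init`, which is one reason the Makefile applies it only once, right after cloning.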

479

README.md


@@ -5,22 +5,50 @@

|

||||

<br>

|

||||

</h1>

|

||||

|

||||

> :warning: This project has been renamed from `llama-cli` to `LocalAI` to reflect the fact that we are focusing on a fast drop-in OpenAI API rather than on the CLI interface. We think that there are already many projects that can be used as a CLI interface already, for instance [llama.cpp](https://github.com/ggerganov/llama.cpp) and [gpt4all](https://github.com/nomic-ai/gpt4all). If you are using `llama-cli` for CLI interactions and want to keep using it, use older versions or please open up an issue - contributions are welcome!

|

||||

|

||||

|

||||

[](https://github.com/go-skynet/LocalAI/actions/workflows/test.yml) [](https://github.com/go-skynet/LocalAI/actions/workflows/image.yml)

|

||||

|

||||

[](https://discord.gg/uJAeKSAGDy)

|

||||

|

||||

**LocalAI** is a straightforward, drop-in replacement API compatible with OpenAI for local CPU inferencing, based on [llama.cpp](https://github.com/ggerganov/llama.cpp), [gpt4all](https://github.com/nomic-ai/gpt4all) and [ggml](https://github.com/ggerganov/ggml), including support GPT4ALL-J which is Apache 2.0 Licensed and can be used for commercial purposes.

|

||||

**LocalAI** is a drop-in replacement REST API compatible with OpenAI for local CPU inferencing. It allows you to run models locally or on-prem with consumer-grade hardware, supporting multiple model families. It also supports GPT4ALL-J, which is licensed under Apache 2.0.

|

||||

|

||||

- OpenAI compatible API

|

||||

- Supports multiple-models

|

||||

- Supports multiple models

|

||||

- Once loaded the first time, it keeps models loaded in memory for faster inference

|

||||

- Support for prompt templates

|

||||

- Doesn't shell-out, but uses C bindings for a faster inference and better performance. Uses [go-llama.cpp](https://github.com/go-skynet/go-llama.cpp) and [go-gpt4all-j.cpp](https://github.com/go-skynet/go-gpt4all-j.cpp).

|

||||

- Doesn't shell-out, but uses C bindings for a faster inference and better performance.

|

||||

|

||||

Reddit post: https://www.reddit.com/r/selfhosted/comments/12w4p2f/localai_openai_compatible_api_to_run_llm_models/

|

||||

LocalAI is a community-driven project, focused on making the AI accessible to anyone. Any contribution, feedback and PR is welcome! It was initially created by [mudler](https://github.com/mudler/) at the [SpectroCloud OSS Office](https://github.com/spectrocloud).

|

||||

|

||||

LocalAI uses C++ bindings for optimizing speed. It is based on [llama.cpp](https://github.com/ggerganov/llama.cpp), [gpt4all](https://github.com/nomic-ai/gpt4all), [rwkv.cpp](https://github.com/saharNooby/rwkv.cpp), [ggml](https://github.com/ggerganov/ggml), [whisper.cpp](https://github.com/ggerganov/whisper.cpp) for audio transcriptions, and [bert.cpp](https://github.com/skeskinen/bert.cpp) for embedding.

|

||||

|

||||

See [examples on how to integrate LocalAI](https://github.com/go-skynet/LocalAI/tree/master/examples/).

|

||||

|

||||

## News

|

||||

|

||||

- 11-05-2023: __1.9.0__ released! 🔥 Important whisper updates ( https://github.com/go-skynet/LocalAI/pull/233 https://github.com/go-skynet/LocalAI/pull/229 ) and extended gpt4all model families support ( https://github.com/go-skynet/LocalAI/pull/232 ). Redpajama/dolly experimental ( https://github.com/go-skynet/LocalAI/pull/214 )

|

||||

- 10-05-2023: __1.8.0__ released! 🔥 Added support for fast and accurate embeddings with `bert.cpp` ( https://github.com/go-skynet/LocalAI/pull/222 )

|

||||

- 09-05-2023: Added experimental support for transcriptions endpoint ( https://github.com/go-skynet/LocalAI/pull/211 )

|

||||

- 08-05-2023: Support for embeddings with models using the `llama.cpp` backend ( https://github.com/go-skynet/LocalAI/pull/207 )

|

||||

- 02-05-2023: Support for `rwkv.cpp` models ( https://github.com/go-skynet/LocalAI/pull/158 ) and for `/edits` endpoint

|

||||

- 01-05-2023: Support for SSE stream of tokens in `llama.cpp` backends ( https://github.com/go-skynet/LocalAI/pull/152 )

|

||||

|

||||

Twitter: [@LocalAI_API](https://twitter.com/LocalAI_API) and [@mudler_it](https://twitter.com/mudler_it)

|

||||

|

||||

### Blogs and articles

|

||||

|

||||

- [Tutorial to use k8sgpt with LocalAI](https://medium.com/@tyler_97636/k8sgpt-localai-unlock-kubernetes-superpowers-for-free-584790de9b65) - excellent use case for LocalAI, using AI to analyse Kubernetes clusters.

|

||||

|

||||

## Contribute and help

|

||||

|

||||

To help the project you can:

|

||||

|

||||

- Upvote the [Reddit post](https://www.reddit.com/r/selfhosted/comments/12w4p2f/localai_openai_compatible_api_to_run_llm_models/) about LocalAI.

|

||||

|

||||

- [Hacker news post](https://news.ycombinator.com/item?id=35726934) - help us out by voting if you like this project.

|

||||

|

||||

- If you have technological skills and want to contribute to development, have a look at the open issues. If you are new you can have a look at the [good-first-issue](https://github.com/go-skynet/LocalAI/issues?q=is%3Aissue+is%3Aopen+label%3A%22good+first+issue%22) and [help-wanted](https://github.com/go-skynet/LocalAI/issues?q=is%3Aissue+is%3Aopen+label%3A%22help+wanted%22) labels.

|

||||

|

||||

- If you don't have technological skills you can still help by improving the documentation, adding examples, or sharing your user stories with our community. Any help and contribution is welcome!

|

||||

|

||||

## Model compatibility

|

||||

|

||||

@@ -29,15 +57,63 @@ It is compatible with the models supported by [llama.cpp](https://github.com/gge

|

||||

Tested with:

|

||||

- Vicuna

|

||||

- Alpaca

|

||||

- [GPT4ALL](https://github.com/nomic-ai/gpt4all)

|

||||

- [GPT4ALL-J](https://gpt4all.io/models/ggml-gpt4all-j.bin)

|

||||

- [GPT4ALL](https://github.com/nomic-ai/gpt4all) (changes required, see below)

|

||||

- [GPT4ALL-J](https://gpt4all.io/models/ggml-gpt4all-j.bin) (no changes required)

|

||||

- Koala

|

||||

- [cerebras-GPT with ggml](https://huggingface.co/lxe/Cerebras-GPT-2.7B-Alpaca-SP-ggml)

|

||||

- WizardLM

|

||||

- [RWKV](https://github.com/BlinkDL/RWKV-LM) models with [rwkv.cpp](https://github.com/saharNooby/rwkv.cpp)

|

||||

|

||||

It should also be compatible with StableLM and GPTNeoX ggml models (untested)

|

||||

### GPT4ALL

|

||||

|

||||

Note: You might need to convert older models to the new format, see [here](https://github.com/ggerganov/llama.cpp#using-gpt4all) for instance to run `gpt4all`.

|

||||

|

||||

### RWKV

|

||||

|

||||

<details>

|

||||

|

||||

A full example on how to run a rwkv model is in the [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/rwkv).

|

||||

|

||||

Note: rwkv models need to specify the backend `rwkv` in the YAML config files and must be provided together with an associated tokenizer file:

|

||||

|

||||

```

|

||||

36464540 -rw-r--r-- 1 mudler mudler 1.2G May 3 10:51 rwkv_small

|

||||

36464543 -rw-r--r-- 1 mudler mudler 2.4M May 3 10:51 rwkv_small.tokenizer.json

|

||||

```

|

||||

|

||||

</details>

|

||||

|

||||

### Others

|

||||

|

||||

It should also be compatible with StableLM and GPTNeoX ggml models (untested).

|

||||

|

||||

### Hardware requirements

|

||||

|

||||

Depending on the model you are attempting to run, you might need more RAM or CPU resources. See also [here](https://github.com/ggerganov/llama.cpp#memorydisk-requirements) for `ggml` based backends. `rwkv` is less expensive on resources.

|

||||

|

||||

|

||||

### Feature support matrix

|

||||

|

||||

<details>

|

||||

|

||||

| Backend | Compatible models | Completion/Chat endpoint | Audio transcription | Embeddings support | Token stream support | Github | Bindings |
|---|---|---|---|---|---|---|---|
| llama | Vicuna, Alpaca, LLaMa | yes | no | yes (doesn't seem to be accurate) | yes | https://github.com/ggerganov/llama.cpp | https://github.com/go-skynet/go-llama.cpp |
| gpt4all-llama | Vicuna, Alpaca, LLaMa | yes | no | no | yes | https://github.com/nomic-ai/gpt4all | https://github.com/go-skynet/gpt4all |
| gpt4all-mpt | MPT | yes | no | no | yes | https://github.com/nomic-ai/gpt4all | https://github.com/go-skynet/gpt4all |
| gpt4all-j | GPT4ALL-J | yes | no | no | yes | https://github.com/nomic-ai/gpt4all | https://github.com/go-skynet/gpt4all |
| gpt2 | GPT/NeoX, Cerebras | yes | no | no | no | https://github.com/ggerganov/ggml | https://github.com/go-skynet/go-gpt2.cpp |
| dolly | Dolly | yes | no | no | no | https://github.com/ggerganov/ggml | https://github.com/go-skynet/go-gpt2.cpp |
| redpajama | RedPajama | yes | no | no | no | https://github.com/ggerganov/ggml | https://github.com/go-skynet/go-gpt2.cpp |
| stableLM | StableLM GPT/NeoX | yes | no | no | no | https://github.com/ggerganov/ggml | https://github.com/go-skynet/go-gpt2.cpp |
| starcoder | Starcoder | yes | no | no | no | https://github.com/ggerganov/ggml | https://github.com/go-skynet/go-gpt2.cpp |
| bloomz | Bloom | yes | no | no | no | https://github.com/NouamaneTazi/bloomz.cpp | https://github.com/go-skynet/bloomz.cpp |
| rwkv | RWKV | yes | no | no | yes | https://github.com/saharNooby/rwkv.cpp | https://github.com/donomii/go-rwkv.cpp |
| bert-embeddings | bert | no | no | yes | no | https://github.com/skeskinen/bert.cpp | https://github.com/go-skynet/go-bert.cpp |
| whisper | whisper | no | yes | no | no | https://github.com/ggerganov/whisper.cpp | https://github.com/ggerganov/whisper.cpp |

|

||||

|

||||

</details>

|

||||

|

||||

## Usage

|

||||

|

||||

> `LocalAI` comes by default as a container image. You can check out all the available images with corresponding tags [here](https://quay.io/repository/go-skynet/local-ai?tab=tags&tag=latest).

|

||||

@@ -114,11 +190,101 @@ curl http://localhost:8080/v1/chat/completions -H "Content-Type: application/jso

|

||||

|

||||

To build locally, run `make build` (see below).

|

||||

|

||||

## Other examples

|

||||

### Other examples

|

||||

|

||||

To see other examples on how to integrate with other projects, see: [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/).

|

||||

To see other examples on how to integrate with other projects for instance for question answering or for using it with chatbot-ui, see: [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/).

|

||||

|

||||

## Prompt templates

|

||||

|

||||

### Advanced configuration

|

||||

|

||||

LocalAI can be configured to serve user-defined models with a set of default parameters and templates.

|

||||

|

||||

<details>

|

||||

|

||||

You can create multiple `yaml` files in the models path, or specify a single YAML configuration file.

|

||||

Consider the following `models` folder in `examples/chatbot-ui`:

|

||||

|

||||

```

|

||||

base ❯ ls -liah examples/chatbot-ui/models
36487587 drwxr-xr-x 2 mudler mudler 4.0K May 3 12:27 .
36487586 drwxr-xr-x 3 mudler mudler 4.0K May 3 10:42 ..
36465214 -rw-r--r-- 1 mudler mudler 10 Apr 27 07:46 completion.tmpl
36464855 -rw-r--r-- 1 mudler mudler 3.6G Apr 27 00:08 ggml-gpt4all-j
36464537 -rw-r--r-- 1 mudler mudler 245 May 3 10:42 gpt-3.5-turbo.yaml
36467388 -rw-r--r-- 1 mudler mudler 180 Apr 27 07:46 gpt4all.tmpl

|

||||

```

|

||||

|

||||

The `gpt-3.5-turbo.yaml` file defines the `gpt-3.5-turbo` model, which is an alias that uses `gpt4all-j` with pre-defined options.

|

||||

|

||||

For instance, consider the following that declares `gpt-3.5-turbo` backed by the `ggml-gpt4all-j` model:

|

||||

|

||||

```yaml

|

||||

name: gpt-3.5-turbo
# Default model parameters
parameters:
  # Relative to the models path
  model: ggml-gpt4all-j
  # temperature
  temperature: 0.3
  # all the OpenAI request options here..

# Default context size
context_size: 512
threads: 10
# Define a backend (optional). By default it will try to guess the backend the first time the model is interacted with.
backend: gptj # available: llama, stablelm, gpt2, gptj, rwkv
# stopwords (if supported by the backend)
stopwords:
- "HUMAN:"
- "### Response:"
# define chat roles
roles:
  user: "HUMAN:"
  system: "GPT:"
template:
  # ".tmpl" file with the prompt template to use by default for each endpoint. Note that the names below omit the file extension.
  completion: completion
  chat: ggml-gpt4all-j

|

||||

```
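With a config like the one above in place, a client would address the model by its alias rather than by file name. The following is a minimal Python sketch of that idea, not part of LocalAI itself: `build_chat_request` and `BASE_URL` are hypothetical names, the address assumes the default `:8080`, and omitting `temperature` is assumed to fall back to the value from the YAML file.

```python
import json
import urllib.request

# Assumption: the server listens on the default address (:8080) on this host.
BASE_URL = "http://localhost:8080"


def build_chat_request(prompt, model="gpt-3.5-turbo", temperature=None):
    """Build (but do not send) a POST request for /v1/chat/completions."""
    body = {
        "model": model,  # the alias declared under `name:` in the YAML file
        "messages": [{"role": "user", "content": prompt}],
    }
    if temperature is not None:
        # Only include the option when the caller wants to override the default.
        body["temperature"] = temperature
    return urllib.request.Request(
        BASE_URL + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

To try it against a running server, pass the returned object to `urllib.request.urlopen` and decode the JSON response.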

|

||||

|

||||

Specifying a `config-file` via the CLI allows you to declare models in a single file as a list, for instance:

|

||||

|

||||

```yaml

|

||||

- name: list1
  parameters:
    model: testmodel
  context_size: 512
  threads: 10
  stopwords:
  - "HUMAN:"
  - "### Response:"
  roles:
    user: "HUMAN:"
    system: "GPT:"
  template:
    completion: completion
    chat: ggml-gpt4all-j
- name: list2
  parameters:
    model: testmodel
  context_size: 512
  threads: 10
  stopwords:
  - "HUMAN:"
  - "### Response:"
  roles:
    user: "HUMAN:"
    system: "GPT:"
  template:
    completion: completion
    chat: ggml-gpt4all-j

|

||||

```

|

||||

|

||||

See also [chatbot-ui](https://github.com/go-skynet/LocalAI/tree/master/examples/chatbot-ui) as an example on how to use config files.

|

||||

|

||||

</details>

|

||||

|

||||

### Prompt templates

|

||||

|

||||

The API doesn't inject a default prompt for talking to the model. You have to use a prompt similar to what's described in the stanford-alpaca docs: https://github.com/tatsu-lab/stanford_alpaca#data-release.

|

||||

|

||||

@@ -136,11 +302,51 @@ The below instruction describes a task. Write a response that appropriately comp

|

||||

|

||||

See the [prompt-templates](https://github.com/go-skynet/LocalAI/tree/master/prompt-templates) directory in this repository for templates for some of the most popular models.

|

||||

|

||||

|

||||

For the edit endpoint, an example template for alpaca-based models can be:

|

||||

|

||||

```yaml

|

||||

Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:
{{.Instruction}}

### Input:
{{.Input}}

### Response:

|

||||

```
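The placeholders in the template above use Go template syntax (`{{.Instruction}}`, `{{.Input}}`). As a rough illustration of what the final prompt looks like once they are filled in, here is a simplified Python stand-in. It handles only plain `{{.Name}}` fields, and `render` is a hypothetical helper, not the engine LocalAI uses.

```python
import re

EDIT_TEMPLATE = """Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:
{{.Instruction}}

### Input:
{{.Input}}

### Response:
"""


def render(template, **fields):
    """Replace each {{.Name}} placeholder with the keyword argument of the same name."""
    def substitute(match):
        # A missing field raises KeyError instead of silently leaving a gap.
        return str(fields[match.group(1)])

    return re.sub(r"\{\{\s*\.(\w+)\s*\}\}", substitute, template)
```

For instance, `render(EDIT_TEMPLATE, Instruction="rephrase", Input="Black cat jumped out of the window")` yields the prompt text that would be handed to the model for the edit request shown later in this document.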

|

||||

|

||||

</details>

|

||||

|

||||

## API

|

||||

### CLI

|

||||

|

||||

`LocalAI` provides an API for running text generation as a service. It follows the OpenAI reference and can be used as a drop-in replacement. Once a model has been loaded for the first time, it is kept in memory.

|

||||

You can control LocalAI with command-line arguments, for example to specify a binding address or the number of threads.

|

||||

|

||||

<details>

|

||||

|

||||

Usage:

|

||||

|

||||

```

|

||||

local-ai --models-path <model_path> [--address <address>] [--threads <num_threads>]

|

||||

```

|

||||

|

||||

| Parameter    | Environment Variable | Default Value            | Description                                          |
| ------------ | -------------------- | ------------------------ | ---------------------------------------------------- |
| models-path  | MODELS_PATH          |                          | The path where you have models (ending with `.bin`). |
| threads      | THREADS              | Number of physical cores | The number of threads to use for text generation.    |
| address      | ADDRESS              | :8080                    | The address and port to listen on.                   |
| context-size | CONTEXT_SIZE         | 512                      | Default token context size.                          |
| debug        | DEBUG                | false                    | Enable debug mode.                                   |
| config-file  | CONFIG_FILE          | empty                    | Path to a LocalAI config file.                       |
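
Each parameter in the table can be given either as a flag or as an environment variable. The Python sketch below shows one plausible way such values could be resolved. It is an illustration only: `resolve_settings` is a hypothetical helper, and the precedence (flag, then environment variable, then default) is an assumption that the table itself does not state.

```python
import os

# (flag, environment variable, default) taken from the table above.
# models-path has no default, and threads defaults to the number of physical
# cores, which this sketch leaves as None rather than guessing.
PARAMETERS = [
    ("models-path", "MODELS_PATH", None),
    ("threads", "THREADS", None),
    ("address", "ADDRESS", ":8080"),
    ("context-size", "CONTEXT_SIZE", "512"),
    ("debug", "DEBUG", "false"),
    ("config-file", "CONFIG_FILE", ""),
]


def resolve_settings(cli_args, environ=None):
    """Return {flag: value}, preferring CLI args, then the environment, then defaults."""
    environ = os.environ if environ is None else environ
    settings = {}
    for flag, env_name, default in PARAMETERS:
        if flag in cli_args:
            settings[flag] = cli_args[flag]
        elif env_name in environ:
            settings[flag] = environ[env_name]
        else:
            settings[flag] = default
    return settings
```

Under these assumptions, `resolve_settings({"threads": "4"}, environ={"ADDRESS": ":9090"})` would pick 4 threads, listen on `:9090`, and keep the default context size of 512.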

|

||||

|

||||

</details>

|

||||

|

||||

## Setup

|

||||

|

||||

Currently LocalAI comes as a container image and can be used with docker or a container engine of choice. You can check out all the available images with corresponding tags [here](https://quay.io/repository/go-skynet/local-ai?tab=tags&tag=latest).

|

||||

|

||||

### Docker

|

||||

|

||||

<details>

|

||||

Example of starting the API with `docker`:

|

||||

@@ -161,30 +367,123 @@ You should see:

|

||||

└───────────────────────────────────────────────────┘

|

||||

```

|

||||

|

||||

You can control the API server options with command line arguments:

|

||||

</details>

|

||||

|

||||

### Build locally

|

||||

|

||||

<details>

|

||||

|

||||

In order to build the `LocalAI` container image locally you can use `docker`:

|

||||

|

||||

```

|

||||

local-api --models-path <model_path> [--address <address>] [--threads <num_threads>]

|

||||

# build the image

|

||||

docker build -t LocalAI .

|

||||

docker run LocalAI

|

||||

```

|

||||

|

||||

The API takes the following parameters:

|

||||

Or you can build the binary with `make`:

|

||||

|

||||

| Parameter | Environment Variable | Default Value | Description |

|

||||

| ------------ | -------------------- | ------------- | -------------------------------------- |

|

||||

| models-path | MODELS_PATH | | The path where you have models (ending with `.bin`). |

|

||||

| threads | THREADS | Number of Physical cores | The number of threads to use for text generation. |

|

||||

| address | ADDRESS | :8080 | The address and port to listen on. |

|

||||

| context-size | CONTEXT_SIZE | 512 | Default token context size. |

|

||||

| debug | DEBUG | false | Enable debug mode. |

|

||||

|

||||

Once the server is running, you can start making requests to it using HTTP, using the OpenAI API.

|

||||

```

|

||||

make build

|

||||

```

|

||||

|

||||

</details>

|

||||

|

||||

## Advanced configuration

|

||||

### Build on mac

|

||||

|

||||

Building on Mac (M1 or M2) works, but you may need to install some prerequisites using `brew`.

|

||||

|

||||

### Supported OpenAI API endpoints

|

||||

<details>

|

||||

|

||||

The steps below have been tested by one Mac user and found to work. Note that this doesn't use Docker to run the server:

|

||||

|

||||

```

|

||||

# install build dependencies
brew install cmake
brew install go

# clone the repo
git clone https://github.com/go-skynet/LocalAI.git

cd LocalAI

# build the binary
make build

# Download gpt4all-j to models/
wget https://gpt4all.io/models/ggml-gpt4all-j.bin -O models/ggml-gpt4all-j

# Use a template from the examples
cp -rf prompt-templates/ggml-gpt4all-j.tmpl models/

# Run LocalAI
./local-ai --models-path ./models/ --debug

# Now API is accessible at localhost:8080
curl http://localhost:8080/v1/models

curl http://localhost:8080/v1/chat/completions -H "Content-Type: application/json" -d '{
     "model": "ggml-gpt4all-j",
     "messages": [{"role": "user", "content": "How are you?"}],
     "temperature": 0.9
   }'

|

||||

```

|

||||

|

||||

</details>

|

||||

|

||||

### Windows compatibility

|

||||

|

||||

It should work, however you need to make sure you give enough resources to the container. See https://github.com/go-skynet/LocalAI/issues/2

|

||||

|

||||

### Run LocalAI in Kubernetes

|

||||

|

||||

LocalAI can be installed inside Kubernetes with helm.

|

||||

|

||||

<details>

|

||||

|

||||

1. Add the helm repo

|

||||

```bash

|

||||

helm repo add go-skynet https://go-skynet.github.io/helm-charts/

|

||||

```

|

||||

2. Create a values file with your settings:

|

||||

```bash

|

||||

cat <<EOF > values.yaml

|

||||

deployment:
  image: quay.io/go-skynet/local-ai:latest
  env:
    threads: 4
    contextSize: 1024
    modelsPath: "/models"
# Optionally create a PVC, mount the PV to the LocalAI Deployment,
# and download a model to prepopulate the models directory
modelsVolume:
  enabled: true
  url: "https://gpt4all.io/models/ggml-gpt4all-j.bin"
  pvc:
    size: 6Gi
    accessModes:
    - ReadWriteOnce
  auth:
    # Optional value for HTTP basic access authentication header
    basic: "" # 'username:password' base64 encoded
service:
  type: ClusterIP
  annotations: {}
  # If using an AWS load balancer, you'll need to override the default 60s load balancer idle timeout
  # service.beta.kubernetes.io/aws-load-balancer-connection-idle-timeout: "1200"

|

||||

EOF

|

||||

```

|

||||

3. Install the helm chart:

|

||||

```bash

|

||||

helm repo update

|

||||

helm install local-ai go-skynet/local-ai -f values.yaml

|

||||

```

|

||||

|

||||

Also check out the [helm chart repository on GitHub](https://github.com/go-skynet/helm-charts).

|

||||

|

||||

</details>

|

||||

|

||||

## Supported OpenAI API endpoints

|

||||

|

||||

You can check out the [OpenAI API reference](https://platform.openai.com/docs/api-reference/chat/create).

|

||||

|

||||

@@ -195,7 +494,7 @@ Note:

|

||||

- You can also specify the model as part of the OpenAI token.

|

||||

- If only one model is available, the API will use it for all the requests.
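
The notes above imply a fallback chain when choosing which model serves a request. A minimal Python sketch of such a chain follows. `resolve_model` is a hypothetical helper, and the order in which the request body and the token are consulted is an assumption of this sketch, since the notes do not specify it.

```python
def resolve_model(requested, bearer_token, available):
    """Pick the model for a request, or return None when no rule applies.

    requested:    the "model" field of the request body (may be empty)
    bearer_token: the value sent as the OpenAI token (may be None)
    available:    list of model names the server knows about
    """
    if requested:
        return requested
    # The token is only treated as a model name when it matches a known model.
    if bearer_token and bearer_token in available:
        return bearer_token
    # With a single model available there is nothing to choose between.
    if len(available) == 1:
        return available[0]
    return None
```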

|

||||

|

||||

#### Chat completions

|

||||

### Chat completions

|

||||

|

||||

<details>

|

||||

For example, to generate a chat completion, you can send a POST request to the `/v1/chat/completions` endpoint with the instruction as the request body:

|

||||

@@ -211,10 +510,30 @@ curl http://localhost:8080/v1/chat/completions -H "Content-Type: application/jso

|

||||

Available additional parameters: `top_p`, `top_k`, `max_tokens`

|

||||

</details>

|

||||

|

||||

#### Completions

|

||||

### Edit completions

|

||||

|

||||

<details>

|

||||

To generate an edit completion you can send a POST request to the `/v1/edits` endpoint with the instruction as the request body:

|

||||

|

||||

```

|

||||

curl http://localhost:8080/v1/edits -H "Content-Type: application/json" -d '{
     "model": "ggml-koala-7b-model-q4_0-r2.bin",
     "instruction": "rephrase",
     "input": "Black cat jumped out of the window",
     "temperature": 0.7
   }'

|

||||

```

|

||||

|

||||

Available additional parameters: `top_p`, `top_k`, `max_tokens`.

|

||||

|

||||

</details>

|

||||

|

||||

### Completions

|

||||

|

||||

<details>

|

||||

|

||||

To generate a completion, you can send a POST request to the `/v1/completions` endpoint with the instruction as the request body:

|

||||

|

||||

```

|

||||

curl http://localhost:8080/v1/completions -H "Content-Type: application/json" -d '{

|

||||

"model": "ggml-koala-7b-model-q4_0-r2.bin",

|

||||

@@ -227,7 +546,7 @@ Available additional parameters: `top_p`, `top_k`, `max_tokens`

|

||||

|

||||

</details>

|

||||

|

||||

#### List models

|

||||

### List models

|

||||

|

||||

<details>

|

||||

You can list all the models available with:

|

||||

@@ -238,57 +557,51 @@ curl http://localhost:8080/v1/models

|

||||

|

||||

</details>

|

||||

|

||||

## Helm Chart Installation (run LocalAI in Kubernetes)

|

||||

|

||||

LocalAI can be installed inside Kubernetes with helm.

|

||||

### Embeddings

|

||||

|

||||

<details>

|

||||

The local-ai Helm chart supports two options for the LocalAI server's models directory:

|

||||

1. Basic deployment with no persistent volume. You must manually update the Deployment to configure your own models directory.

|

||||

|

||||

Install the chart with `.Values.deployment.volumes.enabled == false` and `.Values.dataVolume.enabled == false`.

|

||||

The embedding endpoint is experimental and enabled only if the model is configured with `embeddings: true` in its `yaml` file, for example:

|

||||

|

||||

2. Advanced, two-phase deployment to provision the models directory using a DataVolume. Requires [Containerized Data Importer CDI](https://github.com/kubevirt/containerized-data-importer) to be pre-installed in your cluster.

|

||||

```yaml

|

||||

name: text-embedding-ada-002
parameters:
  model: bert
embeddings: true
backend: "bert-embeddings"

|

||||

```
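Once a model is configured this way, it can be queried through the `/v1/embeddings` route. The sketch below is a hedged Python illustration: the helper names are hypothetical, the address assumes the default `:8080`, and the response shape (`data[i].embedding`) is assumed to match the OpenAI embeddings format that LocalAI mimics.

```python
import json
import math
import urllib.request


def build_embeddings_request(text, model="text-embedding-ada-002",
                             base_url="http://localhost:8080"):
    """Build (but do not send) a POST request for /v1/embeddings."""
    body = {"model": model, "input": text}
    return urllib.request.Request(
        base_url + "/v1/embeddings",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_vectors(response):
    """Return the embedding vectors from an OpenAI-style response dict."""
    return [item["embedding"] for item in response["data"]]


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0
```

Comparing the vectors of two texts with `cosine_similarity` is a common way to use embeddings, for example to rank documents against a question.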

|

||||

|

||||

First, install the chart with `.Values.deployment.volumes.enabled == false` and `.Values.dataVolume.enabled == true`:

|

||||

```bash

|

||||

helm install local-ai charts/local-ai -n local-ai --create-namespace

|

||||

```

|

||||

Wait for CDI to create an importer Pod for the DataVolume and for the importer pod to finish provisioning the model archive inside the PV.

|

||||

There is an example available [here](https://github.com/go-skynet/LocalAI/tree/master/examples/query_data/).

|

||||

|

||||

Once the PV is provisioned and the importer Pod removed, set `.Values.deployment.volumes.enabled == true` and `.Values.dataVolume.enabled == false` and upgrade the chart:

|

||||

```bash

|

||||

helm upgrade local-ai -n local-ai charts/local-ai

|

||||

```

|

||||

This will update the local-ai Deployment to mount the PV that was provisioned by the DataVolume.

|

||||

Note: embeddings are supported only with `llama.cpp`-compatible models and `bert` models. `bert` is more performant and available independently of the LLM model.

|

||||

|

||||

</details>

|

||||

|

||||

## Blog posts

|

||||

### Transcriptions endpoint

|

||||

|

||||

- https://medium.com/@tyler_97636/k8sgpt-localai-unlock-kubernetes-superpowers-for-free-584790de9b65

|

||||

<details>

|

||||

|

||||

## Windows compatibility

|

||||

Note: this requires ffmpeg in the container image, which is currently not shipped due to licensing issues. We will prepare separate images with ffmpeg (stay tuned!).

|

||||

|

||||

It should work, however you need to make sure you give enough resources to the container. See https://github.com/go-skynet/LocalAI/issues/2

|

||||

Download one of the models from https://huggingface.co/ggerganov/whisper.cpp/tree/main in the `models` folder, and create a YAML file for your model:

|

||||

|

||||

## Build locally

|

||||

|

||||

Pre-built images should work well on most modern hardware; however, you can, and sometimes may need to, build the images manually.

|

||||

|

||||

In order to build the `LocalAI` container image locally you can use `docker`:

|

||||

|

||||

```

|

||||

# build the image

|

||||

docker build -t LocalAI .

|

||||

docker run LocalAI

|

||||

```yaml

|

||||

name: whisper-1
backend: whisper
parameters:
  model: whisper-en

|

||||

```

|

||||

|

||||

Or build the binary with `make`:

|

||||

The transcriptions endpoint then can be tested like so:

|

||||

```

|

||||

wget --quiet --show-progress -O gb1.ogg https://upload.wikimedia.org/wikipedia/commons/1/1f/George_W_Bush_Columbia_FINAL.ogg

|

||||

|

||||

curl http://localhost:8080/v1/audio/transcriptions -H "Content-Type: multipart/form-data" -F file="@$PWD/gb1.ogg" -F model="whisper-1"

|

||||

|

||||

{"text":"My fellow Americans, this day has brought terrible news and great sadness to our country.At nine o'clock this morning, Mission Control in Houston lost contact with our Space ShuttleColumbia.A short time later, debris was seen falling from the skies above Texas.The Columbia's lost.There are no survivors.One board was a crew of seven.Colonel Rick Husband, Lieutenant Colonel Michael Anderson, Commander Laurel Clark, Captain DavidBrown, Commander William McCool, Dr. Kultna Shavla, and Elon Ramon, a colonel in the IsraeliAir Force.These men and women assumed great risk in the service to all humanity.In an age when spaceflight has come to seem almost routine, it is easy to overlook thedangers of travel by rocket and the difficulties of navigating the fierce outer atmosphere ofthe Earth.These astronauts knew the dangers, and they faced them willingly, knowing they had a highand noble purpose in life.Because of their courage and daring and idealism, we will miss them all the more.All Americans today are thinking as well of the families of these men and women who havebeen given this sudden shock and grief.You're not alone.Our entire nation agrees with you, and those you loved will always have the respect andgratitude of this country.The cause in which they died will continue.Mankind has led into the darkness beyond our world by the inspiration of discovery andthe longing to understand.Our journey into space will go on.In the skies today, we saw destruction and tragedy.As farther than we can see, there is comfort and hope.In the words of the prophet Isaiah, \"Lift your eyes and look to the heavens who createdall these, he who brings out the starry hosts one by one and calls them each by name.\"Because of his great power and mighty strength, not one of them is missing.The same creator who names the stars also knows the names of the seven souls we mourntoday.The crew of the shuttle Columbia did not return safely to Earth yet we can pray that all aresafely home.May God 
bless the grieving families and may God continue to bless America.[BLANK_AUDIO]"}

|

||||

```

|

||||

make build

|

||||

```

|

||||

|

||||

</details>

|

||||

|

||||

## Frequently asked questions

|

||||

|

||||

@@ -333,7 +646,7 @@ Not currently, as ggml doesn't support GPUs yet: https://github.com/ggerganov/ll

|

||||

### Where is the webUI?

|

||||

|

||||

<details>

|

||||

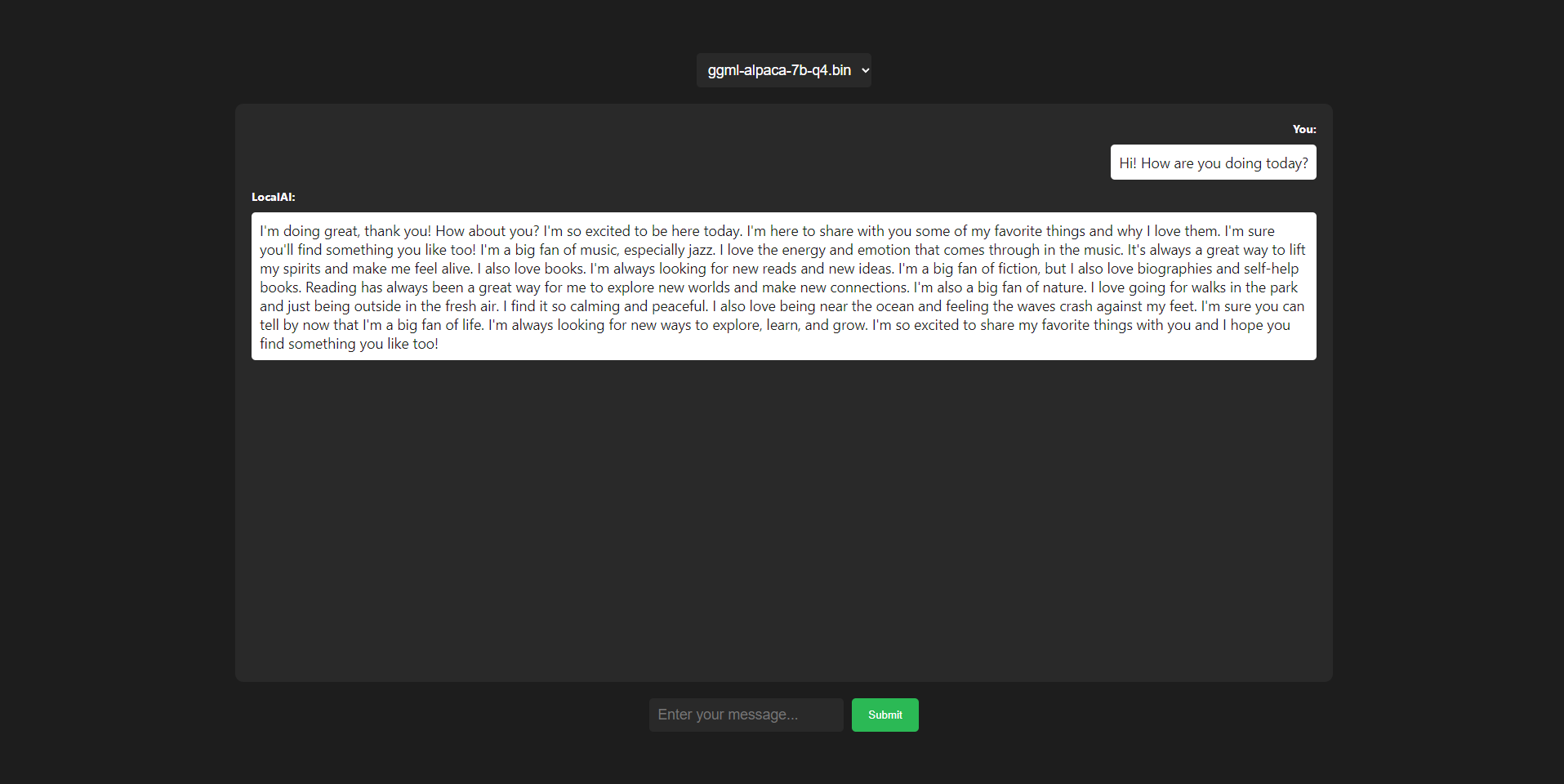

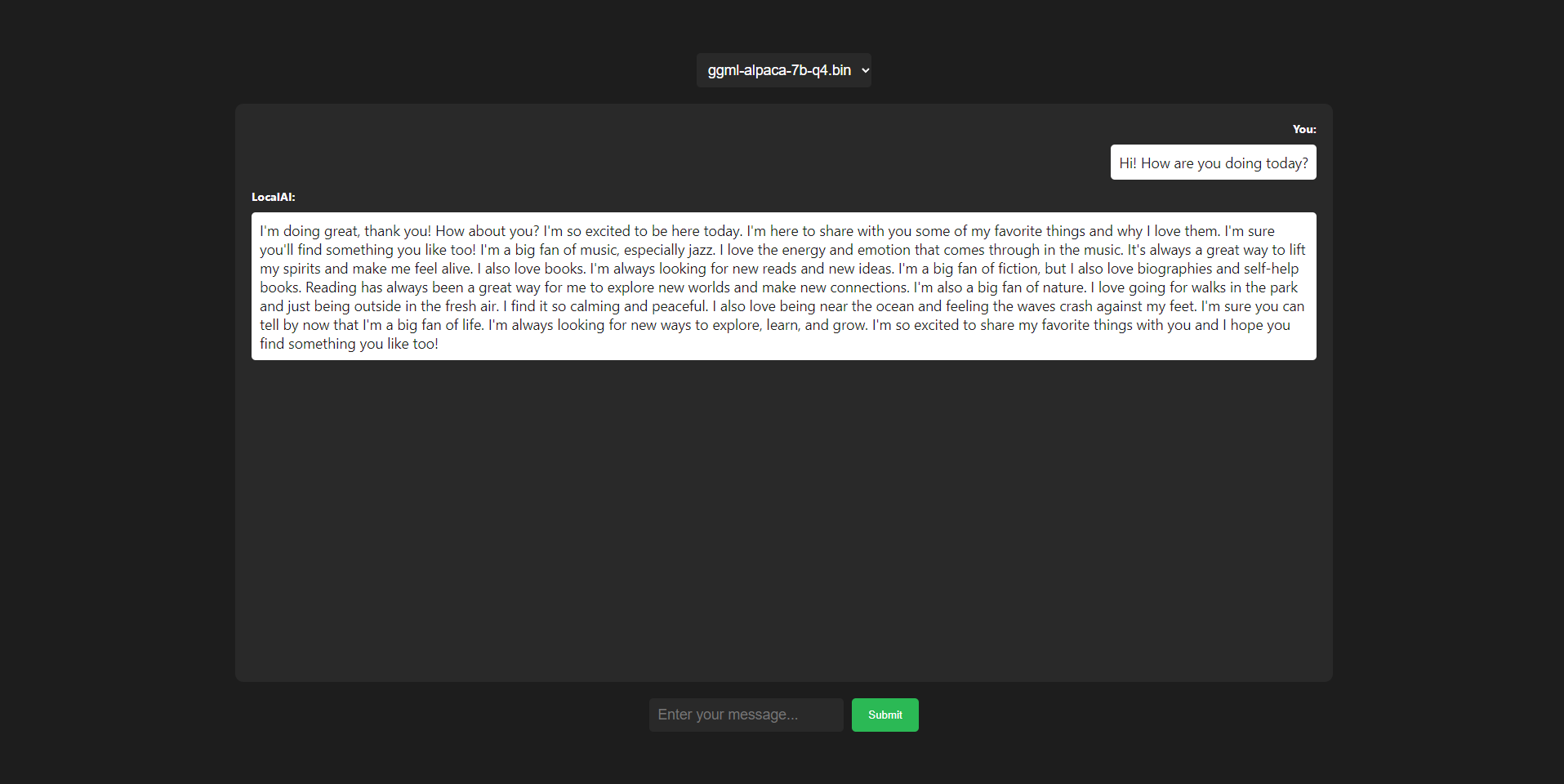

We are working on providing a good out-of-the-box experience. However, as LocalAI is an API, you can already plug it into existing projects that provide UI interfaces to OpenAI's APIs. There are several on GitHub already, and they should be compatible with LocalAI (as it mimics the OpenAI API).

|

||||

localai-webui and chatbot-ui are available in the examples section and can be set up as per the instructions there. However, as LocalAI is an API, you can already plug it into existing projects that provide UI interfaces to OpenAI's APIs. There are several on GitHub already, and they should be compatible with LocalAI (as it mimics the OpenAI API).

|

||||

|

||||

</details>

|

||||

|

||||

@@ -351,26 +664,50 @@ Feel free to open up a PR to get your project listed!

|

||||

|

||||

- [Kairos](https://github.com/kairos-io/kairos)

|

||||

- [k8sgpt](https://github.com/k8sgpt-ai/k8sgpt#running-local-models)

|

||||

- [Spark](https://github.com/cedriking/spark)

|

||||

|

||||

## Blog posts and other articles

|

||||

|

||||

- https://medium.com/@tyler_97636/k8sgpt-localai-unlock-kubernetes-superpowers-for-free-584790de9b65

|

||||

- https://kairos.io/docs/examples/localai/

|

||||

|

||||

## Short-term roadmap

|

||||

|

||||

- [x] Mimic OpenAI API (https://github.com/go-skynet/LocalAI/issues/10)

|

||||

- [ ] Binary releases (https://github.com/go-skynet/LocalAI/issues/6)

|

||||

- [ ] Upstream our golang bindings to llama.cpp (https://github.com/ggerganov/llama.cpp/issues/351) and gpt4all

|

||||

- [ ] Upstream our golang bindings to llama.cpp (https://github.com/ggerganov/llama.cpp/issues/351) and [gpt4all](https://github.com/go-skynet/LocalAI/issues/85)

|

||||

- [x] Multi-model support

|

||||

- [ ] Have a webUI!

|

||||

- [ ] Allow configuration of defaults for models.

|

||||

- [ ] Enable automatic downloading of models from a curated gallery, with only free-licensed models.

|

||||

- [x] Have a webUI!

|

||||

- [x] Allow configuration of defaults for models.

|

||||

- [ ] Enable automatic downloading of models from a curated gallery, with only free-licensed models, directly from the webui.

|

||||

|

||||

## Star history

|

||||

|

||||

[Star history chart](https://star-history.com/#go-skynet/LocalAI&Date)

|

||||

|

||||

## License

|

||||

|

||||

LocalAI is a community-driven project. It was initially created by [Ettore Di Giacinto](https://github.com/mudler/) at the [SpectroCloud OSS Office](https://github.com/spectrocloud).

|

||||

|

||||

MIT

|

||||

|

||||

## Golang bindings used

|

||||

|

||||

- [go-skynet/go-llama.cpp](https://github.com/go-skynet/go-llama.cpp)

|

||||

- [go-skynet/go-gpt4all-j.cpp](https://github.com/go-skynet/go-gpt4all-j.cpp)

|

||||

- [go-skynet/go-gpt2.cpp](https://github.com/go-skynet/go-gpt2.cpp)

|

||||

- [go-skynet/go-bert.cpp](https://github.com/go-skynet/go-bert.cpp)

|

||||

- [donomii/go-rwkv.cpp](https://github.com/donomii/go-rwkv.cpp)

|

||||

|

||||

## Acknowledgements

|

||||

|

||||

- [llama.cpp](https://github.com/ggerganov/llama.cpp)

|

||||

- https://github.com/tatsu-lab/stanford_alpaca

|

||||

- https://github.com/cornelk/llama-go for the initial ideas

|

||||

- https://github.com/antimatter15/alpaca.cpp for the light model version (this is compatible and tested only with that checkpoint model!)

|

||||

|

||||

## Contributors

|

||||

|

||||

<a href="https://github.com/go-skynet/LocalAI/graphs/contributors">

|

||||

<img src="https://contrib.rocks/image?repo=go-skynet/LocalAI" />

|

||||

</a>

|

||||

|

||||

27

api/api.go


@@ -6,6 +6,7 @@ import (

|

||||

model "github.com/go-skynet/LocalAI/pkg/model"

|

||||

"github.com/gofiber/fiber/v2"

|

||||

"github.com/gofiber/fiber/v2/middleware/cors"

|

||||

"github.com/gofiber/fiber/v2/middleware/logger"

|

||||

"github.com/gofiber/fiber/v2/middleware/recover"

|

||||

"github.com/rs/zerolog"

|

||||

"github.com/rs/zerolog/log"

|

||||

@@ -40,6 +41,12 @@ func App(configFile string, loader *model.ModelLoader, threads, ctxSize int, f16

|

||||

},

|

||||

})

|

||||

|

||||

if debug {

|

||||

app.Use(logger.New(logger.Config{

|

||||

Format: "[${ip}]:${port} ${status} - ${method} ${path}\n",

|

||||

}))

|

||||

}

|

||||

|

||||

cm := make(ConfigMerger)

|

||||

if err := cm.LoadConfigs(loader.ModelPath); err != nil {

|

||||

log.Error().Msgf("error loading config files: %s", err.Error())

|

||||

@@ -61,11 +68,23 @@ func App(configFile string, loader *model.ModelLoader, threads, ctxSize int, f16

|

||||

app.Use(cors.New())

|

||||

|

||||

// openAI compatible API endpoint

|

||||

app.Post("/v1/chat/completions", openAIEndpoint(cm, true, debug, loader, threads, ctxSize, f16))

|

||||

app.Post("/chat/completions", openAIEndpoint(cm, true, debug, loader, threads, ctxSize, f16))

|

||||

app.Post("/v1/chat/completions", chatEndpoint(cm, debug, loader, threads, ctxSize, f16))

|

||||

app.Post("/chat/completions", chatEndpoint(cm, debug, loader, threads, ctxSize, f16))

|

||||

|

||||

app.Post("/v1/completions", openAIEndpoint(cm, false, debug, loader, threads, ctxSize, f16))

|

||||

app.Post("/completions", openAIEndpoint(cm, false, debug, loader, threads, ctxSize, f16))

|

||||

app.Post("/v1/edits", editEndpoint(cm, debug, loader, threads, ctxSize, f16))

|

||||

app.Post("/edits", editEndpoint(cm, debug, loader, threads, ctxSize, f16))

|

||||

|

||||

app.Post("/v1/completions", completionEndpoint(cm, debug, loader, threads, ctxSize, f16))

|

||||

app.Post("/completions", completionEndpoint(cm, debug, loader, threads, ctxSize, f16))

|

||||

|

||||

app.Post("/v1/embeddings", embeddingsEndpoint(cm, debug, loader, threads, ctxSize, f16))

|

||||

app.Post("/embeddings", embeddingsEndpoint(cm, debug, loader, threads, ctxSize, f16))

|

||||

|

||||

// /v1/engines/{engine_id}/embeddings

|

||||

|

||||

app.Post("/v1/engines/:model/embeddings", embeddingsEndpoint(cm, debug, loader, threads, ctxSize, f16))

|

||||

|

||||

app.Post("/v1/audio/transcriptions", transcriptEndpoint(cm, debug, loader, threads, ctxSize, f16))

|

||||

|

||||

app.Get("/v1/models", listModels(loader, cm))

|

||||

app.Get("/models", listModels(loader, cm))

|

||||

|

||||

@@ -10,6 +10,7 @@ import (

|

||||

. "github.com/onsi/ginkgo/v2"

|

||||

. "github.com/onsi/gomega"

|

||||

|

||||

openaigo "github.com/otiai10/openaigo"

|

||||

"github.com/sashabaranov/go-openai"

|

||||

)

|

||||

|

||||

@@ -18,6 +19,7 @@ var _ = Describe("API test", func() {

|

||||

var app *fiber.App

|

||||

var modelLoader *model.ModelLoader

|

||||

var client *openai.Client

|

||||

var client2 *openaigo.Client

|

||||

Context("API query", func() {

|

||||

BeforeEach(func() {

|

||||

modelLoader = model.NewModelLoader(os.Getenv("MODELS_PATH"))

|

||||

@@ -27,6 +29,9 @@ var _ = Describe("API test", func() {

|

||||

defaultConfig := openai.DefaultConfig("")

|

||||

defaultConfig.BaseURL = "http://127.0.0.1:9090/v1"

|

||||

|

||||

client2 = openaigo.NewClient("")

|

||||

client2.BaseURL = defaultConfig.BaseURL

|

||||

|

||||

// Wait for API to be ready

|

||||

client = openai.NewClientWithConfig(defaultConfig)

|

||||

Eventually(func() error {

|

||||

@@ -74,7 +79,7 @@ var _ = Describe("API test", func() {

|

||||

It("returns errors", func() {

|

||||

_, err := client.CreateCompletion(context.TODO(), openai.CompletionRequest{Model: "foomodel", Prompt: "abcdedfghikl"})

|

||||

Expect(err).To(HaveOccurred())

|

||||

Expect(err.Error()).To(ContainSubstring("error, status code: 500, message: llama: model does not exist"))

|

||||