Mirror of https://github.com/mudler/LocalAI.git, synced 2026-02-03 03:02:38 -05:00

Compare commits

38 Commits

| Author | SHA1 | Date |
|---|---|---|
| | 7e5fe35ae4 | |
| | 8c8cf38d4d | |
| | 75b25297fd | |
| | 009ee47fe2 | |
| | ec2adc2c03 | |
| | ad301e6ed7 | |
| | d094381e5d | |
| | 3ff9bbd217 | |
| | e62ee2bc06 | |
| | b49721cdd1 | |
| | 64c0a7967f | |
| | e96eadab40 | |
| | e73283121b | |
| | 857d13e8d6 | |
| | 91db3d4d5c | |
| | 961cf29217 | |
| | c839b334eb | |
| | 714bfcd45b | |
| | 77ce8b953e | |
| | 01ada95941 | |
| | eabdc5042a | |
| | 96267d9437 | |
| | 9497a24127 | |
| | fdf75c6d0e | |
| | 6352308882 | |
| | a8172a0f4e | |
| | ebcd10d66f | |
| | 885642915f | |
| | 2e424491c0 | |
| | aa6faef8f7 | |
| | b3254baf60 | |
| | 0a43d27f0e | |
| | 3fe11fe24d | |
| | af18fdc749 | |
| | 32b5eddd7d | |
| | 07c3aa1869 | |
| | e59bad89e7 | |
| | b971807980 | |

9

.github/bump_deps.sh

vendored

Executable file

@@ -0,0 +1,9 @@
+#!/bin/bash
+set -xe
+REPO=$1
+BRANCH=$2
+VAR=$3
+
+LAST_COMMIT=$(curl -s -H "Accept: application/vnd.github.VERSION.sha" "https://api.github.com/repos/$REPO/commits/$BRANCH")
+
+sed -i Makefile -e "s/$VAR?=.*/$VAR?=$LAST_COMMIT/"
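The `sed` call in `bump_deps.sh` rewrites a `NAME?=value` pin in the Makefile. A minimal Go sketch of the same substitution follows; `bumpPin` is a hypothetical helper, and anchoring the match to the start of a line is an extra assumption that the shell script does not make.

```go
package main

import (
	"fmt"
	"regexp"
)

// bumpPin rewrites the `name?=value` assignment in Makefile text so that it
// points at sha. Unlike the sed expression, the pattern is anchored to the
// start of a line (an assumption added here, not taken from the script).
func bumpPin(makefile, name, sha string) string {
	re := regexp.MustCompile(`(?m)^` + regexp.QuoteMeta(name) + `\?=.*$`)
	return re.ReplaceAllLiteralString(makefile, name+"?="+sha)
}

func main() {
	in := "GOLLAMA_VERSION?=old\nRWKV_VERSION?=keep\n"
	fmt.Print(bumpPin(in, "GOLLAMA_VERSION", "67ff6a4"))
}
```

Only the line whose variable name matches is touched; other pins are left as they are.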

42

.github/workflows/bump_deps.yaml

vendored

Normal file

@@ -0,0 +1,42 @@
+name: Bump dependencies
+on:
+  schedule:
+    - cron: 0 20 * * *
+  workflow_dispatch:
+jobs:
+  bump:
+    strategy:
+      fail-fast: false
+      matrix:
+        include:
+          - repository: "go-skynet/go-gpt4all-j.cpp"
+            variable: "GOGPT4ALLJ_VERSION"
+            branch: "master"
+          - repository: "go-skynet/go-llama.cpp"
+            variable: "GOLLAMA_VERSION"
+            branch: "master"
+          - repository: "go-skynet/go-gpt2.cpp"
+            variable: "GOGPT2_VERSION"
+            branch: "master"
+          - repository: "donomii/go-rwkv.cpp"
+            variable: "RWKV_VERSION"
+            branch: "main"
+    runs-on: ubuntu-latest
+    steps:
+    - uses: actions/checkout@v3
+    - name: Bump dependencies 🔧
+      run: |
+        bash .github/bump_deps.sh ${{ matrix.repository }} ${{ matrix.branch }} ${{ matrix.variable }}
+    - name: Create Pull Request
+      uses: peter-evans/create-pull-request@v5
+      with:
+        token: ${{ secrets.UPDATE_BOT_TOKEN }}
+        push-to-fork: ci-forks/LocalAI
+        commit-message: ':arrow_up: Update ${{ matrix.repository }}'
+        title: ':arrow_up: Update ${{ matrix.repository }}'
+        branch: "update/${{ matrix.variable }}"
+        body: Bump of ${{ matrix.repository }} version
+        signoff: true
+
+
+

17

Makefile

@@ -2,13 +2,10 @@ GOCMD=go
 GOTEST=$(GOCMD) test
 GOVET=$(GOCMD) vet
 BINARY_NAME=local-ai
-# renovate: datasource=github-tags depName=go-skynet/go-llama.cpp
-GOLLAMA_VERSION?=llama.cpp-f4cef87
-# renovate: datasource=git-refs packageNameTemplate=https://github.com/go-skynet/go-gpt4all-j.cpp currentValueTemplate=master depNameTemplate=go-gpt4all-j.cpp
-GOGPT4ALLJ_VERSION?=1f7bff57f66cb7062e40d0ac3abd2217815e5109
-# renovate: datasource=git-refs packageNameTemplate=https://github.com/go-skynet/go-gpt2.cpp currentValueTemplate=master depNameTemplate=go-gpt2.cpp
-GOGPT2_VERSION?=245a5bfe6708ab80dc5c733dcdbfbe3cfd2acdaa
 
+GOLLAMA_VERSION?=67ff6a4db244b37e6efb4e6a5c5536d2bfae215b
+GOGPT4ALLJ_VERSION?=1f7bff57f66cb7062e40d0ac3abd2217815e5109
+GOGPT2_VERSION?=245a5bfe6708ab80dc5c733dcdbfbe3cfd2acdaa
 RWKV_REPO?=https://github.com/donomii/go-rwkv.cpp
 RWKV_VERSION?=af62fcc432be2847acb6e0688b2c2491d6588d58
 
@@ -54,6 +51,9 @@ go-gpt4all-j:
 go-rwkv:
 	git clone --recurse-submodules $(RWKV_REPO) go-rwkv
 	cd go-rwkv && git checkout -b build $(RWKV_VERSION) && git submodule update --init --recursive --depth 1
+	@find ./go-rwkv -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_rwkv_/g' {} +
+	@find ./go-rwkv -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_rwkv_/g' {} +
+	@find ./go-rwkv -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_rwkv_/g' {} +
 
 go-rwkv/librwkv.a: go-rwkv
 	cd go-rwkv && cd rwkv.cpp && cmake . -DRWKV_BUILD_SHARED_LIBRARY=OFF && cmake --build . && cp librwkv.a .. && cp ggml/src/libggml.a ..
@@ -77,7 +77,8 @@ go-gpt2/libgpt2.a: go-gpt2
 	$(MAKE) -C go-gpt2 $(GENERIC_PREFIX)libgpt2.a
 
 go-llama:
-	git clone -b $(GOLLAMA_VERSION) --recurse-submodules https://github.com/go-skynet/go-llama.cpp go-llama
+	git clone --recurse-submodules https://github.com/go-skynet/go-llama.cpp go-llama
+	cd go-llama && git checkout -b build $(GOLLAMA_VERSION) && git submodule update --init --recursive --depth 1
 
 go-llama/libbinding.a: go-llama
 	$(MAKE) -C go-llama $(GENERIC_PREFIX)libbinding.a
@@ -129,7 +130,7 @@ test-models/testmodel:
 
 test: prepare test-models/testmodel
 	cp tests/fixtures/* test-models
-	@C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} CONFIG_FILE=$(abspath ./)/test-models/config.yaml MODELS_PATH=$(abspath ./)/test-models $(GOCMD) test -v -timeout 30m ./...
+	@C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} CONFIG_FILE=$(abspath ./)/test-models/config.yaml MODELS_PATH=$(abspath ./)/test-models $(GOCMD) run github.com/onsi/ginkgo/v2/ginkgo -v -r ./...
 
 ## Help:
 help: ## Show this help.

31

README.md

@@ -19,6 +19,8 @@
 
 LocalAI is a community-driven project, focused on making the AI accessible to anyone. Any contribution, feedback and PR is welcome! It was initially created by [mudler](https://github.com/mudler/) at the [SpectroCloud OSS Office](https://github.com/spectrocloud).
 
+See [examples on how to integrate LocalAI](https://github.com/go-skynet/LocalAI/tree/master/examples/).
+
 ### News
 
 - 02-05-2023: Support for `rwkv.cpp` models ( https://github.com/go-skynet/LocalAI/pull/158 ) and for `/edits` endpoint

@@ -45,26 +47,45 @@ Tested with:

|

||||

- [GPT4ALL-J](https://gpt4all.io/models/ggml-gpt4all-j.bin)

|

||||

- Koala

|

||||

- [cerebras-GPT with ggml](https://huggingface.co/lxe/Cerebras-GPT-2.7B-Alpaca-SP-ggml)

|

||||

- WizardLM

|

||||

- [RWKV](https://github.com/BlinkDL/RWKV-LM) models with [rwkv.cpp](https://github.com/saharNooby/rwkv.cpp)

|

||||

|

||||

It should also be compatible with StableLM and GPTNeoX ggml models (untested)

|

||||

### Vicuna, Alpaca, LLaMa...

|

||||

|

||||

[llama.cpp](https://github.com/ggerganov/llama.cpp) based models are compatible

|

||||

|

||||

### GPT4ALL

|

||||

|

||||

Note: You might need to convert older models to the new format, see [here](https://github.com/ggerganov/llama.cpp#using-gpt4all) for instance to run `gpt4all`.

|

||||

|

||||

### GPT4ALL-J

|

||||

|

||||

No changes required to the model.

|

||||

|

||||

### RWKV

|

||||

|

||||

<details>

|

||||

|

||||

For `rwkv` models, you need to put also the associated tokenizer along with the ggml model:

|

||||

A full example on how to run a rwkv model is in the [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/rwkv).

|

||||

|

||||

Note: rwkv models have an associated tokenizer along that needs to be provided with it:

|

||||

|

||||

```

|

||||

ls models

|

||||

36464540 -rw-r--r-- 1 mudler mudler 1.2G May 3 10:51 rwkv_small

|

||||

36464543 -rw-r--r-- 1 mudler mudler 2.4M May 3 10:51 rwkv_small.tokenizer.json

|

||||

```

|

||||

|

||||

</details>

|

||||

|

||||

### Others

|

||||

|

||||

It should also be compatible with StableLM and GPTNeoX ggml models (untested).

|

||||

|

||||

### Hardware requirements

|

||||

|

||||

Depending on the model you are attempting to run might need more RAM or CPU resources. Check out also [here](https://github.com/ggerganov/llama.cpp#memorydisk-requirements) for `ggml` based backends. `rwkv` is less expensive on resources.

|

||||

|

||||

|

||||

## Usage

|

||||

|

||||

> `LocalAI` comes by default as a container image. You can check out all the available images with corresponding tags [here](https://quay.io/repository/go-skynet/local-ai?tab=tags&tag=latest).

|

||||

@@ -143,8 +164,6 @@ To build locally, run `make build` (see below).

|

||||

|

||||

### Other examples

|

||||

|

||||

|

||||

|

||||

To see other examples on how to integrate with other projects for instance chatbot-ui, see: [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/).

|

||||

|

||||

|

||||

@@ -553,7 +572,7 @@ Not currently, as ggml doesn't support GPUs yet: https://github.com/ggerganov/ll
 ### Where is the webUI?
 
 <details>
-We are working on to have a good out of the box experience - however as LocalAI is an API you can already plug it into existing projects that provides are UI interfaces to OpenAI's APIs. There are several already on github, and should be compatible with LocalAI already (as it mimics the OpenAI API)
+There is the availability of localai-webui and chatbot-ui in the examples section and can be setup as per the instructions. However as LocalAI is an API you can already plug it into existing projects that provides are UI interfaces to OpenAI's APIs. There are several already on github, and should be compatible with LocalAI already (as it mimics the OpenAI API)
 
 </details>
 

14

api/api.go

@@ -6,6 +6,7 @@ import (
 	model "github.com/go-skynet/LocalAI/pkg/model"
 	"github.com/gofiber/fiber/v2"
 	"github.com/gofiber/fiber/v2/middleware/cors"
+	"github.com/gofiber/fiber/v2/middleware/logger"
 	"github.com/gofiber/fiber/v2/middleware/recover"
 	"github.com/rs/zerolog"
 	"github.com/rs/zerolog/log"
@@ -40,6 +41,12 @@ func App(configFile string, loader *model.ModelLoader, threads, ctxSize int, f16
 		},
 	})
 
+	if debug {
+		app.Use(logger.New(logger.Config{
+			Format: "[${ip}]:${port} ${status} - ${method} ${path}\n",
+		}))
+	}
+
 	cm := make(ConfigMerger)
 	if err := cm.LoadConfigs(loader.ModelPath); err != nil {
 		log.Error().Msgf("error loading config files: %s", err.Error())
@@ -70,6 +77,13 @@ func App(configFile string, loader *model.ModelLoader, threads, ctxSize int, f16
 	app.Post("/v1/completions", completionEndpoint(cm, debug, loader, threads, ctxSize, f16))
 	app.Post("/completions", completionEndpoint(cm, debug, loader, threads, ctxSize, f16))
 
+	app.Post("/v1/embeddings", embeddingsEndpoint(cm, debug, loader, threads, ctxSize, f16))
+	app.Post("/embeddings", embeddingsEndpoint(cm, debug, loader, threads, ctxSize, f16))
+
+	// /v1/engines/{engine_id}/embeddings
+
+	app.Post("/v1/engines/:model/embeddings", embeddingsEndpoint(cm, debug, loader, threads, ctxSize, f16))
+
 	app.Get("/v1/models", listModels(loader, cm))
 	app.Get("/models", listModels(loader, cm))
 
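The route hunk above mounts each handler twice, once under `/v1/...` and once without the version prefix. The sketch below shows the same aliasing idea with the standard library's `net/http` instead of Fiber, so that it runs without third-party modules; `registerAliases`, `statusFor` and `newMux` are hypothetical names used only for this illustration.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// registerAliases mounts one handler under both the versioned and the
// unversioned path, as the diff does for /v1/embeddings and /embeddings.
func registerAliases(mux *http.ServeMux, path string, h http.HandlerFunc) {
	mux.HandleFunc("/v1"+path, h)
	mux.HandleFunc(path, h)
}

// statusFor sends an in-memory POST to path and returns the status code.
func statusFor(mux *http.ServeMux, path string) int {
	rec := httptest.NewRecorder()
	mux.ServeHTTP(rec, httptest.NewRequest(http.MethodPost, path, nil))
	return rec.Code
}

// newMux builds a mux with a placeholder embeddings handler on both paths.
func newMux() *http.ServeMux {
	mux := http.NewServeMux()
	registerAliases(mux, "/embeddings", func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(http.StatusOK)
	})
	return mux
}

func main() {
	mux := newMux()
	for _, p := range []string{"/v1/embeddings", "/embeddings", "/missing"} {
		fmt.Println(p, statusFor(mux, p))
	}
}
```

Registering through one helper keeps the two paths from drifting apart when a handler's arguments change.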

179

api/config.go

@@ -1,12 +1,16 @@

|

||||

package api

|

||||

|

||||

import (

|

||||

"encoding/json"

|

||||

"fmt"

|

||||

"io/ioutil"

|

||||

"os"

|

||||

"path/filepath"

|

||||

"strings"

|

||||

|

||||

model "github.com/go-skynet/LocalAI/pkg/model"

|

||||

"github.com/gofiber/fiber/v2"

|

||||

"github.com/rs/zerolog/log"

|

||||

"gopkg.in/yaml.v3"

|

||||

)

|

||||

|

||||

@@ -21,8 +25,14 @@ type Config struct {

|

||||

Threads int `yaml:"threads"`

|

||||

Debug bool `yaml:"debug"`

|

||||

Roles map[string]string `yaml:"roles"`

|

||||

Embeddings bool `yaml:"embeddings"`

|

||||

Backend string `yaml:"backend"`

|

||||

TemplateConfig TemplateConfig `yaml:"template"`

|

||||

MirostatETA float64 `yaml:"mirostat_eta"`

|

||||

MirostatTAU float64 `yaml:"mirostat_tau"`

|

||||

Mirostat int `yaml:"mirostat"`

|

||||

|

||||

PromptStrings, InputStrings []string

|

||||

}

|

||||

|

||||

type TemplateConfig struct {

|

||||

@@ -100,3 +110,172 @@ func (cm ConfigMerger) LoadConfigs(path string) error {

|

||||

|

||||

return nil

|

||||

}

|

||||

|

||||

func updateConfig(config *Config, input *OpenAIRequest) {

|

||||

if input.Echo {

|

||||

config.Echo = input.Echo

|

||||

}

|

||||

if input.TopK != 0 {

|

||||

config.TopK = input.TopK

|

||||

}

|

||||

if input.TopP != 0 {

|

||||

config.TopP = input.TopP

|

||||

}

|

||||

|

||||

if input.Temperature != 0 {

|

||||

config.Temperature = input.Temperature

|

||||

}

|

||||

|

||||

if input.Maxtokens != 0 {

|

||||

config.Maxtokens = input.Maxtokens

|

||||

}

|

||||

|

||||

switch stop := input.Stop.(type) {

|

||||

case string:

|

||||

if stop != "" {

|

||||

config.StopWords = append(config.StopWords, stop)

|

||||

}

|

||||

case []interface{}:

|

||||

for _, pp := range stop {

|

||||

if s, ok := pp.(string); ok {

|

||||

config.StopWords = append(config.StopWords, s)

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

if input.RepeatPenalty != 0 {

|

||||

config.RepeatPenalty = input.RepeatPenalty

|

||||

}

|

||||

|

||||

if input.Keep != 0 {

|

||||

config.Keep = input.Keep

|

||||

}

|

||||

|

||||

if input.Batch != 0 {

|

||||

config.Batch = input.Batch

|

||||

}

|

||||

|

||||

if input.F16 {

|

||||

config.F16 = input.F16

|

||||

}

|

||||

|

||||

if input.IgnoreEOS {

|

||||

config.IgnoreEOS = input.IgnoreEOS

|

||||

}

|

||||

|

||||

if input.Seed != 0 {

|

||||

config.Seed = input.Seed

|

||||

}

|

||||

|

||||

if input.Mirostat != 0 {

|

||||

config.Mirostat = input.Mirostat

|

||||

}

|

||||

|

||||

if input.MirostatETA != 0 {

|

||||

config.MirostatETA = input.MirostatETA

|

||||

}

|

||||

|

||||

if input.MirostatTAU != 0 {

|

||||

config.MirostatTAU = input.MirostatTAU

|

||||

}

|

||||

|

||||

switch inputs := input.Input.(type) {

|

||||

case string:

|

||||

if inputs != "" {

|

||||

config.InputStrings = append(config.InputStrings, inputs)

|

||||

}

|

||||

case []interface{}:

|

||||

for _, pp := range inputs {

|

||||

if s, ok := pp.(string); ok {

|

||||

config.InputStrings = append(config.InputStrings, s)

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

switch p := input.Prompt.(type) {

|

||||

case string:

|

||||

config.PromptStrings = append(config.PromptStrings, p)

|

||||

case []interface{}:

|

||||

for _, pp := range p {

|

||||

if s, ok := pp.(string); ok {

|

||||

config.PromptStrings = append(config.PromptStrings, s)

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||
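`updateConfig` above accepts `stop`, `input` and `prompt` as either a single JSON string or an array, which is why those fields are typed `interface{}`. A minimal, self-contained sketch of that normalization follows; `toStrings` is a hypothetical helper name, and it follows the `stop`/`input` branches in dropping an empty single string (the `prompt` branch in the diff does not).

```go
package main

import (
	"encoding/json"
	"fmt"
)

// toStrings flattens a decoded JSON value that may be a single string or an
// array into a string slice. An empty single string and any non-string array
// elements are dropped; every other type yields an empty result.
func toStrings(v interface{}) []string {
	var out []string
	switch t := v.(type) {
	case string:
		if t != "" {
			out = append(out, t)
		}
	case []interface{}:
		for _, e := range t {
			if s, ok := e.(string); ok {
				out = append(out, s)
			}
		}
	}
	return out
}

func main() {
	bodies := []string{`{"input":"hello"}`, `{"input":["a","b",3]}`, `{}`}
	for _, body := range bodies {
		var req struct {
			Input interface{} `json:"input"`
		}
		if err := json.Unmarshal([]byte(body), &req); err != nil {
			panic(err)
		}
		fmt.Printf("%q\n", toStrings(req.Input))
	}
}
```

`encoding/json` decodes arrays into `[]interface{}` when the target is `interface{}`, which is the case the second branch of the type switch handles.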

func readConfig(cm ConfigMerger, c *fiber.Ctx, loader *model.ModelLoader, debug bool, threads, ctx int, f16 bool) (*Config, *OpenAIRequest, error) {

|

||||

input := new(OpenAIRequest)

|

||||

// Get input data from the request body

|

||||

if err := c.BodyParser(input); err != nil {

|

||||

return nil, nil, err

|

||||

}

|

||||

|

||||

modelFile := input.Model

|

||||

|

||||

if c.Params("model") != "" {

|

||||

modelFile = c.Params("model")

|

||||

}

|

||||

|

||||

received, _ := json.Marshal(input)

|

||||

|

||||

log.Debug().Msgf("Request received: %s", string(received))

|

||||

|

||||

// Set model from bearer token, if available

|

||||

bearer := strings.TrimLeft(c.Get("authorization"), "Bearer ")

|

||||

bearerExists := bearer != "" && loader.ExistsInModelPath(bearer)

|

||||

|

||||

// If no model was specified, take the first available

|

||||

if modelFile == "" && !bearerExists {

|

||||

models, _ := loader.ListModels()

|

||||

if len(models) > 0 {

|

||||

modelFile = models[0]

|

||||

log.Debug().Msgf("No model specified, using: %s", modelFile)

|

||||

} else {

|

||||

log.Debug().Msgf("No model specified, returning error")

|

||||

return nil, nil, fmt.Errorf("no model specified")

|

||||

}

|

||||

}

|

||||

|

||||

// If a model is found in bearer token takes precedence

|

||||

if bearerExists {

|

||||

log.Debug().Msgf("Using model from bearer token: %s", bearer)

|

||||

modelFile = bearer

|

||||

}

|

||||

|

||||

// Load a config file if present after the model name

|

||||

modelConfig := filepath.Join(loader.ModelPath, modelFile+".yaml")

|

||||

if _, err := os.Stat(modelConfig); err == nil {

|

||||

if err := cm.LoadConfig(modelConfig); err != nil {

|

||||

return nil, nil, fmt.Errorf("failed loading model config (%s) %s", modelConfig, err.Error())

|

||||

}

|

||||

}

|

||||

|

||||

var config *Config

|

||||

cfg, exists := cm[modelFile]

|

||||

if !exists {

|

||||

config = &Config{

|

||||

OpenAIRequest: defaultRequest(modelFile),

|

||||

ContextSize: ctx,

|

||||

Threads: threads,

|

||||

F16: f16,

|

||||

Debug: debug,

|

||||

}

|

||||

} else {

|

||||

config = &cfg

|

||||

}

|

||||

|

||||

// Set the parameters for the language model prediction

|

||||

updateConfig(config, input)

|

||||

|

||||

// Don't allow 0 as setting

|

||||

if config.Threads == 0 {

|

||||

if threads != 0 {

|

||||

config.Threads = threads

|

||||

} else {

|

||||

config.Threads = 4

|

||||

}

|

||||

}

|

||||

|

||||

return config, input, nil

|

||||

}

|

||||

|

||||

279

api/openai.go

@@ -5,8 +5,6 @@ import (

|

||||

"bytes"

|

||||

"encoding/json"

|

||||

"fmt"

|

||||

"os"

|

||||

"path/filepath"

|

||||

"strings"

|

||||

|

||||

model "github.com/go-skynet/LocalAI/pkg/model"

|

||||

@@ -33,13 +31,21 @@ type OpenAIUsage struct {

|

||||

TotalTokens int `json:"total_tokens"`

|

||||

}

|

||||

|

||||

type Item struct {

|

||||

Embedding []float32 `json:"embedding"`

|

||||

Index int `json:"index"`

|

||||

Object string `json:"object,omitempty"`

|

||||

}

|

||||

|

||||

type OpenAIResponse struct {

|

||||

Created int `json:"created,omitempty"`

|

||||

Object string `json:"object,omitempty"`

|

||||

ID string `json:"id,omitempty"`

|

||||

Model string `json:"model,omitempty"`

|

||||

Choices []Choice `json:"choices,omitempty"`

|

||||

Usage OpenAIUsage `json:"usage"`

|

||||

Created int `json:"created,omitempty"`

|

||||

Object string `json:"object,omitempty"`

|

||||

ID string `json:"id,omitempty"`

|

||||

Model string `json:"model,omitempty"`

|

||||

Choices []Choice `json:"choices,omitempty"`

|

||||

Data []Item `json:"data,omitempty"`

|

||||

|

||||

Usage OpenAIUsage `json:"usage"`

|

||||

}

|

||||

|

||||

type Choice struct {

|

||||

@@ -67,8 +73,8 @@ type OpenAIRequest struct {

|

||||

Prompt interface{} `json:"prompt" yaml:"prompt"`

|

||||

|

||||

// Edit endpoint

|

||||

Instruction string `json:"instruction" yaml:"instruction"`

|

||||

Input string `json:"input" yaml:"input"`

|

||||

Instruction string `json:"instruction" yaml:"instruction"`

|

||||

Input interface{} `json:"input" yaml:"input"`

|

||||

|

||||

Stop interface{} `json:"stop" yaml:"stop"`

|

||||

|

||||

@@ -92,6 +98,10 @@ type OpenAIRequest struct {

|

||||

RepeatPenalty float64 `json:"repeat_penalty" yaml:"repeat_penalty"`

|

||||

Keep int `json:"n_keep" yaml:"n_keep"`

|

||||

|

||||

MirostatETA float64 `json:"mirostat_eta" yaml:"mirostat_eta"`

|

||||

MirostatTAU float64 `json:"mirostat_tau" yaml:"mirostat_tau"`

|

||||

Mirostat int `json:"mirostat" yaml:"mirostat"`

|

||||

|

||||

Seed int `json:"seed" yaml:"seed"`

|

||||

}

|

||||

|

||||

@@ -105,135 +115,6 @@ func defaultRequest(modelFile string) OpenAIRequest {

|

||||

}

|

||||

}

|

||||

|

||||

func updateConfig(config *Config, input *OpenAIRequest) {

|

||||

if input.Echo {

|

||||

config.Echo = input.Echo

|

||||

}

|

||||

if input.TopK != 0 {

|

||||

config.TopK = input.TopK

|

||||

}

|

||||

if input.TopP != 0 {

|

||||

config.TopP = input.TopP

|

||||

}

|

||||

|

||||

if input.Temperature != 0 {

|

||||

config.Temperature = input.Temperature

|

||||

}

|

||||

|

||||

if input.Maxtokens != 0 {

|

||||

config.Maxtokens = input.Maxtokens

|

||||

}

|

||||

|

||||

switch stop := input.Stop.(type) {

|

||||

case string:

|

||||

if stop != "" {

|

||||

config.StopWords = append(config.StopWords, stop)

|

||||

}

|

||||

case []interface{}:

|

||||

for _, pp := range stop {

|

||||

if s, ok := pp.(string); ok {

|

||||

config.StopWords = append(config.StopWords, s)

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

if input.RepeatPenalty != 0 {

|

||||

config.RepeatPenalty = input.RepeatPenalty

|

||||

}

|

||||

|

||||

if input.Keep != 0 {

|

||||

config.Keep = input.Keep

|

||||

}

|

||||

|

||||

if input.Batch != 0 {

|

||||

config.Batch = input.Batch

|

||||

}

|

||||

|

||||

if input.F16 {

|

||||

config.F16 = input.F16

|

||||

}

|

||||

|

||||

if input.IgnoreEOS {

|

||||

config.IgnoreEOS = input.IgnoreEOS

|

||||

}

|

||||

|

||||

if input.Seed != 0 {

|

||||

config.Seed = input.Seed

|

||||

}

|

||||

}

|

||||

|

||||

func readConfig(cm ConfigMerger, c *fiber.Ctx, loader *model.ModelLoader, debug bool, threads, ctx int, f16 bool) (*Config, *OpenAIRequest, error) {

|

||||

input := new(OpenAIRequest)

|

||||

// Get input data from the request body

|

||||

if err := c.BodyParser(input); err != nil {

|

||||

return nil, nil, err

|

||||

}

|

||||

|

||||

modelFile := input.Model

|

||||

received, _ := json.Marshal(input)

|

||||

|

||||

log.Debug().Msgf("Request received: %s", string(received))

|

||||

|

||||

// Set model from bearer token, if available

|

||||

bearer := strings.TrimLeft(c.Get("authorization"), "Bearer ")

|

||||

bearerExists := bearer != "" && loader.ExistsInModelPath(bearer)

|

||||

|

||||

// If no model was specified, take the first available

|

||||

if modelFile == "" && !bearerExists {

|

||||

models, _ := loader.ListModels()

|

||||

if len(models) > 0 {

|

||||

modelFile = models[0]

|

||||

log.Debug().Msgf("No model specified, using: %s", modelFile)

|

||||

} else {

|

||||

log.Debug().Msgf("No model specified, returning error")

|

||||

return nil, nil, fmt.Errorf("no model specified")

|

||||

}

|

||||

}

|

||||

|

||||

// If a model is found in bearer token takes precedence

|

||||

if bearerExists {

|

||||

log.Debug().Msgf("Using model from bearer token: %s", bearer)

|

||||

modelFile = bearer

|

||||

}

|

||||

|

||||

// Load a config file if present after the model name

|

||||

modelConfig := filepath.Join(loader.ModelPath, modelFile+".yaml")

|

||||

if _, err := os.Stat(modelConfig); err == nil {

|

||||

if err := cm.LoadConfig(modelConfig); err != nil {

|

||||

return nil, nil, fmt.Errorf("failed loading model config (%s) %s", modelConfig, err.Error())

|

||||

}

|

||||

}

|

||||

|

||||

var config *Config

|

||||

cfg, exists := cm[modelFile]

|

||||

if !exists {

|

||||

config = &Config{

|

||||

OpenAIRequest: defaultRequest(modelFile),

|

||||

}

|

||||

} else {

|

||||

config = &cfg

|

||||

}

|

||||

|

||||

// Set the parameters for the language model prediction

|

||||

updateConfig(config, input)

|

||||

|

||||

if threads != 0 {

|

||||

config.Threads = threads

|

||||

}

|

||||

if ctx != 0 {

|

||||

config.ContextSize = ctx

|

||||

}

|

||||

if f16 {

|

||||

config.F16 = true

|

||||

}

|

||||

|

||||

if debug {

|

||||

config.Debug = true

|

||||

}

|

||||

|

||||

return config, input, nil

|

||||

}

|

||||

|

||||

// https://platform.openai.com/docs/api-reference/completions

|

||||

func completionEndpoint(cm ConfigMerger, debug bool, loader *model.ModelLoader, threads, ctx int, f16 bool) func(c *fiber.Ctx) error {

|

||||

return func(c *fiber.Ctx) error {

|

||||

@@ -244,19 +125,6 @@ func completionEndpoint(cm ConfigMerger, debug bool, loader *model.ModelLoader,

|

||||

|

||||

log.Debug().Msgf("Parameter Config: %+v", config)

|

||||

|

||||

predInput := []string{}

|

||||

|

||||

switch p := input.Prompt.(type) {

|

||||

case string:

|

||||

predInput = append(predInput, p)

|

||||

case []interface{}:

|

||||

for _, pp := range p {

|

||||

if s, ok := pp.(string); ok {

|

||||

predInput = append(predInput, s)

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

templateFile := config.Model

|

||||

|

||||

if config.TemplateConfig.Completion != "" {

|

||||

@@ -264,7 +132,7 @@ func completionEndpoint(cm ConfigMerger, debug bool, loader *model.ModelLoader,

|

||||

}

|

||||

|

||||

var result []Choice

|

||||

for _, i := range predInput {

|

||||

for _, i := range config.PromptStrings {

|

||||

// A model can have a "file.bin.tmpl" file associated with a prompt template prefix

|

||||

templatedInput, err := loader.TemplatePrefix(templateFile, struct {

|

||||

Input string

|

||||

@@ -298,7 +166,62 @@ func completionEndpoint(cm ConfigMerger, debug bool, loader *model.ModelLoader,

|

||||

}

|

||||

}

|

||||

|

||||

// https://platform.openai.com/docs/api-reference/completions

|

||||

func embeddingsEndpoint(cm ConfigMerger, debug bool, loader *model.ModelLoader, threads, ctx int, f16 bool) func(c *fiber.Ctx) error {

|

||||

return func(c *fiber.Ctx) error {

|

||||

config, input, err := readConfig(cm, c, loader, debug, threads, ctx, f16)

|

||||

if err != nil {

|

||||

return fmt.Errorf("failed reading parameters from request:%w", err)

|

||||

}

|

||||

|

||||

log.Debug().Msgf("Parameter Config: %+v", config)

|

||||

items := []Item{}

|

||||

|

||||

for i, s := range config.InputStrings {

|

||||

|

||||

// get the model function to call for the result

|

||||

embedFn, err := ModelEmbedding(s, loader, *config)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

embeddings, err := embedFn()

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

items = append(items, Item{Embedding: embeddings, Index: i, Object: "embedding"})

|

||||

}

|

||||

|

||||

resp := &OpenAIResponse{

|

||||

Model: input.Model, // we have to return what the user sent here, due to OpenAI spec.

|

||||

Data: items,

|

||||

Object: "list",

|

||||

}

|

||||

|

||||

jsonResult, _ := json.Marshal(resp)

|

||||

log.Debug().Msgf("Response: %s", jsonResult)

|

||||

|

||||

// Return the prediction in the response body

|

||||

return c.JSON(resp)

|

||||

}

|

||||

}

|

||||

|

||||
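The embeddings handler above returns one `Item` per input string inside a response whose `object` is `"list"`. The sketch below reproduces only that envelope so the resulting JSON can be inspected; `EmbeddingsResponse` and `buildResponse` are trimmed, hypothetical stand-ins for `OpenAIResponse` and the handler body, and the vectors are made-up numbers.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Item mirrors the struct added in this diff.
type Item struct {
	Embedding []float32 `json:"embedding"`
	Index     int       `json:"index"`
	Object    string    `json:"object,omitempty"`
}

// EmbeddingsResponse keeps only the fields the embeddings handler fills in;
// the trimmed type is an assumption made for brevity.
type EmbeddingsResponse struct {
	Object string `json:"object,omitempty"`
	Model  string `json:"model,omitempty"`
	Data   []Item `json:"data,omitempty"`
}

// buildResponse wraps one vector per input in the list envelope.
func buildResponse(model string, vectors [][]float32) EmbeddingsResponse {
	items := make([]Item, 0, len(vectors))
	for i, v := range vectors {
		items = append(items, Item{Embedding: v, Index: i, Object: "embedding"})
	}
	return EmbeddingsResponse{Object: "list", Model: model, Data: items}
}

func main() {
	resp := buildResponse("my-model", [][]float32{{0.5, 0.25}, {1, 2}})
	out, err := json.Marshal(resp)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```

The `index` field lets a client match each vector back to the position of its input when several strings are sent in one request.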

func chatEndpoint(cm ConfigMerger, debug bool, loader *model.ModelLoader, threads, ctx int, f16 bool) func(c *fiber.Ctx) error {

|

||||

|

||||

process := func(s string, req *OpenAIRequest, config *Config, loader *model.ModelLoader, responses chan OpenAIResponse) {

|

||||

ComputeChoices(s, req, config, loader, func(s string, c *[]Choice) {}, func(s string) bool {

|

||||

resp := OpenAIResponse{

|

||||

Model: req.Model, // we have to return what the user sent here, due to OpenAI spec.

|

||||

Choices: []Choice{{Delta: &Message{Role: "assistant", Content: s}}},

|

||||

Object: "chat.completion.chunk",

|

||||

}

|

||||

log.Debug().Msgf("Sending goroutine: %s", s)

|

||||

|

||||

responses <- resp

|

||||

return true

|

||||

})

|

||||

close(responses)

|

||||

}

|

||||

return func(c *fiber.Ctx) error {

|

||||

config, input, err := readConfig(cm, c, loader, debug, threads, ctx, f16)

|

||||

if err != nil {

|

||||

@@ -350,19 +273,7 @@ func chatEndpoint(cm ConfigMerger, debug bool, loader *model.ModelLoader, thread

|

||||

if input.Stream {

|

||||

responses := make(chan OpenAIResponse)

|

||||

|

||||

go func() {

|

||||

ComputeChoices(predInput, input, config, loader, func(s string, c *[]Choice) {}, func(s string) bool {

|

||||

resp := OpenAIResponse{

|

||||

Model: input.Model, // we have to return what the user sent here, due to OpenAI spec.

|

||||

Choices: []Choice{{Delta: &Message{Role: "assistant", Content: s}}},

|

||||

Object: "chat.completion.chunk",

|

||||

}

|

||||

|

||||

responses <- resp

|

||||

return true

|

||||

})

|

||||

close(responses)

|

||||

}()

|

||||

go process(predInput, input, config, loader, responses)

|

||||

|

||||

c.Context().SetBodyStreamWriter(fasthttp.StreamWriter(func(w *bufio.Writer) {

|

||||

|

||||

@@ -402,6 +313,8 @@ func chatEndpoint(cm ConfigMerger, debug bool, loader *model.ModelLoader, thread

|

||||

Choices: result,

|

||||

Object: "chat.completion",

|

||||

}

|

||||

respData, _ := json.Marshal(resp)

|

||||

log.Debug().Msgf("Response: %s", respData)

|

||||

|

||||

// Return the prediction in the response body

|

||||

return c.JSON(resp)

|

||||
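The streaming path in `chatEndpoint` moves its producer into the `process` helper: a goroutine pushes one response per token onto a channel and closes it when prediction ends, while the stream writer drains the channel. A minimal sketch of that producer/consumer shape follows; `Chunk`, `produce` and `collect` are hypothetical names, and a fixed token list stands in for the model callback.

```go
package main

import "fmt"

// Chunk stands in for the streamed response type; the name is hypothetical.
type Chunk struct{ Content string }

// produce sends one Chunk per token and closes the channel when done, so the
// consumer's range loop terminates. Closing is the producer's job.
func produce(tokens []string, out chan<- Chunk) {
	defer close(out)
	for _, t := range tokens {
		out <- Chunk{Content: t}
	}
}

// collect starts the producer in a goroutine and drains the channel the way
// a stream writer would, returning the chunks in arrival order.
func collect(tokens []string) []string {
	ch := make(chan Chunk)
	go produce(tokens, ch)

	var got []string
	for c := range ch {
		got = append(got, c.Content)
	}
	return got
}

func main() {
	fmt.Println(collect([]string{"Hel", "lo", "!"}))
}
```

Because the channel is unbuffered, the producer blocks until the consumer has taken each chunk, which keeps generation and writing in step.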

@@ -417,28 +330,32 @@ func editEndpoint(cm ConfigMerger, debug bool, loader *model.ModelLoader, thread

|

||||

|

||||

log.Debug().Msgf("Parameter Config: %+v", config)

|

||||

|

||||

predInput := input.Input

|

||||

templateFile := config.Model

|

||||

|

||||

if config.TemplateConfig.Edit != "" {

|

||||

templateFile = config.TemplateConfig.Edit

|

||||

}

|

||||

|

||||

// A model can have a "file.bin.tmpl" file associated with a prompt template prefix

|

||||

templatedInput, err := loader.TemplatePrefix(templateFile, struct {

|

||||

Input string

|

||||

Instruction string

|

||||

}{Input: predInput, Instruction: input.Instruction})

|

||||

if err == nil {

|

||||

predInput = templatedInput

|

||||

log.Debug().Msgf("Template found, input modified to: %s", predInput)

|

||||

}

|

||||

var result []Choice

|

||||

for _, i := range config.InputStrings {

|

||||

// A model can have a "file.bin.tmpl" file associated with a prompt template prefix

|

||||

templatedInput, err := loader.TemplatePrefix(templateFile, struct {

|

||||

Input string

|

||||

Instruction string

|

||||

}{Input: i})

|

||||

if err == nil {

|

||||

i = templatedInput

|

||||

log.Debug().Msgf("Template found, input modified to: %s", i)

|

||||

}

|

||||

|

||||

result, err := ComputeChoices(predInput, input, config, loader, func(s string, c *[]Choice) {

|

||||

*c = append(*c, Choice{Text: s})

|

||||

}, nil)

|

||||

if err != nil {

|

||||

return err

|

||||

r, err := ComputeChoices(i, input, config, loader, func(s string, c *[]Choice) {

|

||||

*c = append(*c, Choice{Text: s})

|

||||

}, nil)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

result = append(result, r...)

|

||||

}

|

||||

|

||||

resp := &OpenAIResponse{

|

||||

|

||||

@@ -11,98 +11,13 @@ import (

|

||||

gpt2 "github.com/go-skynet/go-gpt2.cpp"

|

||||

gptj "github.com/go-skynet/go-gpt4all-j.cpp"

|

||||

llama "github.com/go-skynet/go-llama.cpp"

|

||||

"github.com/hashicorp/go-multierror"

|

||||

)

|

||||

|

||||

const tokenizerSuffix = ".tokenizer.json"

|

||||

|

||||

// mutex still needed, see: https://github.com/ggerganov/llama.cpp/discussions/784

|

||||

var mutexMap sync.Mutex

|

||||

var mutexes map[string]*sync.Mutex = make(map[string]*sync.Mutex)

|

||||

|

||||

var loadedModels map[string]interface{} = map[string]interface{}{}

|

||||

var muModels sync.Mutex

|

||||

|

||||

func backendLoader(backendString string, loader *model.ModelLoader, modelFile string, llamaOpts []llama.ModelOption, threads uint32) (model interface{}, err error) {

|

||||

switch strings.ToLower(backendString) {

|

||||

case "llama":

|

||||

return loader.LoadLLaMAModel(modelFile, llamaOpts...)

|

||||

case "stablelm":

|

||||

return loader.LoadStableLMModel(modelFile)

|

||||

case "gpt2":

|

||||

return loader.LoadGPT2Model(modelFile)

|

||||

case "gptj":

|

||||

return loader.LoadGPTJModel(modelFile)

|

||||

case "rwkv":

|

||||

return loader.LoadRWKV(modelFile, modelFile+tokenizerSuffix, threads)

|

||||

default:

|

||||

return nil, fmt.Errorf("backend unsupported: %s", backendString)

|

||||

}

|

||||

}

|

||||

|

||||

func greedyLoader(loader *model.ModelLoader, modelFile string, llamaOpts []llama.ModelOption, threads uint32) (model interface{}, err error) {

|

||||

updateModels := func(model interface{}) {

|

||||

muModels.Lock()

|

||||

defer muModels.Unlock()

|

||||

loadedModels[modelFile] = model

|

||||

}

|

||||

|

||||

muModels.Lock()

|

||||

m, exists := loadedModels[modelFile]

|

||||

if exists {

|

||||

muModels.Unlock()

|

||||

return m, nil

|

||||

}

|

||||

muModels.Unlock()

|

||||

|

||||

model, modelerr := loader.LoadLLaMAModel(modelFile, llamaOpts...)

|

||||

if modelerr == nil {

|

||||

updateModels(model)

|

||||

return model, nil

|

||||

} else {

|

||||

err = multierror.Append(err, modelerr)

|

||||

}

|

||||

|

||||

model, modelerr = loader.LoadGPTJModel(modelFile)

|

||||

if modelerr == nil {

|

||||

updateModels(model)

|

||||

return model, nil

|

||||

} else {

|

||||

err = multierror.Append(err, modelerr)

|

||||

}

|

||||

|

||||

model, modelerr = loader.LoadGPT2Model(modelFile)

|

||||

if modelerr == nil {

|

||||

updateModels(model)

|

||||

return model, nil

|

||||

} else {

|

||||

err = multierror.Append(err, modelerr)

|

||||

}

|

||||

|

||||

model, modelerr = loader.LoadStableLMModel(modelFile)

|

||||

if modelerr == nil {

|

||||

updateModels(model)

|

||||

return model, nil

|

||||

} else {

|

||||

err = multierror.Append(err, modelerr)

|

||||

}

|

||||

|

||||

model, modelerr = loader.LoadRWKV(modelFile, modelFile+tokenizerSuffix, threads)

|

||||

if modelerr == nil {

|

||||

updateModels(model)

|

||||

return model, nil

|

||||

} else {

|

||||

err = multierror.Append(err, modelerr)

|

||||

}

|

||||

|

||||

return nil, fmt.Errorf("could not load model - all backends returned error: %s", err.Error())

|

||||

}

|

||||

|

||||

func ModelInference(s string, loader *model.ModelLoader, c Config, tokenCallback func(string) bool) (func() (string, error), error) {

|

||||

supportStreams := false

|

||||

modelFile := c.Model

|

||||

|

||||

// Try to load the model

|

||||

func defaultLLamaOpts(c Config) []llama.ModelOption {

|

||||

llamaOpts := []llama.ModelOption{}

|

||||

if c.ContextSize != 0 {

|

||||

llamaOpts = append(llamaOpts, llama.SetContext(c.ContextSize))

|

||||

@@ -110,13 +25,142 @@ func ModelInference(s string, loader *model.ModelLoader, c Config, tokenCallback

|

||||

if c.F16 {

|

||||

llamaOpts = append(llamaOpts, llama.EnableF16Memory)

|

||||

}

|

||||

if c.Embeddings {

|

||||

llamaOpts = append(llamaOpts, llama.EnableEmbeddings)

|

||||

}

|

||||

|

||||

return llamaOpts

|

||||

}

|

||||

|

||||

func ModelEmbedding(s string, loader *model.ModelLoader, c Config) (func() ([]float32, error), error) {

|

||||

if !c.Embeddings {

|

||||

return nil, fmt.Errorf("endpoint disabled for this model by API configuration")

|

||||

}

|

||||

|

||||

modelFile := c.Model

|

||||

|

||||

llamaOpts := defaultLLamaOpts(c)

|

||||

|

||||

var inferenceModel interface{}

|

||||

var err error

|

||||

if c.Backend == "" {

|

||||

inferenceModel, err = greedyLoader(loader, modelFile, llamaOpts, uint32(c.Threads))

|

||||

inferenceModel, err = loader.GreedyLoader(modelFile, llamaOpts, uint32(c.Threads))

|

||||

} else {

|

||||

inferenceModel, err = backendLoader(c.Backend, loader, modelFile, llamaOpts, uint32(c.Threads))

|

||||

inferenceModel, err = loader.BackendLoader(c.Backend, modelFile, llamaOpts, uint32(c.Threads))

|

||||

}

|

||||

if err != nil {

|

||||

return nil, err

|

||||

}

|

||||

|

||||

var fn func() ([]float32, error)

|

||||

switch model := inferenceModel.(type) {

|

||||

case *llama.LLama:

|

||||

fn = func() ([]float32, error) {

|

||||

predictOptions := buildLLamaPredictOptions(c)

|

||||

return model.Embeddings(s, predictOptions...)

|

||||

}

|

||||

default:

|

||||

fn = func() ([]float32, error) {

|

||||

return nil, fmt.Errorf("embeddings not supported by the backend")

|

||||

}

|

||||

}

|

||||

|

||||

return func() ([]float32, error) {

|

||||

// This is still needed, see: https://github.com/ggerganov/llama.cpp/discussions/784

|

||||

mutexMap.Lock()

|

||||

l, ok := mutexes[modelFile]

|

||||

if !ok {

|

||||

m := &sync.Mutex{}

|

||||

mutexes[modelFile] = m

|

||||

l = m

|

||||

}

|

||||

mutexMap.Unlock()

|

||||

l.Lock()

|

||||

defer l.Unlock()

|

||||

|

||||

embeds, err := fn()

|

||||

if err != nil {

|

||||

return embeds, err

|

||||

}

|

||||

// Remove trailing 0s

|

||||

for i := len(embeds) - 1; i >= 0; i-- {

|

||||

if embeds[i] == 0.0 {

|

||||

embeds = embeds[:i]

|

||||

} else {

|

||||

break

|

||||

}

|

||||

}

|

||||

return embeds, nil

|

||||

}, nil

|

||||

}
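The closure returned by `ModelEmbedding` serializes calls per model file: a global mutex guards a map of per-model mutexes, and the per-model mutex is held while the backend runs. A minimal, self-contained sketch of that per-key locking pattern could look like the following. The `keyedLocks` type and its methods are hypothetical names used only for illustration.

```go
package main

import (
	"fmt"
	"sync"
)

// keyedLocks hands out one mutex per key (for example, per model file).
type keyedLocks struct {
	mu    sync.Mutex             // guards the map itself
	locks map[string]*sync.Mutex // one lock per key
}

func newKeyedLocks() *keyedLocks {
	return &keyedLocks{locks: make(map[string]*sync.Mutex)}
}

// get returns the mutex for key, creating it on first use.
func (k *keyedLocks) get(key string) *sync.Mutex {
	k.mu.Lock()
	defer k.mu.Unlock()
	l, ok := k.locks[key]
	if !ok {
		l = &sync.Mutex{}
		k.locks[key] = l
	}
	return l
}

func main() {
	locks := newKeyedLocks()
	var wg sync.WaitGroup
	counter := 0
	for i := 0; i < 100; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			l := locks.get("model.bin")
			l.Lock()
			defer l.Unlock()
			counter++ // access is serialized per model file
		}()
	}
	wg.Wait()
	fmt.Println(counter)
}
```

The map lock is held only long enough to look up or create the per-key mutex, so a long-running call on one model does not block lookups for other models.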

|

||||

|

||||

func buildLLamaPredictOptions(c Config) []llama.PredictOption {

|

||||

// Generate the prediction using the language model

|

||||

predictOptions := []llama.PredictOption{

|

||||

llama.SetTemperature(c.Temperature),

|

||||

llama.SetTopP(c.TopP),

|

||||

llama.SetTopK(c.TopK),

|

||||

llama.SetTokens(c.Maxtokens),

|

||||

llama.SetThreads(c.Threads),

|

||||

}

|

||||

|

||||

if c.Mirostat != 0 {

|

||||

predictOptions = append(predictOptions, llama.SetMirostat(c.Mirostat))

|

||||

}

|

||||

|

||||

if c.MirostatETA != 0 {

|

||||

predictOptions = append(predictOptions, llama.SetMirostatETA(c.MirostatETA))

|

||||

}

|

||||

|

||||

if c.MirostatTAU != 0 {

|

||||

predictOptions = append(predictOptions, llama.SetMirostatTAU(c.MirostatTAU))

|

||||

}

|

||||

|

||||

if c.Debug {

|

||||

predictOptions = append(predictOptions, llama.Debug)

|

||||

}

|

||||

|

||||

predictOptions = append(predictOptions, llama.SetStopWords(c.StopWords...))

|

||||

|

||||

if c.RepeatPenalty != 0 {

|

||||

predictOptions = append(predictOptions, llama.SetPenalty(c.RepeatPenalty))

|

||||

}

|

||||

|

||||

if c.Keep != 0 {

|

||||

predictOptions = append(predictOptions, llama.SetNKeep(c.Keep))

|

||||

}

|

||||

|

||||

if c.Batch != 0 {

|

||||

predictOptions = append(predictOptions, llama.SetBatch(c.Batch))

|

||||

}

|

||||

|

||||

if c.F16 {

|

||||

predictOptions = append(predictOptions, llama.EnableF16KV)

|

||||

}

|

||||

|

||||

if c.IgnoreEOS {

|

||||

predictOptions = append(predictOptions, llama.IgnoreEOS)

|

||||

}

|

||||

|

||||

if c.Seed != 0 {

|

||||

predictOptions = append(predictOptions, llama.SetSeed(c.Seed))

|

||||

}

|

||||

|

||||

return predictOptions

|

||||

}
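`buildLLamaPredictOptions` follows the functional-options pattern: a few options are always passed, and the rest are appended only when the corresponding config field is non-zero. The sketch below illustrates the idea with hypothetical types (`settings`, `option`, `config`) and made-up default values. It does not reproduce the real `go-llama.cpp` API.

```go
package main

import "fmt"

// settings and option are hypothetical types that mimic the shape of an
// options API such as llama.PredictOption; they are not the real library types.
type settings struct {
	Temperature float64
	Seed        int
	Batch       int
}

type option func(*settings)

func withTemperature(t float64) option { return func(s *settings) { s.Temperature = t } }
func withSeed(seed int) option         { return func(s *settings) { s.Seed = seed } }
func withBatch(n int) option           { return func(s *settings) { s.Batch = n } }

// config is a hypothetical, trimmed-down stand-in for the API Config struct.
type config struct {
	Temperature float64
	Seed        int
	Batch       int
}

// buildOptions always passes the temperature and adds the remaining options
// only when the config value is non-zero, so unset fields keep the defaults.
func buildOptions(c config) []option {
	opts := []option{withTemperature(c.Temperature)}
	if c.Seed != 0 {
		opts = append(opts, withSeed(c.Seed))
	}
	if c.Batch != 0 {
		opts = append(opts, withBatch(c.Batch))
	}
	return opts
}

// apply starts from made-up defaults and applies each option in order.
func apply(opts ...option) settings {
	s := settings{Seed: -1, Batch: 8}
	for _, o := range opts {
		o(&s)
	}
	return s
}

func main() {
	fmt.Printf("%+v\n", apply(buildOptions(config{Temperature: 0.7, Batch: 512})...))
}
```

One consequence of the non-zero check, in this sketch as in the diff, is that a caller cannot explicitly request a value of `0` for those fields, because zero is treated as "not set".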

|

||||

|

||||

func ModelInference(s string, loader *model.ModelLoader, c Config, tokenCallback func(string) bool) (func() (string, error), error) {

|

||||

supportStreams := false

|

||||

modelFile := c.Model

|

||||

|

||||

llamaOpts := defaultLLamaOpts(c)

|

||||

|

||||

var inferenceModel interface{}

|

||||

var err error

|

||||

if c.Backend == "" {

|

||||

inferenceModel, err = loader.GreedyLoader(modelFile, llamaOpts, uint32(c.Threads))

|

||||

} else {

|

||||

inferenceModel, err = loader.BackendLoader(c.Backend, modelFile, llamaOpts, uint32(c.Threads))

|

||||

}

|

||||

if err != nil {

|

||||

return nil, err

|

||||

@@ -129,12 +173,15 @@ func ModelInference(s string, loader *model.ModelLoader, c Config, tokenCallback

|

||||

supportStreams = true

|

||||

|

||||

fn = func() (string, error) {

|

||||

//model.ProcessInput("You are a chatbot that is very good at chatting. blah blah blah")

|

||||

stopWord := "\n"

|

||||

if len(c.StopWords) > 0 {

|

||||

stopWord = c.StopWords[0]

|

||||

}

|

||||

|

||||

if err := model.ProcessInput(s); err != nil {

|

||||

return "", err

|

||||

}

|

||||

|

||||

response := model.GenerateResponse(c.Maxtokens, stopWord, float32(c.Temperature), float32(c.TopP), tokenCallback)

|

||||

|

||||

return response, nil

|

||||

@@ -219,49 +266,17 @@ func ModelInference(s string, loader *model.ModelLoader, c Config, tokenCallback

|

||||

model.SetTokenCallback(tokenCallback)

|

||||

}

|

||||

|

||||

// Generate the prediction using the language model

|

||||

predictOptions := []llama.PredictOption{

|

||||

llama.SetTemperature(c.Temperature),

|

||||

llama.SetTopP(c.TopP),

|

||||

llama.SetTopK(c.TopK),

|

||||

llama.SetTokens(c.Maxtokens),

|

||||

llama.SetThreads(c.Threads),

|

||||

}

|

||||

predictOptions := buildLLamaPredictOptions(c)

|

||||

|

||||

if c.Debug {

|

||||

predictOptions = append(predictOptions, llama.Debug)

|

||||

}

|

||||

|

||||

predictOptions = append(predictOptions, llama.SetStopWords(c.StopWords...))

|

||||

|

||||

if c.RepeatPenalty != 0 {

|

||||

predictOptions = append(predictOptions, llama.SetPenalty(c.RepeatPenalty))

|

||||

}

|

||||

|

||||

if c.Keep != 0 {

|

||||

predictOptions = append(predictOptions, llama.SetNKeep(c.Keep))

|

||||

}

|

||||

|

||||

if c.Batch != 0 {

|

||||

predictOptions = append(predictOptions, llama.SetBatch(c.Batch))

|

||||

}

|

||||

|

||||

if c.F16 {

|

||||

predictOptions = append(predictOptions, llama.EnableF16KV)

|

||||

}

|

||||

|

||||

if c.IgnoreEOS {

|

||||

predictOptions = append(predictOptions, llama.IgnoreEOS)

|

||||

}

|

||||

|

||||

if c.Seed != 0 {

|

||||

predictOptions = append(predictOptions, llama.SetSeed(c.Seed))

|

||||

}

|

||||

|

||||

return model.Predict(

|

||||

str, er := model.Predict(

|

||||

s,

|

||||

predictOptions...,

|

||||

)

|

||||

// Seems that if we don't free the callback explicitly we leave functions registered (that might try to send on closed channels)

|

||||

// For instance otherwise the API returns: {"error":{"code":500,"message":"send on closed channel","type":""}}

|

||||

// after a stream event has occurred

|

||||

model.SetTokenCallback(nil)

|

||||

return str, er

|

||||

}

|

||||

}

|

||||

|

||||

|

||||

@@ -8,6 +8,8 @@ Here is a list of projects that can easily be integrated with the LocalAI backen

|

||||

- [discord-bot](https://github.com/go-skynet/LocalAI/tree/master/examples/discord-bot/) (by [@mudler](https://github.com/mudler))

|

||||

- [langchain](https://github.com/go-skynet/LocalAI/tree/master/examples/langchain/) (by [@dave-gray101](https://github.com/dave-gray101))

|

||||

- [langchain-python](https://github.com/go-skynet/LocalAI/tree/master/examples/langchain-python/) (by [@mudler](https://github.com/mudler))

|

||||

- [localai-webui](https://github.com/go-skynet/LocalAI/tree/master/examples/localai-webui/) (by [@dhruvgera](https://github.com/dhruvgera))

|

||||

- [rwkv](https://github.com/go-skynet/LocalAI/tree/master/examples/rwkv/) (by [@mudler](https://github.com/mudler))

|

||||

- [slack-bot](https://github.com/go-skynet/LocalAI/tree/master/examples/slack-bot/) (by [@mudler](https://github.com/mudler))

|

||||

|

||||

## Want to contribute?

|

||||

|

||||

5

examples/langchain/PY.Dockerfile

Normal file


@@ -0,0 +1,5 @@

FROM python:3.10-bullseye
COPY ./langchainpy-localai-example /app
WORKDIR /app
RUN pip install --no-cache-dir -r requirements.txt
ENTRYPOINT [ "python", "./simple_demo.py" ]

@@ -1,10 +1,6 @@

|

||||

# langchain

|

||||

|

||||

Example of using langchain in TypeScript, with the standard OpenAI llm module, and LocalAI.

|

||||

|

||||

Example for python langchain to follow at a later date

|

||||

|

||||

Set up to make it easy to modify the `index.mts` file to look like any langchain example file.

|

||||

Example of using langchain, with the standard OpenAI llm module, and LocalAI. Has docker compose profiles for both the Typescript and Python versions.

|

||||

|

||||

**Please Note** - This is a tech demo example at this time. ggml-gpt4all-j has pretty terrible results for most langchain applications with the settings used in this example.

|

||||

|

||||

@@ -22,8 +18,11 @@ cd LocalAI/examples/langchain

|

||||

# Download gpt4all-j to models/

|

||||

wget https://gpt4all.io/models/ggml-gpt4all-j.bin -O models/ggml-gpt4all-j

|

||||

|

||||

# start with docker-compose

|

||||

docker-compose up --build

|

||||

# start with docker-compose for typescript!

|

||||

docker-compose --profile ts up --build

|

||||

|

||||

# or start with docker-compose for python!

|

||||

docker-compose --profile py up --build

|

||||

```

|

||||

|

||||

## Copyright

|

||||

|

||||

@@ -15,11 +15,29 @@ services:

|

||||

- ./models:/models:cached

|

||||

command: ["/usr/bin/local-ai" ]

|

||||

|

||||

langchainjs:

|

||||

js:

|

||||

build:

|

||||

context: .

|

||||

dockerfile: JS.Dockerfile

|

||||

profiles:

|

||||

- js

|

||||

- ts

|

||||

depends_on:

|

||||

- "api"

|

||||

environment:

|

||||

- 'OPENAI_API_KEY=sk-XXXXXXXXXXXXXXXXXXXX'

|

||||

- 'OPENAI_API_HOST=http://api:8080/v1'

|

||||

- 'OPENAI_API_BASE=http://api:8080/v1'

|

||||

- 'MODEL_NAME=gpt-3.5-turbo' #gpt-3.5-turbo' # ggml-gpt4all-j' # ggml-koala-13B-4bit-128g'

|

||||

|

||||

py:

|

||||

build:

|

||||

context: .

|

||||

dockerfile: PY.Dockerfile

|

||||

profiles:

|

||||

- py

|

||||

depends_on:

|

||||

- "api"

|

||||

environment:

|

||||

- 'OPENAI_API_KEY=sk-XXXXXXXXXXXXXXXXXXXX'

|

||||

- 'OPENAI_API_BASE=http://api:8080/v1'

|

||||

- 'MODEL_NAME=gpt-3.5-turbo' #gpt-3.5-turbo' # ggml-gpt4all-j' # ggml-koala-13B-4bit-128g'

|

||||

@@ -4,7 +4,7 @@ import { Document } from "langchain/document";

|

||||

import { initializeAgentExecutorWithOptions } from "langchain/agents";

|

||||

import {Calculator} from "langchain/tools/calculator";

|

||||

|

||||

const pathToLocalAi = process.env['OPENAI_API_HOST'] || 'http://api:8080/v1';

|

||||

const pathToLocalAi = process.env['OPENAI_API_BASE'] || 'http://api:8080/v1';

|

||||

const fakeApiKey = process.env['OPENAI_API_KEY'] || '-';

|

||||

const modelName = process.env['MODEL_NAME'] || 'gpt-3.5-turbo';

|

||||

|

||||

|

||||

24

examples/langchain/langchainpy-localai-example/.vscode/launch.json

vendored

Normal file


@@ -0,0 +1,24 @@

{
    "version": "0.2.0",
    "configurations": [
        {
            "name": "Python: Current File",
            "type": "python",
            "request": "launch",
            "program": "${file}",
            "console": "integratedTerminal",
            "redirectOutput": true,
            "justMyCode": false
        },
        {
            "name": "Python: Attach to Port 5678",
            "type": "python",
            "request": "attach",
            "connect": {
                "host": "localhost",
                "port": 5678
            },
            "justMyCode": false
        }
    ]
}

3

examples/langchain/langchainpy-localai-example/.vscode/settings.json

vendored

Normal file


@@ -0,0 +1,3 @@

{
    "python.defaultInterpreterPath": "${workspaceFolder}/.venv/Scripts/python"
}

39

examples/langchain/langchainpy-localai-example/full_demo.py

Normal file


@@ -0,0 +1,39 @@

import os
from langchain.chat_models import ChatOpenAI
from langchain import PromptTemplate, LLMChain
from langchain.prompts.chat import (
    ChatPromptTemplate,
    SystemMessagePromptTemplate,
    AIMessagePromptTemplate,
    HumanMessagePromptTemplate,
)
from langchain.schema import (
    AIMessage,
    HumanMessage,
    SystemMessage
)

print('Langchain + LocalAI PYTHON Tests')

base_path = os.environ.get('OPENAI_API_BASE', 'http://api:8080/v1')
key = os.environ.get('OPENAI_API_KEY', '-')
model_name = os.environ.get('MODEL_NAME', 'gpt-3.5-turbo')


chat = ChatOpenAI(temperature=0, openai_api_base=base_path, openai_api_key=key, model_name=model_name, max_tokens=100)

print("Created ChatOpenAI for ", chat.model_name)

template = "You are a helpful assistant that translates {input_language} to {output_language}."
system_message_prompt = SystemMessagePromptTemplate.from_template(template)
human_template = "{text}"
human_message_prompt = HumanMessagePromptTemplate.from_template(human_template)

chat_prompt = ChatPromptTemplate.from_messages([system_message_prompt, human_message_prompt])

print("ABOUT to execute")

# get a chat completion from the formatted messages
chat(chat_prompt.format_prompt(input_language="English", output_language="French", text="I love programming.").to_messages())

print(".");

@@ -0,0 +1,32 @@

aiohttp==3.8.4
aiosignal==1.3.1
async-timeout==4.0.2
attrs==23.1.0
certifi==2022.12.7
charset-normalizer==3.1.0
colorama==0.4.6
dataclasses-json==0.5.7
debugpy==1.6.7
frozenlist==1.3.3
greenlet==2.0.2
idna==3.4
langchain==0.0.157
marshmallow==3.19.0
marshmallow-enum==1.5.1
multidict==6.0.4
mypy-extensions==1.0.0
numexpr==2.8.4
numpy==1.24.3
openai==0.27.6
openapi-schema-pydantic==1.2.4
packaging==23.1
pydantic==1.10.7
PyYAML==6.0
requests==2.29.0
SQLAlchemy==2.0.12
tenacity==8.2.2
tqdm==4.65.0
typing-inspect==0.8.0
typing_extensions==4.5.0
urllib3==1.26.15
yarl==1.9.2

@@ -0,0 +1,6 @@

from langchain.llms import OpenAI

llm = OpenAI(temperature=0.9,model_name="gpt-3.5-turbo")
text = "What would be a good company name for a company that makes colorful socks?"
print(llm(text))

@@ -12,6 +12,7 @@ stopwords:

|

||||

roles:

|

||||

user: " "

|

||||

system: " "

|

||||

backend: "gptj"

|

||||

template:

|

||||

completion: completion

|

||||

chat: completion # gpt4all

|

||||

26

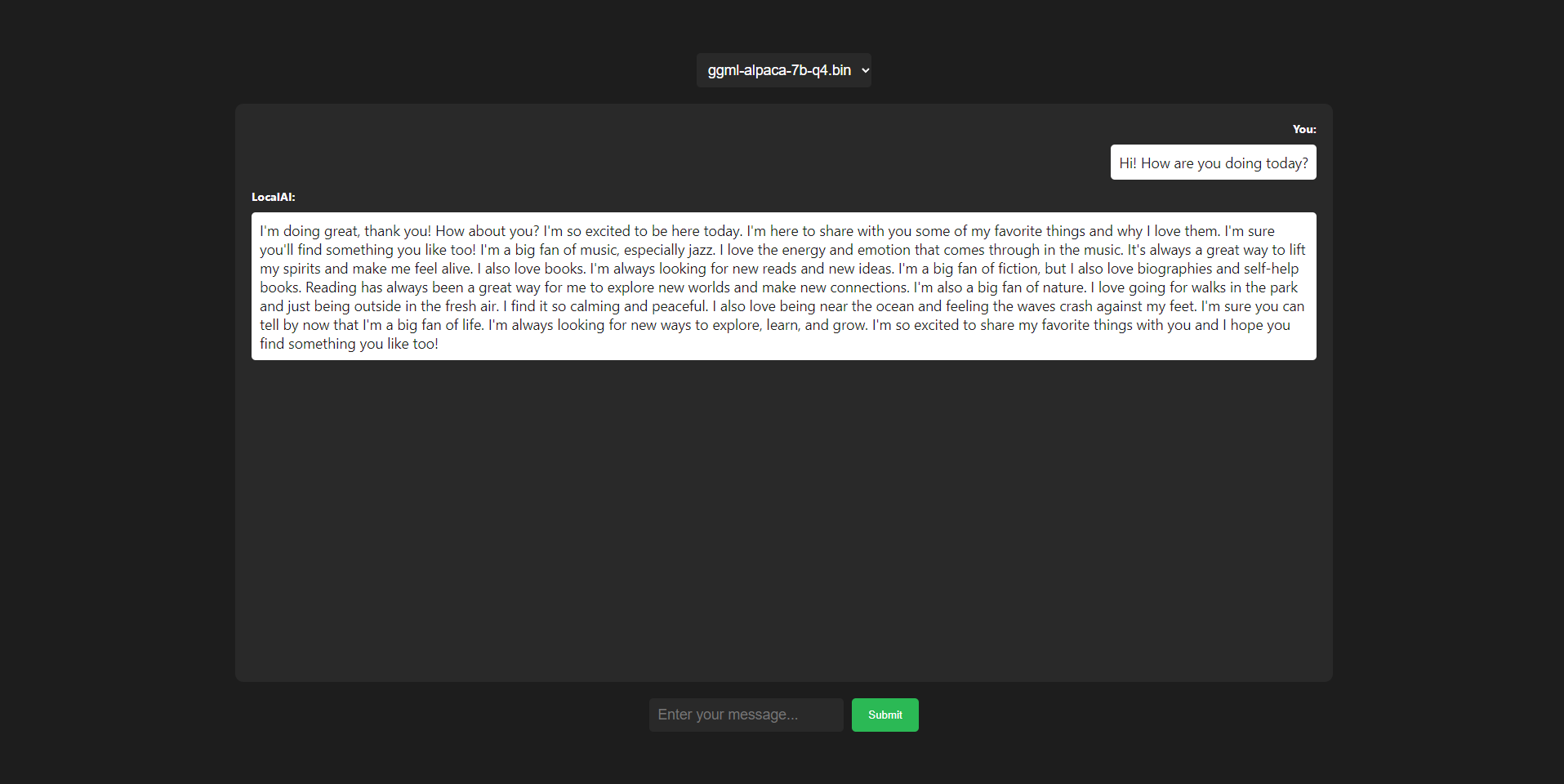

examples/localai-webui/README.md

Normal file


@@ -0,0 +1,26 @@

# localai-webui

Example of integration with [dhruvgera/localai-frontend](https://github.com/Dhruvgera/LocalAI-frontend).

## Setup

```bash
# Clone LocalAI
git clone https://github.com/go-skynet/LocalAI

cd LocalAI/examples/localai-webui

# (optional) Checkout a specific LocalAI tag
# git checkout -b build <TAG>

# Download any desired models to models/ in the parent LocalAI project dir
# For example: wget https://gpt4all.io/models/ggml-gpt4all-j.bin

# start with docker-compose
docker-compose up -d --build
```

Open http://localhost:3000 for the Web UI.

20

examples/localai-webui/docker-compose.yml

Normal file


@@ -0,0 +1,20 @@

version: '3.6'

services:
  api:
    image: quay.io/go-skynet/local-ai:latest
    build:
      context: .
      dockerfile: Dockerfile
    ports:
      - 8080:8080
    env_file:
      - .env
    volumes:
      - ./models:/models:cached
    command: ["/usr/bin/local-ai"]

  frontend:
    image: quay.io/go-skynet/localai-frontend:master
    ports:
      - 3000:3000

1

examples/query_data/.gitignore

vendored

Normal file


@@ -0,0 +1 @@

storage/

49

examples/query_data/README.md

Normal file


@@ -0,0 +1,49 @@

# Data query example

This example makes use of [Llama-Index](https://gpt-index.readthedocs.io/en/stable/getting_started/installation.html) to enable question answering on a set of documents.

It loosely follows [the quickstart](https://gpt-index.readthedocs.io/en/stable/guides/primer/usage_pattern.html).

## Requirements

For this to work, you will need a model compatible with the `llama.cpp` backend. This will not work with gpt4all.

The example uses `WizardLM`. Edit the config files in `models/` accordingly to specify the model you use (change `HERE`).

You will also need a training data set. Copy it into the `data` directory.

## Setup

Start the API:

```bash
# Clone LocalAI
git clone https://github.com/go-skynet/LocalAI

cd LocalAI/examples/query_data

# Copy your models, edit config files accordingly

# start with docker-compose
docker-compose up -d --build
```

### Create a storage:

```bash
export OPENAI_API_BASE=http://localhost:8080/v1
export OPENAI_API_KEY=sk-

python store.py
```

After it finishes, a directory "storage" will be created with the vector index database.

## Query

```bash
export OPENAI_API_BASE=http://localhost:8080/v1
export OPENAI_API_KEY=sk-

python query.py
```

0

examples/query_data/data/.keep

Normal file


15

examples/query_data/docker-compose.yml

Normal file


@@ -0,0 +1,15 @@

version: '3.6'

services:
  api:
    image: quay.io/go-skynet/local-ai:latest
    build:
      context: .
      dockerfile: Dockerfile
    ports:
      - 8080:8080
    env_file:
      - .env
    volumes:
      - ./models:/models:cached
    command: ["/usr/bin/local-ai"]

1