mirror of https://github.com/mudler/LocalAI.git, synced 2026-02-04 11:42:57 -05:00

Compare commits

5 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 714bfcd45b | | |
| | 77ce8b953e | | |
| | 01ada95941 | | |
| | eabdc5042a | | |
| | 96267d9437 | | |

2

Makefile


@@ -3,7 +3,7 @@ GOTEST=$(GOCMD) test
 GOVET=$(GOCMD) vet
 BINARY_NAME=local-ai

-GOLLAMA_VERSION?=llama.cpp-f4cef87
+GOLLAMA_VERSION?=2e6ae1269e035886fc64e268a6dda9d8c4ba8c75
 GOGPT4ALLJ_VERSION?=1f7bff57f66cb7062e40d0ac3abd2217815e5109
 GOGPT2_VERSION?=245a5bfe6708ab80dc5c733dcdbfbe3cfd2acdaa
 RWKV_REPO?=https://github.com/donomii/go-rwkv.cpp

@@ -162,8 +162,6 @@ To build locally, run `make build` (see below).

 ### Other examples

-
-
 To see other examples on how to integrate with other projects for instance chatbot-ui, see: [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/).


@@ -572,7 +570,7 @@ Not currently, as ggml doesn't support GPUs yet: https://github.com/ggerganov/ll

|

||||

### Where is the webUI?

|

||||

|

||||

<details>

|

||||

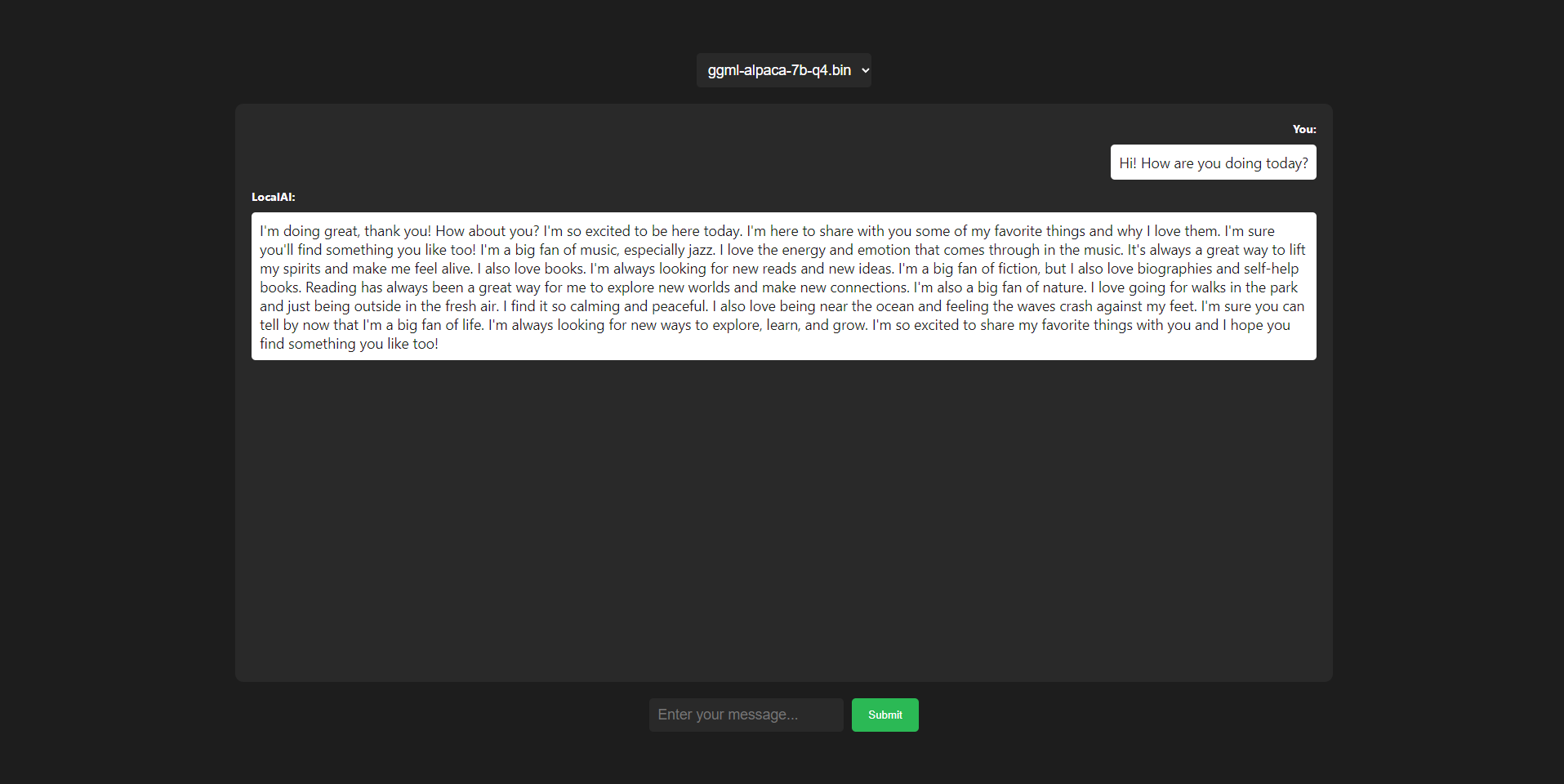

We are working on to have a good out of the box experience - however as LocalAI is an API you can already plug it into existing projects that provides are UI interfaces to OpenAI's APIs. There are several already on github, and should be compatible with LocalAI already (as it mimics the OpenAI API)

|

||||

There is the availability of localai-webui and chatbot-ui in the examples section and can be setup as per the instructions. However as LocalAI is an API you can already plug it into existing projects that provides are UI interfaces to OpenAI's APIs. There are several already on github, and should be compatible with LocalAI already (as it mimics the OpenAI API)

|

||||

|

||||

</details>

|

||||

|

||||

|

||||

@@ -299,6 +299,21 @@ func completionEndpoint(cm ConfigMerger, debug bool, loader *model.ModelLoader,
 }

 func chatEndpoint(cm ConfigMerger, debug bool, loader *model.ModelLoader, threads, ctx int, f16 bool) func(c *fiber.Ctx) error {
+
+	process := func(s string, req *OpenAIRequest, config *Config, loader *model.ModelLoader, responses chan OpenAIResponse) {
+		ComputeChoices(s, req, config, loader, func(s string, c *[]Choice) {}, func(s string) bool {
+			resp := OpenAIResponse{
+				Model: req.Model, // we have to return what the user sent here, due to OpenAI spec.
+				Choices: []Choice{{Delta: &Message{Role: "assistant", Content: s}}},
+				Object: "chat.completion.chunk",
+			}
+			log.Debug().Msgf("Sending goroutine: %s", s)
+
+			responses <- resp
+			return true
+		})
+		close(responses)
+	}
 	return func(c *fiber.Ctx) error {
 		config, input, err := readConfig(cm, c, loader, debug, threads, ctx, f16)
 		if err != nil {

@@ -350,19 +365,7 @@ func chatEndpoint(cm ConfigMerger, debug bool, loader *model.ModelLoader, thread
 		if input.Stream {
 			responses := make(chan OpenAIResponse)

-			go func() {
-				ComputeChoices(predInput, input, config, loader, func(s string, c *[]Choice) {}, func(s string) bool {
-					resp := OpenAIResponse{
-						Model: input.Model, // we have to return what the user sent here, due to OpenAI spec.
-						Choices: []Choice{{Delta: &Message{Role: "assistant", Content: s}}},
-						Object: "chat.completion.chunk",
-					}
-
-					responses <- resp
-					return true
-				})
-				close(responses)
-			}()
+			go process(predInput, input, config, loader, responses)

 			c.Context().SetBodyStreamWriter(fasthttp.StreamWriter(func(w *bufio.Writer) {

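The two hunks above move the streaming producer out of an inline goroutine and into a named `process` closure that sends one chunk per token and then closes the channel. The producer/consumer shape can be sketched in isolation as follows. This is a minimal illustration, not LocalAI's code: `chunk`, `produce`, and `collect` are hypothetical names standing in for `OpenAIResponse`, `process`, and the body stream writer.

```go
package main

import "fmt"

// chunk is a hypothetical stand-in for the OpenAIResponse value in the diff.
type chunk struct {
	Content string
}

// produce plays the role of the `process` closure: it sends one chunk per
// token and closes the channel once generation is finished.
func produce(tokens []string, out chan<- chunk) {
	defer close(out)
	for _, t := range tokens {
		out <- chunk{Content: t}
	}
}

// collect drains the channel the way a stream writer would, stopping when
// the producer closes it.
func collect(in <-chan chunk) []string {
	var got []string
	for c := range in {
		got = append(got, c.Content)
	}
	return got
}

func main() {
	responses := make(chan chunk)
	go produce([]string{"Hel", "lo", "!"}, responses)
	fmt.Println(collect(responses))
}
```

Closing the channel on the producer side is what lets the consumer's `range` loop terminate, which is why only the sender should call `close`.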

@@ -261,10 +261,15 @@ func ModelInference(s string, loader *model.ModelLoader, c Config, tokenCallback
 				predictOptions = append(predictOptions, llama.SetSeed(c.Seed))
 			}

-			return model.Predict(
+			str, er := model.Predict(
 				s,
 				predictOptions...,
 			)
+			// Seems that if we don't free the callback explicitly we leave functions registered (that might try to send on closed channels)
+			// For instance otherwise the API returns: {"error":{"code":500,"message":"send on closed channel","type":""}}
+			// after a stream event has occurred
+			model.SetTokenCallback(nil)
+			return str, er
 		}
 	}

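The comment added in this hunk describes a stale token callback that keeps sending on a channel after the stream has ended. A reduced sketch of that failure mode and the fix is below. `fakeModel` and `streamOnce` are hypothetical; only the idea of calling `SetTokenCallback(nil)` after `Predict` comes from the diff, and the real binding's behaviour is assumed rather than verified here.

```go
package main

import "fmt"

// fakeModel is a hypothetical stand-in for the llama binding: it keeps its
// token callback between calls, as the comment in the diff suggests.
type fakeModel struct {
	callback func(string) bool
}

func (m *fakeModel) SetTokenCallback(cb func(string) bool) { m.callback = cb }

// Predict "generates" the given tokens, reporting each one to the callback
// if one is registered.
func (m *fakeModel) Predict(tokens ...string) string {
	out := ""
	for _, t := range tokens {
		if m.callback != nil && !m.callback(t) {
			break
		}
		out += t
	}
	return out
}

// streamOnce registers a callback that forwards tokens to a channel, runs
// one prediction, then clears the callback before the channel is closed.
func streamOnce(m *fakeModel, tokens ...string) []string {
	ch := make(chan string, len(tokens))
	m.SetTokenCallback(func(s string) bool { ch <- s; return true })
	m.Predict(tokens...)
	m.SetTokenCallback(nil) // otherwise the next Predict would send on a closed channel
	close(ch)
	var got []string
	for s := range ch {
		got = append(got, s)
	}
	return got
}

func main() {
	m := &fakeModel{}
	fmt.Println(streamOnce(m, "a", "b"))
	// A later non-streaming call is safe because no stale callback remains.
	fmt.Println(m.Predict("c", "d"))
}
```

Removing the `SetTokenCallback(nil)` line in this sketch makes the second `Predict` panic with "send on closed channel", which matches the error message quoted in the diff comment.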

@@ -8,6 +8,7 @@ Here is a list of projects that can easily be integrated with the LocalAI backen
 - [discord-bot](https://github.com/go-skynet/LocalAI/tree/master/examples/discord-bot/) (by [@mudler](https://github.com/mudler))
 - [langchain](https://github.com/go-skynet/LocalAI/tree/master/examples/langchain/) (by [@dave-gray101](https://github.com/dave-gray101))
 - [langchain-python](https://github.com/go-skynet/LocalAI/tree/master/examples/langchain-python/) (by [@mudler](https://github.com/mudler))
+- [localai-webui](https://github.com/go-skynet/LocalAI/tree/master/examples/localai-webui/) (by [@dhruvgera](https://github.com/dhruvgera))
 - [rwkv](https://github.com/go-skynet/LocalAI/tree/master/examples/rwkv/) (by [@mudler](https://github.com/mudler))
 - [slack-bot](https://github.com/go-skynet/LocalAI/tree/master/examples/slack-bot/) (by [@mudler](https://github.com/mudler))


26

examples/localai-webui/README.md

Normal file


@@ -0,0 +1,26 @@
+# localai-webui
+
+Example of integration with [dhruvgera/localai-frontend](https://github.com/Dhruvgera/LocalAI-frontend).
+
+
+
+## Setup
+
+```bash
+# Clone LocalAI
+git clone https://github.com/go-skynet/LocalAI
+
+cd LocalAI/examples/localai-webui
+
+# (optional) Checkout a specific LocalAI tag
+# git checkout -b build <TAG>
+
+# Download any desired models to models/ in the parent LocalAI project dir
+# For example: wget https://gpt4all.io/models/ggml-gpt4all-j.bin
+
+# start with docker-compose
+docker-compose up -d --build
+```
+
+Open http://localhost:3000 for the Web UI.
+

20

examples/localai-webui/docker-compose.yml

Normal file


@@ -0,0 +1,20 @@
+version: '3.6'
+
+services:
+  api:
+    image: quay.io/go-skynet/local-ai:latest
+    build:
+      context: .
+      dockerfile: Dockerfile
+    ports:
+      - 8080:8080
+    env_file:
+      - .env
+    volumes:
+      - ./models:/models:cached
+    command: ["/usr/bin/local-ai"]
+
+  frontend:
+    image: quay.io/go-skynet/localai-frontend:master
+    ports:
+      - 3000:3000
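With the compose file above, the `api` service is published on port 8080, and the FAQ text in this diff notes that LocalAI mimics the OpenAI API. A client request could therefore be built roughly as sketched below. The `/v1/chat/completions` path follows the OpenAI convention, and the model name `ggml-gpt4all-j` is an assumption taken from the download example in the README. `newChatRequest` and its types are hypothetical helpers, not part of LocalAI.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
)

// message and chatRequest mirror the OpenAI chat request shape
// (hypothetical local types, defined only for this sketch).
type message struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

type chatRequest struct {
	Model    string    `json:"model"`
	Messages []message `json:"messages"`
}

// newChatRequest builds, but does not send, a chat completion request.
func newChatRequest(baseURL, model, prompt string) (*http.Request, error) {
	body, err := json.Marshal(chatRequest{
		Model:    model,
		Messages: []message{{Role: "user", Content: prompt}},
	})
	if err != nil {
		return nil, err
	}
	req, err := http.NewRequest(http.MethodPost, baseURL+"/v1/chat/completions", bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	return req, nil
}

func main() {
	// Assumed base URL: the api service's published port from the compose file.
	req, err := newChatRequest("http://localhost:8080", "ggml-gpt4all-j", "Hello")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL.String())
	// To actually send it: resp, err := http.DefaultClient.Do(req)
}
```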

4

go.mod


@@ -3,10 +3,10 @@ module github.com/go-skynet/LocalAI
 go 1.19

 require (
-	github.com/donomii/go-rwkv.cpp v0.0.0-20230502223004-0a3db3d72e7d
+	github.com/donomii/go-rwkv.cpp v0.0.0-20230503112711-af62fcc432be
 	github.com/go-skynet/go-gpt2.cpp v0.0.0-20230422085954-245a5bfe6708
 	github.com/go-skynet/go-gpt4all-j.cpp v0.0.0-20230422090028-1f7bff57f66c
-	github.com/go-skynet/go-llama.cpp v0.0.0-20230502121737-8ceb6167e405
+	github.com/go-skynet/go-llama.cpp v0.0.0-20230503200855-2e6ae1269e03
 	github.com/gofiber/fiber/v2 v2.44.0
 	github.com/hashicorp/go-multierror v1.1.1
 	github.com/jaypipes/ghw v0.10.0

4

go.sum


@@ -14,6 +14,8 @@ github.com/davecgh/go-spew v1.1.1 h1:vj9j/u1bqnvCEfJOwUhtlOARqs3+rkHYY13jYWTU97c
 github.com/davecgh/go-spew v1.1.1/go.mod h1:J7Y8YcW2NihsgmVo/mv3lAwl/skON4iLHjSsI+c5H38=
 github.com/donomii/go-rwkv.cpp v0.0.0-20230502223004-0a3db3d72e7d h1:lSHwlYf1H4WAWYgf7rjEVTGen1qmigUq2Egpu8mnQiY=
 github.com/donomii/go-rwkv.cpp v0.0.0-20230502223004-0a3db3d72e7d/go.mod h1:H6QBF7/Tz6DAEBDXQged4H1BvsmqY/K5FG9wQRGa01g=
+github.com/donomii/go-rwkv.cpp v0.0.0-20230503112711-af62fcc432be h1:3Hic97PY6hcw/SY44RuR7kyONkxd744RFeRrqckzwNQ=
+github.com/donomii/go-rwkv.cpp v0.0.0-20230503112711-af62fcc432be/go.mod h1:gWy7FIWioqYmYxkaoFyBnaKApeZVrUkHhv9EV9pz4dM=
 github.com/ghodss/yaml v1.0.0 h1:wQHKEahhL6wmXdzwWG11gIVCkOv05bNOh+Rxn0yngAk=
 github.com/ghodss/yaml v1.0.0/go.mod h1:4dBDuWmgqj2HViK6kFavaiC9ZROes6MMH2rRYeMEF04=
 github.com/go-logr/logr v1.2.3 h1:2DntVwHkVopvECVRSlL5PSo9eG+cAkDCuckLubN+rq0=

@@ -31,6 +33,8 @@ github.com/go-skynet/go-llama.cpp v0.0.0-20230430075552-377fd245eae2 h1:CYQRCbOf
 github.com/go-skynet/go-llama.cpp v0.0.0-20230430075552-377fd245eae2/go.mod h1:35AKIEMY+YTKCBJIa/8GZcNGJ2J+nQk1hQiWo/OnEWw=
 github.com/go-skynet/go-llama.cpp v0.0.0-20230502121737-8ceb6167e405 h1:pbIxJ/eiL1Irdprxk/mquaxjR1XDGCE+7CT9BGJNRaY=
 github.com/go-skynet/go-llama.cpp v0.0.0-20230502121737-8ceb6167e405/go.mod h1:35AKIEMY+YTKCBJIa/8GZcNGJ2J+nQk1hQiWo/OnEWw=
+github.com/go-skynet/go-llama.cpp v0.0.0-20230503200855-2e6ae1269e03 h1:j9fhITFhkz4SczJU0jIaMYo5tdTVTrj+zdhEgWHEr40=
+github.com/go-skynet/go-llama.cpp v0.0.0-20230503200855-2e6ae1269e03/go.mod h1:LvSQx5QAYBAMpWkbyVFFDiM1Tzj8LP55DvmUM3hbRMY=
 github.com/go-task/slim-sprig v0.0.0-20230315185526-52ccab3ef572 h1:tfuBGBXKqDEevZMzYi5KSi8KkcZtzBcTgAUUtapy0OI=
 github.com/go-task/slim-sprig v0.0.0-20230315185526-52ccab3ef572/go.mod h1:9Pwr4B2jHnOSGXyyzV8ROjYa2ojvAY6HCGYYfMoC3Ls=
 github.com/godbus/dbus/v5 v5.0.4/go.mod h1:xhWf0FNVPg57R7Z0UbKHbJfkEywrmjJnf7w5xrFpKfA=

@@ -81,10 +81,9 @@ func (ml *ModelLoader) TemplatePrefix(modelName string, in interface{}) (string,
 		if exists {
 			m = t
 		}
-
 	}
 	if m == nil {
-		return "", nil
+		return "", fmt.Errorf("failed loading any template")
 	}

 	var buf bytes.Buffer
