Mirror of https://github.com/mudler/LocalAI.git, synced 2026-02-03 03:02:38 -05:00.

Compare commits

189 Commits

| SHA1 |
|---|
| 18a701355c |
| 3911957d34 |
| f5146bde18 |
| b57ea10c94 |
| 821cfed6c0 |
| 728f297bb8 |
| 4c0013fd79 |
| 65d06285d8 |
| e0d1a8995d |
| 425beea6c5 |
| cdfb930a69 |
| 09641b9790 |
| aac9a57500 |
| 59f7953249 |
| 217dbb448e |
| 76c881043e |
| 835a20610b |
| 74e808b8c3 |
| 53c83f2fae |
| 62365fa31d |
| a44c8e9b4e |
| 320e430c7f |
| 8615646827 |
| 925d7c3057 |
| e350924ac1 |
| e891a46740 |
| cd9285bbe6 |
| 917ff13c86 |
| 2a40f44023 |
| c22d06c780 |
| babbd23744 |
| eee41cbe2b |
| bf54b78270 |
| 589dfae89f |
| c8cc197ddd |
| 76c561a908 |
| 04797a80e1 |
| 29583a5ea5 |
| d12c1f7a4a |
| 505572dae8 |
| 3ddea794e1 |
| 10e03bde35 |
| e969604d75 |
| c822e18f0d |
| 891af1c524 |
| 5807d0b766 |
| 9decd0813c |
| 43d3fb3eba |
| f5f8c687be |
| 9e5cd0f10b |
| 231a3e7c02 |
| 57172e2e30 |
| 043399dd07 |
| 6b19356740 |
| 1cbe6a7067 |
| 2912f9870f |
| 9630be56e1 |
| 4aa78843c0 |
| b36d9f3776 |
| 6f54cab3f0 |
| ed5df1e68e |
| 3c07e11e73 |
| 91bdad1d12 |
| 482a83886e |
| b8f52d67e1 |
| 9ed82199c5 |
| 864aaf8c4d |
| c7056756d5 |
| 93cc8569c3 |
| 05a3d569b0 |
| 7bc08797f9 |
| 5b22704799 |
| 9609e4392b |
| d0c033d09b |
| 4e381cbe92 |
| ffaf3b1d36 |
| 465a3b755d |
| 91fc52bfb7 |
| b425954b9e |
| 2e64ed6255 |
| bf3d936aea |
| 19deea986a |
| aa7a18f131 |
| 837ce2cb31 |
| cadce540f9 |
| 1fade53a61 |
| 207ce81e4a |
| fc59f74849 |
| 9d3c5ead93 |
| 549a01b62e |
| 5a6d9d4e5b |
| 1a7587ee48 |
| cc9aa9eb3f |
| 5617e50ebc |
| b83e8b950d |
| d15fc5371a |
| 3f739575d8 |
| 7e4616646f |
| 44ffaf86ad |
| d096644c67 |
| 1428600de4 |
| 17b18df600 |
| cd81dbae1c |
| 76be06ed56 |
| c2026e01c0 |
| cdca286be1 |
| 41de6efca9 |
| 63a4ccebdc |
| 9237c1e91d |
| 9d051c5d4f |
| acd03d15f2 |
| a035de2fdd |
| 76a1267799 |
| e533b008d4 |
| a4380228e3 |
| 2a9d7474ce |
| 850a690290 |
| 39edd9ff73 |
| b82bbbfc6b |
| 023c065812 |
| a627a6c4e2 |
| 6c9ddff8e9 |
| c5318587b8 |
| c3622299ce |
| de36a48861 |
| 961ca93219 |
| 557ccc5ad8 |
| 2488c445b6 |
| b4241d0a0d |
| 8250391e49 |
| fd1df4e971 |
| 5115b2faa3 |
| 93e82a8bf4 |
| 4413defca5 |
| f359e1c6c4 |
| 1bc87d582d |
| a86a383357 |
| 16f02c7b30 |
| fe2706890c |
| 85f0f8227d |
| 59e3c02002 |
| 032dee256f |
| 6b5e2b2bf5 |
| 6fc303de87 |
| 6ad6e4873d |
| d6d7391da8 |
| 11675932ac |
| f02202e1e1 |
| f8ee20991c |
| e6db14e2f1 |
| d00886abea |
| 4873d2bfa1 |
| 9f426578cf |
| 9d01b695a8 |
| 93829ab228 |
| dd234f86d5 |
| 3daff6f1aa |
| 89dfa0f5fc |
| bc03c492a0 |
| f50a4c1454 |
| d13d4d95ce |
| 428790ec06 |
| 4f551ce414 |
| 6ed7b10273 |
| 02979566ee |
| cbdcc839f3 |
| e1c8f087f4 |
| 3a90ea44a5 |
| e55492475d |
| 07ec2e441d |
| 38d7e0b43c |
| 3411bfd00d |
| 7e5fe35ae4 |
| 8c8cf38d4d |
| 75b25297fd |
| 009ee47fe2 |
| ec2adc2c03 |
| ad301e6ed7 |
| d094381e5d |
| 3ff9bbd217 |
| e62ee2bc06 |
| b49721cdd1 |
| 64c0a7967f |
| e96eadab40 |
| e73283121b |
| 857d13e8d6 |
| 91db3d4d5c |
| 961cf29217 |
| c839b334eb |

@@ -1,2 +1,4 @@

```
models
examples/chatbot-ui/models
examples/chatbot-ui/models
examples/rwkv/models
examples/**/models
```

27 .env

@@ -1,5 +1,30 @@

```
## Set number of threads.
## Note: prefer the number of physical cores. Overbooking the CPU degrades performance notably.
# THREADS=14

## Specify a different bind address (defaults to ":8080")
# ADDRESS=127.0.0.1:8080

## Default models context size
# CONTEXT_SIZE=512

## Default path for models
MODELS_PATH=/models

## Enable debug mode
# DEBUG=true
# BUILD_TYPE=generic

## Specify a build type. Available: cublas, openblas.
# BUILD_TYPE=openblas

## Uncomment and set to false to disable rebuilding from source
# REBUILD=false

## Enable image generation with stablediffusion (requires REBUILD=true)
# GO_TAGS=stablediffusion

## Path where to store generated images
# IMAGE_PATH=/tmp

## Specify a default upload limit in MB (whisper)
# UPLOAD_LIMIT
```

31 .github/ISSUE_TEMPLATE/bug_report.md (vendored, Normal file)

@@ -0,0 +1,31 @@

```markdown
---
name: Bug report
about: Create a report to help us improve
title: ''
labels: bug
assignees: mudler

---

<!-- Thanks for helping us to improve LocalAI! We welcome all bug reports. Please fill out each area of the template so we can better help you. Comments like this will be hidden when you post but you can delete them if you wish. -->

**LocalAI version:**
<!-- Container Image or LocalAI tag/commit -->

**Environment, CPU architecture, OS, and Version:**
<!-- Provide the output from "uname -a", HW specs, if it's a VM -->

**Describe the bug**
<!-- A clear and concise description of what the bug is. -->

**To Reproduce**
<!-- Steps to reproduce the behavior, including the LocalAI command used, if any -->

**Expected behavior**
<!-- A clear and concise description of what you expected to happen. -->

**Logs**
<!-- If applicable, add logs while running LocalAI in debug mode (`--debug` or `DEBUG=true`) to help explain your problem. -->

**Additional context**
<!-- Add any other context about the problem here. -->
```

8 .github/ISSUE_TEMPLATE/config.yml (vendored, Normal file)

@@ -0,0 +1,8 @@

```yaml
blank_issues_enabled: false
contact_links:
  - name: Community Support
    url: https://github.com/go-skynet/LocalAI/discussions
    about: Please ask and answer questions here.
  - name: Discord
    url: https://discord.gg/uJAeKSAGDy
    about: Join our community on Discord!
```

22 .github/ISSUE_TEMPLATE/feature_request.md (vendored, Normal file)

@@ -0,0 +1,22 @@

```markdown
---
name: Feature request
about: Suggest an idea for this project
title: ''
labels: enhancement
assignees: mudler

---

<!-- Thanks for helping us to improve LocalAI! We welcome all feature requests. Please fill out each area of the template so we can better help you. Comments like this will be hidden when you post but you can delete them if you wish. -->

**Is your feature request related to a problem? Please describe.**
<!-- A clear and concise description of what the problem is. Ex. I'm always frustrated when [...] -->

**Describe the solution you'd like**
<!-- A clear and concise description of what you want to happen. -->

**Describe alternatives you've considered**
<!-- A clear and concise description of any alternative solutions or features you've considered. -->

**Additional context**
<!-- Add any other context or screenshots about the feature request here. -->
```

23 .github/PULL_REQUEST_TEMPLATE.md (vendored, Normal file)

@@ -0,0 +1,23 @@

```markdown
**Description**

This PR fixes #

**Notes for Reviewers**


**[Signed commits](../CONTRIBUTING.md#signing-off-on-commits-developer-certificate-of-origin)**
- [ ] Yes, I signed my commits.


<!--
Thank you for contributing to LocalAI!

Contributing Conventions:

1. Include descriptive PR titles with [<component-name>] prepended.
2. Build and test your changes before submitting a PR.
3. Sign your commits

By following the community's contribution conventions upfront, the review process will
be accelerated and your PR merged more quickly.
-->
```

24 .github/release.yml (vendored, Normal file)

@@ -0,0 +1,24 @@

```yaml
# .github/release.yml

changelog:
  exclude:
    labels:
      - ignore-for-release
  categories:
    - title: Breaking Changes 🛠
      labels:
        - Semver-Major
        - breaking-change
    - title: "Bug fixes :bug:"
      labels:
        - bug
    - title: Exciting New Features 🎉
      labels:
        - Semver-Minor
        - enhancement
    - title: 👒 Dependencies
      labels:
        - dependencies
    - title: Other Changes
      labels:
        - "*"
```
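As we understand GitHub's documented behavior, generated release notes work as follows:

- A pull request that carries an excluded label is left out.
- Every other pull request is listed under the first category whose labels match.
- `"*"` acts as a catch-all.

The Go sketch below models that rule using the labels from this file. `categorize` is a hypothetical helper, not part of any GitHub API.

```go
package main

import "fmt"

// category mirrors one entry under changelog.categories.
type category struct {
	title  string
	labels []string
}

// Values copied from .github/release.yml.
var excludedLabels = map[string]bool{"ignore-for-release": true}

var categories = []category{
	{"Breaking Changes 🛠", []string{"Semver-Major", "breaking-change"}},
	{"Bug fixes :bug:", []string{"bug"}},
	{"Exciting New Features 🎉", []string{"Semver-Minor", "enhancement"}},
	{"👒 Dependencies", []string{"dependencies"}},
	{"Other Changes", []string{"*"}},
}

// categorize returns the title of the first category whose label list
// matches the pull request, or "" when the pull request is excluded.
func categorize(prLabels []string) string {
	has := make(map[string]bool, len(prLabels))
	for _, l := range prLabels {
		if excludedLabels[l] {
			return ""
		}
		has[l] = true
	}
	for _, c := range categories {
		for _, l := range c.labels {
			if l == "*" || has[l] {
				return c.title
			}
		}
	}
	return ""
}

func main() {
	fmt.Println(categorize([]string{"bug", "enhancement"}))
}
```

Because categories are checked in order, a pull request labeled both `bug` and `enhancement` lands under bug fixes only.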

18 .github/stale.yml (vendored, Normal file)

@@ -0,0 +1,18 @@

```yaml
# Number of days of inactivity before an issue becomes stale
daysUntilStale: 45
# Number of days of inactivity before a stale issue is closed
daysUntilClose: 10
# Issues with these labels will never be considered stale
exemptLabels:
  - issue/willfix
# Label to use when marking an issue as stale
staleLabel: issue/stale
# Comment to post when marking an issue as stale. Set to `false` to disable
markComment: >
  This issue has been automatically marked as stale because it has not had
  recent activity. It will be closed if no further activity occurs. Thank you
  for your contributions.
# Comment to post when closing a stale issue. Set to `false` to disable
closeComment: >
  This issue is being automatically closed due to inactivity.
  However, you may choose to reopen this issue.
```

19 .github/workflows/bump_deps.yaml (vendored)

@@ -9,18 +9,27 @@ jobs:

```yaml
      fail-fast: false
      matrix:
        include:
          - repository: "go-skynet/go-gpt4all-j.cpp"
            variable: "GOGPT4ALLJ_VERSION"
            branch: "master"
          - repository: "go-skynet/go-llama.cpp"
            variable: "GOLLAMA_VERSION"
            branch: "master"
          - repository: "go-skynet/go-gpt2.cpp"
            variable: "GOGPT2_VERSION"
          - repository: "go-skynet/go-ggml-transformers.cpp"
            variable: "GOGGMLTRANSFORMERS_VERSION"
            branch: "master"
          - repository: "donomii/go-rwkv.cpp"
            variable: "RWKV_VERSION"
            branch: "main"
          - repository: "ggerganov/whisper.cpp"
            variable: "WHISPER_CPP_VERSION"
            branch: "master"
          - repository: "go-skynet/go-bert.cpp"
            variable: "BERT_VERSION"
            branch: "master"
          - repository: "go-skynet/bloomz.cpp"
            variable: "BLOOMZ_VERSION"
            branch: "main"
          - repository: "nomic-ai/gpt4all"
            variable: "GPT4ALL_VERSION"
            branch: "main"
    runs-on: ubuntu-latest
    steps:
    - uses: actions/checkout@v3
```
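Each matrix entry pairs an upstream repository with a Makefile variable such as `GOLLAMA_VERSION`. The workflow's own steps are outside this excerpt. Assuming those steps pin the variable to a new commit SHA, the rewrite could look like the hypothetical Go helper below, which edits the `VARIABLE?=value` assignment in place.

```go
package main

import (
	"fmt"
	"regexp"
)

// bumpVersion rewrites the `VARIABLE?=value` line for variable so it pins sha.
// Hypothetical helper: the real workflow steps are not shown in this diff.
// It reports false when the Makefile has no such assignment.
func bumpVersion(makefile, variable, sha string) (string, bool) {
	// (?m) makes ^ and $ match at line boundaries; QuoteMeta escapes the name.
	re := regexp.MustCompile(`(?m)^` + regexp.QuoteMeta(variable) + `\?=.*$`)
	if !re.MatchString(makefile) {
		return makefile, false
	}
	return re.ReplaceAllLiteralString(makefile, variable+"?="+sha), true
}

func main() {
	mk := "GOLLAMA_VERSION?=4bd3910005a593a6db237bc82c506d6d9fb81b18\n" +
		"BERT_VERSION?=cea1ed76a7f48ef386a8e369f6c82c48cdf2d551\n"
	// The SHA below is a placeholder, not a real upstream commit.
	out, ok := bumpVersion(mk, "BERT_VERSION", "0123456789abcdef0123456789abcdef01234567")
	fmt.Println(ok)
	fmt.Print(out)
}
```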

4 .github/workflows/image.yml (vendored)

@@ -9,6 +9,10 @@ on:

```yaml
    tags:
      - '*'

concurrency:
  group: ci-${{ github.head_ref || github.ref }}-${{ github.repository }}
  cancel-in-progress: true

jobs:
  docker:
    runs-on: ubuntu-latest
```
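The concurrency key uses `github.head_ref`, which is set only for pull-request events, and falls back to `github.ref` otherwise. Each PR branch or pushed ref therefore gets its own group, and `cancel-in-progress: true` cancels the older run in that group. The Go function below imitates the expression. The ref and repository values in the example are illustrative.

```go
package main

import "fmt"

// concurrencyGroup imitates the expression
//   ci-${{ github.head_ref || github.ref }}-${{ github.repository }}
// In GitHub expressions `a || b` yields b when a is falsy, such as the
// empty head_ref of a push or tag event.
func concurrencyGroup(prefix, headRef, ref, repository string) string {
	branch := headRef
	if branch == "" {
		branch = ref
	}
	return fmt.Sprintf("%s-%s-%s", prefix, branch, repository)
}

func main() {
	// Two pushes to the same PR branch produce the same key, so the newer
	// run cancels the older one.
	fmt.Println(concurrencyGroup("ci", "my-feature", "refs/pull/1/merge", "mudler/LocalAI"))
	fmt.Println(concurrencyGroup("ci", "", "refs/heads/master", "mudler/LocalAI"))
}
```

The test workflow below uses the same pattern with a `ci-tests` prefix, so image builds and test runs never cancel each other.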

84 .github/workflows/release.yaml (vendored, Normal file)

@@ -0,0 +1,84 @@

```yaml
name: Build and Release

on: push

permissions:
  contents: write

jobs:
  build-linux:
    strategy:
      matrix:
        include:
          - build: 'avx2'
            defines: ''
          - build: 'avx'
            defines: '-DLLAMA_AVX2=OFF'
          - build: 'avx512'
            defines: '-DLLAMA_AVX512=ON'
    runs-on: ubuntu-latest
    steps:
      - name: Clone
        uses: actions/checkout@v3
        with:
          submodules: true
      - name: Dependencies
        run: |
          sudo apt-get update
          sudo apt-get install build-essential ffmpeg
      - name: Build
        id: build
        env:
          CMAKE_ARGS: "${{ matrix.defines }}"
          BUILD_ID: "${{ matrix.build }}"
        run: |
          make dist
      - uses: actions/upload-artifact@v3
        with:
          name: ${{ matrix.build }}
          path: release/
      - name: Release
        uses: softprops/action-gh-release@v1
        if: startsWith(github.ref, 'refs/tags/')
        with:
          files: |
            release/*

  build-macOS:
    strategy:
      matrix:
        include:
          - build: 'avx2'
            defines: ''
          - build: 'avx'
            defines: '-DLLAMA_AVX2=OFF'
          - build: 'avx512'
            defines: '-DLLAMA_AVX512=ON'
    runs-on: macOS-latest
    steps:
      - name: Clone
        uses: actions/checkout@v3
        with:
          submodules: true

      - name: Dependencies
        run: |
          brew update
          brew install sdl2 ffmpeg
      - name: Build
        id: build
        env:
          CMAKE_ARGS: "${{ matrix.defines }}"
          BUILD_ID: "${{ matrix.build }}"
        run: |
          make dist
      - uses: actions/upload-artifact@v3
        with:
          name: ${{ matrix.build }}
          path: release/
      - name: Release
        uses: softprops/action-gh-release@v1
        if: startsWith(github.ref, 'refs/tags/')
        with:
          files: |
            release/*
```
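Each matrix entry supplies `build` and `defines`, and the Build step forwards them as `BUILD_ID` and `CMAKE_ARGS`. GitHub Actions renders a reference to a nonexistent property as an empty string. The key name used in the `CMAKE_ARGS` expression therefore has to match the matrix key exactly. Otherwise all three variants build with identical flags. The Go sketch below models each entry as a map to show the difference. `buildEnv` is hypothetical.

```go
package main

import "fmt"

// Each include entry from the release workflow, modeled as a map.
var matrix = []map[string]string{
	{"build": "avx2", "defines": ""},
	{"build": "avx", "defines": "-DLLAMA_AVX2=OFF"},
	{"build": "avx512", "defines": "-DLLAMA_AVX512=ON"},
}

// buildEnv returns the env block for one job, reading the CMake flags from
// the matrix key named flagsKey. A missing key yields "", mirroring how
// GitHub Actions renders a reference to a nonexistent property.
func buildEnv(entry map[string]string, flagsKey string) []string {
	return []string{
		"CMAKE_ARGS=" + entry[flagsKey],
		"BUILD_ID=" + entry["build"],
	}
}

func main() {
	for _, e := range matrix {
		fmt.Println(buildEnv(e, "defines"))
	}
}
```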

26 .github/workflows/release.yml.disabled (vendored)

@@ -1,26 +0,0 @@

```yaml
name: goreleaser

on:
  push:
    tags:
      - 'v*'

jobs:
  goreleaser:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v3
        with:
          fetch-depth: 0
      - name: Set up Go
        uses: actions/setup-go@v3
        with:
          go-version: 1.18
      - name: Run GoReleaser
        uses: goreleaser/goreleaser-action@v4
        with:
          version: latest
          args: release --clean
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
```

8 .github/workflows/test.yml (vendored)

@@ -9,6 +9,10 @@ on:

```yaml
    tags:
      - '*'

concurrency:
  group: ci-tests-${{ github.head_ref || github.ref }}-${{ github.repository }}
  cancel-in-progress: true

jobs:
  ubuntu-latest:
    runs-on: ubuntu-latest
```

@@ -21,7 +25,7 @@ jobs:

```yaml
      - name: Dependencies
        run: |
          sudo apt-get update
          sudo apt-get install build-essential
          sudo apt-get install build-essential ffmpeg
      - name: Test
        run: |
          make test
```

@@ -38,7 +42,7 @@ jobs:

```yaml
      - name: Dependencies
        run: |
          brew update
          brew install sdl2
          brew install sdl2 ffmpeg
      - name: Test
        run: |
          make test
```

12 .gitignore (vendored)

@@ -1,7 +1,10 @@

```
# go-llama build artifacts
go-llama
go-gpt4all-j
gpt4all
go-stable-diffusion
go-gpt2
go-rwkv
whisper.cpp

# LocalAI build binary
LocalAI
```

@@ -11,4 +14,9 @@ local-ai

```

# Ignore models
models/*
test-models/
test-models/

release/

# just in case
.DS_Store
```

@@ -1,15 +0,0 @@

```yaml
# Make sure to check the documentation at http://goreleaser.com
project_name: local-ai
builds:
  - ldflags:
      - -w -s
    env:
      - CGO_ENABLED=0
    goos:
      - linux
      - darwin
      - windows
    goarch:
      - amd64
      - arm64
    binary: '{{ .ProjectName }}'
```

19 .vscode/launch.json (vendored)

@@ -2,7 +2,20 @@

```json
    "version": "0.2.0",
    "configurations": [
        {
            "name": "Launch Go",
            "name": "Python: Current File",
            "type": "python",
            "request": "launch",
            "program": "${file}",
            "console": "integratedTerminal",
            "justMyCode": false,
            "cwd": "${workspaceFolder}/examples/langchain-chroma",
            "env": {
                "OPENAI_API_BASE": "http://localhost:8080/v1",
                "OPENAI_API_KEY": "abc"
            }
        },
        {
            "name": "Launch LocalAI API",
            "type": "go",
            "request": "launch",
            "mode": "debug",
```

@@ -11,8 +24,8 @@

```json
                "api"
            ],
            "env": {
                "C_INCLUDE_PATH": "/workspace/go-llama:/workspace/go-gpt4all-j:/workspace/go-gpt2",
                "LIBRARY_PATH": "/workspace/go-llama:/workspace/go-gpt4all-j:/workspace/go-gpt2",
                "C_INCLUDE_PATH": "${workspaceFolder}/go-llama:${workspaceFolder}/go-stable-diffusion/:${workspaceFolder}/gpt4all/gpt4all-bindings/golang/:${workspaceFolder}/go-gpt2:${workspaceFolder}/go-rwkv:${workspaceFolder}/whisper.cpp:${workspaceFolder}/go-bert:${workspaceFolder}/bloomz",
                "LIBRARY_PATH": "${workspaceFolder}/go-llama:${workspaceFolder}/go-stable-diffusion/:${workspaceFolder}/gpt4all/gpt4all-bindings/golang/:${workspaceFolder}/go-gpt2:${workspaceFolder}/go-rwkv:${workspaceFolder}/whisper.cpp:${workspaceFolder}/go-bert:${workspaceFolder}/bloomz",
                "DEBUG": "true"
            }
        }
```

10 Dockerfile

@@ -1,9 +1,15 @@

```dockerfile
ARG GO_VERSION=1.20
ARG BUILD_TYPE=
FROM golang:$GO_VERSION
ENV REBUILD=true
WORKDIR /build
RUN apt-get update && apt-get install -y cmake
RUN apt-get update && apt-get install -y cmake curl libgomp1 libopenblas-dev libopenblas-base libopencv-dev libopencv-core-dev libopencv-core4.5 ca-certificates
COPY . .
RUN make prepare-sources
RUN ln -s /usr/include/opencv4/opencv2/ /usr/include/opencv2
RUN make build
ENV HEALTHCHECK_ENDPOINT=http://localhost:8080/readyz
# Define the health check command
HEALTHCHECK --interval=30s --timeout=360s --retries=10 \
    CMD curl -f $HEALTHCHECK_ENDPOINT || exit 1
EXPOSE 8080
ENTRYPOINT [ "/build/entrypoint.sh" ]
```

@@ -4,11 +4,17 @@ ARG BUILD_TYPE=

```dockerfile

FROM golang:$GO_VERSION as builder
WORKDIR /build
RUN apt-get update && apt-get install -y cmake
RUN apt-get update && apt-get install -y cmake libgomp1 libopenblas-dev libopenblas-base libopencv-dev libopencv-core-dev libopencv-core4.5
RUN ln -s /usr/include/opencv4/opencv2/ /usr/include/opencv2
COPY . .
RUN make build

FROM debian:$DEBIAN_VERSION
COPY --from=builder /build/local-ai /usr/bin/local-ai
RUN apt-get update && apt-get install -y ca-certificates curl
ENV HEALTHCHECK_ENDPOINT=http://localhost:8080/readyz
# Define the health check command
HEALTHCHECK --interval=30s --timeout=360s --retries=10 \
    CMD curl -f $HEALTHCHECK_ENDPOINT || exit 1
EXPOSE 8080
ENTRYPOINT [ "/usr/bin/local-ai" ]
```

215 Makefile

@@ -3,131 +3,238 @@ GOTEST=$(GOCMD) test

```makefile
GOVET=$(GOCMD) vet
BINARY_NAME=local-ai

GOLLAMA_VERSION?=2e6ae1269e035886fc64e268a6dda9d8c4ba8c75
GOGPT4ALLJ_VERSION?=1f7bff57f66cb7062e40d0ac3abd2217815e5109
GOGPT2_VERSION?=245a5bfe6708ab80dc5c733dcdbfbe3cfd2acdaa
RWKV_REPO?=https://github.com/donomii/go-rwkv.cpp
RWKV_VERSION?=af62fcc432be2847acb6e0688b2c2491d6588d58
GOLLAMA_VERSION?=4bd3910005a593a6db237bc82c506d6d9fb81b18
GPT4ALL_REPO?=https://github.com/nomic-ai/gpt4all
GPT4ALL_VERSION?=73db20ba85fbbdc66a56e2619394c0eea40dc72b
GOGGMLTRANSFORMERS_VERSION?=4f18e5eb75089dc1fc8f1c955bb8f73d18520a46
RWKV_REPO?=https://github.com/mudler/go-rwkv.cpp
RWKV_VERSION?=dcbd34aff983b3d04fa300c5da5ec4bfdf6db295
WHISPER_CPP_VERSION?=9b926844e3ae0ca6a0d13573b2e0349be1a4b573
BERT_VERSION?=cea1ed76a7f48ef386a8e369f6c82c48cdf2d551
BLOOMZ_VERSION?=e9366e82abdfe70565644fbfae9651976714efd1
BUILD_TYPE?=
CGO_LDFLAGS?=
CUDA_LIBPATH?=/usr/local/cuda/lib64/
STABLEDIFFUSION_VERSION?=c0748eca3642d58bcf9521108bcee46959c647dc
GO_TAGS?=
BUILD_ID?=git
LD_FLAGS?=
OPTIONAL_TARGETS?=

OS := $(shell uname -s)
ARCH := $(shell uname -m)
GREEN  := $(shell tput -Txterm setaf 2)
YELLOW := $(shell tput -Txterm setaf 3)
WHITE  := $(shell tput -Txterm setaf 7)
CYAN   := $(shell tput -Txterm setaf 6)
RESET  := $(shell tput -Txterm sgr0)

C_INCLUDE_PATH=$(shell pwd)/go-llama:$(shell pwd)/go-gpt4all-j:$(shell pwd)/go-gpt2:$(shell pwd)/go-rwkv
LIBRARY_PATH=$(shell pwd)/go-llama:$(shell pwd)/go-gpt4all-j:$(shell pwd)/go-gpt2:$(shell pwd)/go-rwkv
C_INCLUDE_PATH=$(shell pwd)/go-llama:$(shell pwd)/go-stable-diffusion/:$(shell pwd)/gpt4all/gpt4all-bindings/golang/:$(shell pwd)/go-ggml-transformers:$(shell pwd)/go-rwkv:$(shell pwd)/whisper.cpp:$(shell pwd)/go-bert:$(shell pwd)/bloomz
LIBRARY_PATH=$(shell pwd)/go-llama:$(shell pwd)/go-stable-diffusion/:$(shell pwd)/gpt4all/gpt4all-bindings/golang/:$(shell pwd)/go-ggml-transformers:$(shell pwd)/go-rwkv:$(shell pwd)/whisper.cpp:$(shell pwd)/go-bert:$(shell pwd)/bloomz
```

```makefile
# Use this if you want to set the default behavior
ifndef BUILD_TYPE
  BUILD_TYPE:=default
ifeq ($(BUILD_TYPE),openblas)
  CGO_LDFLAGS+=-lopenblas
endif

ifeq ($(BUILD_TYPE),generic)
  GENERIC_PREFIX:=generic-
else
  GENERIC_PREFIX:=
ifeq ($(BUILD_TYPE),cublas)
  CGO_LDFLAGS+=-lcublas -lcudart -L$(CUDA_LIBPATH)
  export LLAMA_CUBLAS=1
endif

ifeq ($(BUILD_TYPE),clblas)
  CGO_LDFLAGS+=-lOpenCL -lclblast
endif

# glibc-static or glibc-devel-static required
ifeq ($(STATIC),true)
  LD_FLAGS=-linkmode external -extldflags -static
endif

ifeq ($(GO_TAGS),stablediffusion)
  OPTIONAL_TARGETS+=go-stable-diffusion/libstablediffusion.a
endif
```
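The BUILD_TYPE conditionals choose extra linker flags, plus the `LLAMA_CUBLAS` variable for cuBLAS, and append them with `+=`. The sketch below expresses the same table in Go. It is an illustration only and nothing in the build uses it. `buildFlags` and `appendFlags` are hypothetical names, `generic` is treated as a plain string match, and unknown build types add nothing.

```go
package main

import (
	"fmt"
	"strings"
)

// buildFlags mirrors the BUILD_TYPE conditionals: extra CGO_LDFLAGS, the
// GENERIC_PREFIX value, and extra exported environment variables.
func buildFlags(buildType, cudaLibPath string) (ldflags, prefix string, env []string) {
	switch buildType {
	case "openblas":
		ldflags = "-lopenblas"
	case "cublas":
		ldflags = "-lcublas -lcudart -L" + cudaLibPath
		env = append(env, "LLAMA_CUBLAS=1")
	case "clblas":
		ldflags = "-lOpenCL -lclblast"
	case "generic":
		prefix = "generic-"
	}
	return ldflags, prefix, env
}

// appendFlags imitates make's `+=`, which separates the existing value and
// the appended text with one space (and adds none to an empty variable).
func appendFlags(existing, extra string) string {
	return strings.TrimSpace(existing + " " + extra)
}

func main() {
	ld, prefix, env := buildFlags("cublas", "/usr/local/cuda/lib64/")
	fmt.Println(appendFlags("", ld), prefix, env)
}
```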

```makefile
.PHONY: all test build vendor

all: help

## GPT4ALL-J
go-gpt4all-j:
	git clone --recurse-submodules https://github.com/go-skynet/go-gpt4all-j.cpp go-gpt4all-j
	cd go-gpt4all-j && git checkout -b build $(GOGPT4ALLJ_VERSION) && git submodule update --init --recursive --depth 1
## GPT4ALL
gpt4all:
	git clone --recurse-submodules $(GPT4ALL_REPO) gpt4all
	cd gpt4all && git checkout -b build $(GPT4ALL_VERSION) && git submodule update --init --recursive --depth 1
	# This is hackish, but needed as both go-llama and go-gpt4allj have their own version of ggml.
	@find ./go-gpt4all-j -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_gptj_/g' {} +
	@find ./go-gpt4all-j -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_gptj_/g' {} +
	@find ./go-gpt4all-j -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_gptj_/g' {} +
	@find ./go-gpt4all-j -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_/gptj_/g' {} +
	@find ./go-gpt4all-j -type f -name "*.h" -exec sed -i'' -e 's/gpt_/gptj_/g' {} +
	@find ./go-gpt4all-j -type f -name "*.cpp" -exec sed -i'' -e 's/json_/json_gptj_/g' {} +
	@find ./go-gpt4all-j -type f -name "*.cpp" -exec sed -i'' -e 's/void replace/void json_gptj_replace/g' {} +
	@find ./go-gpt4all-j -type f -name "*.cpp" -exec sed -i'' -e 's/::replace/::json_gptj_replace/g' {} +
	@find ./gpt4all -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_gptj_/g' {} +
	@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_gptj_/g' {} +
	@find ./gpt4all -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_gptj_/g' {} +
	@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_/gptj_/g' {} +
	@find ./gpt4all -type f -name "*.h" -exec sed -i'' -e 's/gpt_/gptj_/g' {} +
	@find ./gpt4all -type f -name "*.h" -exec sed -i'' -e 's/set_console_color/set_gptj_console_color/g' {} +
	@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/set_console_color/set_gptj_console_color/g' {} +
	@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/llama_/gptjllama_/g' {} +
	@find ./gpt4all -type f -name "*.go" -exec sed -i'' -e 's/llama_/gptjllama_/g' {} +
	@find ./gpt4all -type f -name "*.h" -exec sed -i'' -e 's/llama_/gptjllama_/g' {} +
	@find ./gpt4all -type f -name "*.txt" -exec sed -i'' -e 's/llama_/gptjllama_/g' {} +
	@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/json_/json_gptj_/g' {} +
	@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/void replace/void json_gptj_replace/g' {} +
	@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/::replace/::json_gptj_replace/g' {} +
	@find ./gpt4all -type f -name "*.cpp" -exec sed -i'' -e 's/regex_escape/gpt4allregex_escape/g' {} +
	mv ./gpt4all/gpt4all-backend/llama.cpp/llama_util.h ./gpt4all/gpt4all-backend/llama.cpp/gptjllama_util.h
```
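Each vendored binding ships its own copy of ggml. The recipes therefore give each binding's symbols their own prefix, so the copies do not collide at link time. The Go sketch below replays three of the `go-gpt4all-j` substitutions in order. It relies on two assumptions:

- Literal replacement is equivalent to sed here, because none of these patterns contain regex metacharacters.
- The per-extension filtering that `find -name` performs can be ignored.

```go
package main

import (
	"fmt"
	"strings"
)

// rule is one `sed -e 's/from/to/g'` substitution, applied literally.
type rule struct{ from, to string }

// A subset of the renames applied to ./go-gpt4all-j, in Makefile order.
var gptjRules = []rule{
	{"ggml_", "ggml_gptj_"},
	{"gpt_", "gptj_"},
	{"json_", "json_gptj_"},
}

// apply runs every rule over src in order, like the chain of find/sed calls.
func apply(src string, rules []rule) string {
	for _, r := range rules {
		src = strings.ReplaceAll(src, r.from, r.to)
	}
	return src
}

func main() {
	src := "ggml_init(); gpt_params p; json_parse();"
	once := apply(src, gptjRules)
	fmt.Println(once)
	fmt.Println(apply(once, gptjRules)) // a second pass prefixes again
}
```

The second pass shows that the rewrite is not idempotent. The `gpt4all` and `go-gpt4all-j` targets are named after the directories they create and have no prerequisites. Once those directories exist, make skips their recipes, which keeps the renames from being applied twice.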

|

||||

|

||||

## BERT embeddings

|

||||

go-bert:

|

||||

git clone --recurse-submodules https://github.com/go-skynet/go-bert.cpp go-bert

|

||||

cd go-bert && git checkout -b build $(BERT_VERSION) && git submodule update --init --recursive --depth 1

|

||||

@find ./go-bert -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_bert_/g' {} +

|

||||

@find ./go-bert -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_bert_/g' {} +

|

||||

@find ./go-bert -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_bert_/g' {} +

|

||||

|

||||

## stable diffusion

|

||||

go-stable-diffusion:

|

||||

git clone --recurse-submodules https://github.com/mudler/go-stable-diffusion go-stable-diffusion

|

||||

cd go-stable-diffusion && git checkout -b build $(STABLEDIFFUSION_VERSION) && git submodule update --init --recursive --depth 1

|

||||

|

||||

go-stable-diffusion/libstablediffusion.a:

|

||||

$(MAKE) -C go-stable-diffusion libstablediffusion.a

|

||||

|

||||

## RWKV

|

||||

go-rwkv:

|

||||

git clone --recurse-submodules $(RWKV_REPO) go-rwkv

|

||||

cd go-rwkv && git checkout -b build $(RWKV_VERSION) && git submodule update --init --recursive --depth 1

|

||||

@find ./go-rwkv -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_rwkv_/g' {} +

|

||||

@find ./go-rwkv -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_rwkv_/g' {} +

|

||||

@find ./go-rwkv -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_rwkv_/g' {} +

|

||||

|

||||

go-rwkv/librwkv.a: go-rwkv

|

||||

cd go-rwkv && cd rwkv.cpp && cmake . -DRWKV_BUILD_SHARED_LIBRARY=OFF && cmake --build . && cp librwkv.a .. && cp ggml/src/libggml.a ..

|

||||

|

||||

go-gpt4all-j/libgptj.a: go-gpt4all-j

|

||||

$(MAKE) -C go-gpt4all-j $(GENERIC_PREFIX)libgptj.a

|

||||

## bloomz

|

||||

bloomz:

|

||||

git clone --recurse-submodules https://github.com/go-skynet/bloomz.cpp bloomz

|

||||

@find ./bloomz -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_bloomz_/g' {} +

|

||||

@find ./bloomz -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_bloomz_/g' {} +

|

||||

@find ./bloomz -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_bloomz_/g' {} +

|

||||

@find ./bloomz -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_/gpt_bloomz_/g' {} +

|

||||

@find ./bloomz -type f -name "*.h" -exec sed -i'' -e 's/gpt_/gpt_bloomz_/g' {} +

|

||||

@find ./bloomz -type f -name "*.cpp" -exec sed -i'' -e 's/void replace/void json_bloomz_replace/g' {} +

|

||||

@find ./bloomz -type f -name "*.cpp" -exec sed -i'' -e 's/::replace/::json_bloomz_replace/g' {} +

|

||||

|

||||

bloomz/libbloomz.a: bloomz

|

||||

cd bloomz && make libbloomz.a

|

||||

|

||||

go-bert/libgobert.a: go-bert

|

||||

$(MAKE) -C go-bert libgobert.a

|

||||

|

||||

gpt4all/gpt4all-bindings/golang/libgpt4all.a: gpt4all

|

||||

$(MAKE) -C gpt4all/gpt4all-bindings/golang/ libgpt4all.a

|

||||

|

||||

## CEREBRAS GPT

go-gpt2:

git clone --recurse-submodules https://github.com/go-skynet/go-gpt2.cpp go-gpt2

cd go-gpt2 && git checkout -b build $(GOGPT2_VERSION) && git submodule update --init --recursive --depth 1

go-ggml-transformers:

git clone --recurse-submodules https://github.com/go-skynet/go-ggml-transformers.cpp go-ggml-transformers

cd go-ggml-transformers && git checkout -b build $(GOGPT2_VERSION) && git submodule update --init --recursive --depth 1

# This is hackish, but needed as both go-llama and go-gpt4all-j have their own version of ggml.

@find ./go-gpt2 -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_gpt2_/g' {} +

@find ./go-gpt2 -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_gpt2_/g' {} +

@find ./go-gpt2 -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_gpt2_/g' {} +

@find ./go-gpt2 -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_/gpt2_/g' {} +

@find ./go-gpt2 -type f -name "*.h" -exec sed -i'' -e 's/gpt_/gpt2_/g' {} +

@find ./go-gpt2 -type f -name "*.cpp" -exec sed -i'' -e 's/json_/json_gpt2_/g' {} +

@find ./go-ggml-transformers -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_gpt2_/g' {} +

@find ./go-ggml-transformers -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_gpt2_/g' {} +

@find ./go-ggml-transformers -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_gpt2_/g' {} +

@find ./go-ggml-transformers -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_print_usage/gpt2_print_usage/g' {} +

@find ./go-ggml-transformers -type f -name "*.h" -exec sed -i'' -e 's/gpt_print_usage/gpt2_print_usage/g' {} +

@find ./go-ggml-transformers -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_params_parse/gpt2_params_parse/g' {} +

@find ./go-ggml-transformers -type f -name "*.h" -exec sed -i'' -e 's/gpt_params_parse/gpt2_params_parse/g' {} +

@find ./go-ggml-transformers -type f -name "*.cpp" -exec sed -i'' -e 's/gpt_random_prompt/gpt2_random_prompt/g' {} +

@find ./go-ggml-transformers -type f -name "*.h" -exec sed -i'' -e 's/gpt_random_prompt/gpt2_random_prompt/g' {} +

@find ./go-ggml-transformers -type f -name "*.cpp" -exec sed -i'' -e 's/json_/json_gpt2_/g' {} +

go-gpt2/libgpt2.a: go-gpt2

$(MAKE) -C go-gpt2 $(GENERIC_PREFIX)libgpt2.a

go-ggml-transformers/libtransformers.a: go-ggml-transformers

$(MAKE) -C go-ggml-transformers libtransformers.a

whisper.cpp:

git clone https://github.com/ggerganov/whisper.cpp.git

cd whisper.cpp && git checkout -b build $(WHISPER_CPP_VERSION) && git submodule update --init --recursive --depth 1

@find ./whisper.cpp -type f -name "*.c" -exec sed -i'' -e 's/ggml_/ggml_whisper_/g' {} +

@find ./whisper.cpp -type f -name "*.cpp" -exec sed -i'' -e 's/ggml_/ggml_whisper_/g' {} +

@find ./whisper.cpp -type f -name "*.h" -exec sed -i'' -e 's/ggml_/ggml_whisper_/g' {} +

whisper.cpp/libwhisper.a: whisper.cpp

cd whisper.cpp && make libwhisper.a

go-llama:

git clone --recurse-submodules https://github.com/go-skynet/go-llama.cpp go-llama

cd go-llama && git checkout -b build $(GOLLAMA_VERSION) && git submodule update --init --recursive --depth 1

go-llama/libbinding.a: go-llama

$(MAKE) -C go-llama $(GENERIC_PREFIX)libbinding.a

$(MAKE) -C go-llama BUILD_TYPE=$(BUILD_TYPE) libbinding.a

replace:

$(GOCMD) mod edit -replace github.com/go-skynet/go-llama.cpp=$(shell pwd)/go-llama

$(GOCMD) mod edit -replace github.com/go-skynet/go-gpt4all-j.cpp=$(shell pwd)/go-gpt4all-j

$(GOCMD) mod edit -replace github.com/go-skynet/go-gpt2.cpp=$(shell pwd)/go-gpt2

$(GOCMD) mod edit -replace github.com/nomic-ai/gpt4all/gpt4all-bindings/golang=$(shell pwd)/gpt4all/gpt4all-bindings/golang

$(GOCMD) mod edit -replace github.com/go-skynet/go-ggml-transformers.cpp=$(shell pwd)/go-ggml-transformers

$(GOCMD) mod edit -replace github.com/donomii/go-rwkv.cpp=$(shell pwd)/go-rwkv

$(GOCMD) mod edit -replace github.com/ggerganov/whisper.cpp=$(shell pwd)/whisper.cpp

$(GOCMD) mod edit -replace github.com/go-skynet/go-bert.cpp=$(shell pwd)/go-bert

$(GOCMD) mod edit -replace github.com/go-skynet/bloomz.cpp=$(shell pwd)/bloomz

$(GOCMD) mod edit -replace github.com/mudler/go-stable-diffusion=$(shell pwd)/go-stable-diffusion

prepare-sources: go-llama go-gpt2 go-gpt4all-j go-rwkv

prepare-sources: go-llama go-ggml-transformers gpt4all go-rwkv whisper.cpp go-bert bloomz go-stable-diffusion replace

$(GOCMD) mod download

## GENERIC

rebuild: ## Rebuilds the project

$(MAKE) -C go-llama clean

$(MAKE) -C go-gpt4all-j clean

$(MAKE) -C go-gpt2 clean

$(MAKE) -C gpt4all/gpt4all-bindings/golang/ clean

$(MAKE) -C go-ggml-transformers clean

$(MAKE) -C go-rwkv clean

$(MAKE) -C whisper.cpp clean

$(MAKE) -C go-stable-diffusion clean

$(MAKE) -C go-bert clean

$(MAKE) -C bloomz clean

$(MAKE) build

prepare: prepare-sources go-llama/libbinding.a go-gpt4all-j/libgptj.a go-gpt2/libgpt2.a go-rwkv/librwkv.a replace ## Prepares for building

prepare: prepare-sources gpt4all/gpt4all-bindings/golang/libgpt4all.a $(OPTIONAL_TARGETS) go-llama/libbinding.a go-bert/libgobert.a go-ggml-transformers/libtransformers.a go-rwkv/librwkv.a whisper.cpp/libwhisper.a bloomz/libbloomz.a ## Prepares for building

clean: ## Remove build related file

rm -fr ./go-llama

rm -rf ./go-gpt4all-j

rm -rf ./go-gpt2

rm -rf ./gpt4all

rm -rf ./go-stable-diffusion

rm -rf ./go-ggml-transformers

rm -rf ./go-rwkv

rm -rf ./go-bert

rm -rf ./bloomz

rm -rf ./whisper.cpp

rm -rf $(BINARY_NAME)

rm -rf release/

## Build:

build: prepare ## Build the project

$(info ${GREEN}I local-ai build info:${RESET})

$(info ${GREEN}I BUILD_TYPE: ${YELLOW}$(BUILD_TYPE)${RESET})

C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} $(GOCMD) build -o $(BINARY_NAME) ./

$(info ${GREEN}I GO_TAGS: ${YELLOW}$(GO_TAGS)${RESET})

CGO_LDFLAGS="$(CGO_LDFLAGS)" C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} $(GOCMD) build -ldflags "$(LD_FLAGS)" -tags "$(GO_TAGS)" -o $(BINARY_NAME) ./

dist: build

mkdir -p release

cp $(BINARY_NAME) release/$(BINARY_NAME)-$(BUILD_ID)-$(OS)-$(ARCH)

generic-build: ## Build the project using generic

BUILD_TYPE="generic" $(MAKE) build

## Run

run: prepare ## run local-ai

C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} $(GOCMD) run ./main.go

CGO_LDFLAGS="$(CGO_LDFLAGS)" C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} $(GOCMD) run ./main.go

test-models/testmodel:

mkdir test-models

wget https://huggingface.co/concedo/cerebras-111M-ggml/resolve/main/cerberas-111m-q4_0.bin -O test-models/testmodel

cp tests/fixtures/* test-models

mkdir test-dir

wget https://huggingface.co/nnakasato/ggml-model-test/resolve/main/ggml-model-q4.bin -O test-models/testmodel

wget https://huggingface.co/ggerganov/whisper.cpp/resolve/main/ggml-base.en.bin -O test-models/whisper-en

wget https://huggingface.co/skeskinen/ggml/resolve/main/all-MiniLM-L6-v2/ggml-model-q4_0.bin -O test-models/bert

wget https://cdn.openai.com/whisper/draft-20220913a/micro-machines.wav -O test-dir/audio.wav

wget https://huggingface.co/mudler/rwkv-4-raven-1.5B-ggml/resolve/main/RWKV-4-Raven-1B5-v11-Eng99%2525-Other1%2525-20230425-ctx4096_Q4_0.bin -O test-models/rwkv

wget https://raw.githubusercontent.com/saharNooby/rwkv.cpp/5eb8f09c146ea8124633ab041d9ea0b1f1db4459/rwkv/20B_tokenizer.json -O test-models/rwkv.tokenizer.json

cp tests/models_fixtures/* test-models

test: prepare test-models/testmodel

cp tests/fixtures/* test-models

@C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} CONFIG_FILE=$(abspath ./)/test-models/config.yaml MODELS_PATH=$(abspath ./)/test-models $(GOCMD) test -v -timeout 30m ./...

cp tests/models_fixtures/* test-models

C_INCLUDE_PATH=${C_INCLUDE_PATH} LIBRARY_PATH=${LIBRARY_PATH} TEST_DIR=$(abspath ./)/test-dir/ FIXTURES=$(abspath ./)/tests/fixtures CONFIG_FILE=$(abspath ./)/test-models/config.yaml MODELS_PATH=$(abspath ./)/test-models $(GOCMD) run github.com/onsi/ginkgo/v2/ginkgo --flakeAttempts 5 -v -r ./api ./pkg

## Help:

help: ## Show this help.
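
The `find ... -exec sed` recipes in the Makefile give each vendored copy of ggml its own symbol prefix (`ggml_bloomz_`, `ggml_gpt2_`, `ggml_whisper_`), presumably so that several ggml builds can be linked into one binary without duplicate symbols. The Go sketch below shows what one such substitution does to a line of C source. It assumes the pattern is a plain literal with no regex metacharacters, which holds for `ggml_` and `gpt_`; under that assumption `strings.ReplaceAll` behaves like `sed 's/from/to/g'`. The source line and the `prefixSymbols` helper are hypothetical. The sketch also shows why the substitution must run only once: applying it twice prefixes the symbols twice. Because the recipes live in the clone targets, they normally run once per checkout.

```go
package main

import (
	"fmt"
	"strings"
)

// prefixSymbols mirrors `sed -e 's/<from>/<to>/g'` for a literal pattern:
// every non-overlapping occurrence of from, scanned left to right, is
// replaced by to.
func prefixSymbols(src, from, to string) string {
	return strings.ReplaceAll(src, from, to)
}

func main() {
	// Hypothetical line from a vendored ggml source file.
	line := "struct ggml_context * ctx = ggml_init(params);"

	once := prefixSymbols(line, "ggml_", "ggml_bloomz_")
	fmt.Println(once)
	// struct ggml_bloomz_context * ctx = ggml_bloomz_init(params);

	// The substitution is not idempotent: a second run prefixes again.
	twice := prefixSymbols(once, "ggml_", "ggml_bloomz_")
	fmt.Println(twice)
	// struct ggml_bloomz_bloomz_context * ctx = ggml_bloomz_bloomz_init(params);
}
```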

README.md

@@ -9,30 +9,77 @@

[](https://discord.gg/uJAeKSAGDy)

**LocalAI** is a drop-in replacement REST API compatible with OpenAI for local CPU inferencing. It allows you to run models locally or on-prem with consumer-grade hardware. It is based on [llama.cpp](https://github.com/ggerganov/llama.cpp), [gpt4all](https://github.com/nomic-ai/gpt4all), [rwkv.cpp](https://github.com/saharNooby/rwkv.cpp) and [ggml](https://github.com/ggerganov/ggml), including support for GPT4ALL-J, which is licensed under Apache 2.0.

**LocalAI** is a drop-in replacement REST API that's compatible with OpenAI API specifications for local inferencing. It allows you to run models locally or on-prem with consumer-grade hardware, supporting multiple model families that are compatible with the ggml format.

- OpenAI compatible API

- Supports multiple models

For a list of the supported model families, please see [the model compatibility table below](https://github.com/go-skynet/LocalAI#model-compatibility-table).

In a nutshell:

- Local, OpenAI drop-in alternative REST API. You own your data.

- NO GPU required. NO Internet access is required either. Optionally, GPU acceleration is available for `llama.cpp`-compatible LLMs. [See building instructions](https://github.com/go-skynet/LocalAI#cublas).

- Supports multiple models, audio transcription, text generation with GPTs, and image generation with Stable Diffusion (experimental)

- Once loaded the first time, models are kept in memory for faster inference

- Support for prompt templates

- Doesn't shell-out, but uses C bindings for faster inference and better performance.

- Doesn't shell-out, but uses C++ bindings for faster inference and better performance.

LocalAI is a community-driven project focused on making AI accessible to anyone. Any contribution, feedback, and PR is welcome! It was initially created by [mudler](https://github.com/mudler/) at the [SpectroCloud OSS Office](https://github.com/spectrocloud).

### News

See the [usage](https://github.com/go-skynet/LocalAI#usage) and [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/) sections to learn how to use LocalAI. For a list of curated models, check out the [model gallery](https://github.com/go-skynet/model-gallery).

### How does it work?

<details>

LocalAI is an API written in Go that serves as an OpenAI shim, enabling software already developed with OpenAI SDKs to seamlessly integrate with LocalAI. It can be effortlessly implemented as a substitute, even on consumer-grade hardware. This capability is achieved by employing various C++ backends, including [ggml](https://github.com/ggerganov/ggml), to perform inference on LLMs using both CPU and, if desired, GPU.

LocalAI uses C++ bindings for optimizing speed. It is based on [llama.cpp](https://github.com/ggerganov/llama.cpp), [gpt4all](https://github.com/nomic-ai/gpt4all), [rwkv.cpp](https://github.com/saharNooby/rwkv.cpp), [ggml](https://github.com/ggerganov/ggml), [whisper.cpp](https://github.com/ggerganov/whisper.cpp) for audio transcriptions, [bert.cpp](https://github.com/skeskinen/bert.cpp) for embeddings and [StableDiffusion-NCNN](https://github.com/EdVince/Stable-Diffusion-NCNN) for image generation. See [the model compatibility table](https://github.com/go-skynet/LocalAI#model-compatibility-table) to learn about all the components of LocalAI.

</details>
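
Because LocalAI exposes OpenAI-compatible endpoints, an existing client only needs to point its requests at the local server. The minimal Go sketch below uses only the standard library. It assumes LocalAI is listening on `localhost:8080` and serves a model named `ggml-gpt4all-j`; both values are taken from the usage examples in this README. The request struct covers only the `model`, `messages` and `temperature` fields of an OpenAI chat request, not the full schema. The helper name `chatBody` is hypothetical.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
	"time"
)

// chatMessage and chatRequest cover only the subset of the OpenAI chat
// completion request used in this sketch.
type chatMessage struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

type chatRequest struct {
	Model       string        `json:"model"`
	Messages    []chatMessage `json:"messages"`
	Temperature float64       `json:"temperature"`
}

// chatBody serializes a single-turn chat request.
func chatBody(model, prompt string, temperature float64) ([]byte, error) {
	return json.Marshal(chatRequest{
		Model:       model,
		Messages:    []chatMessage{{Role: "user", Content: prompt}},
		Temperature: temperature,
	})
}

func main() {
	body, err := chatBody("ggml-gpt4all-j", "How are you?", 0.9)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	// Local CPU inference can be slow, so allow a generous timeout.
	client := &http.Client{Timeout: 5 * time.Minute}
	resp, err := client.Post("http://localhost:8080/v1/chat/completions",
		"application/json", bytes.NewReader(body))
	if err != nil {
		fmt.Fprintln(os.Stderr, "request failed:", err)
		os.Exit(1)
	}
	defer resp.Body.Close()
	out, err := io.ReadAll(resp.Body)
	if err != nil {
		fmt.Fprintln(os.Stderr, "reading response:", err)
		os.Exit(1)
	}
	fmt.Println(resp.Status)
	fmt.Println(string(out))
}
```

Existing OpenAI SDKs work the same way: the shim means only the base URL changes.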

## News

- 23-05-2023: __v1.15.0__ released. The `go-gpt2.cpp` backend was renamed to `go-ggml-transformers.cpp` and updated to include https://github.com/ggerganov/llama.cpp/pull/1508, which breaks compatibility with older models. This impacts RedPajama, GptNeoX, MPT (not `gpt4all-mpt`), Dolly, GPT2 and Starcoder based models. [Binary releases are available](https://github.com/go-skynet/LocalAI/releases), along with various fixes, including https://github.com/go-skynet/LocalAI/pull/341.

- 21-05-2023: __v1.14.0__ released. Minor updates to the `/models/apply` endpoint; the `llama.cpp` backend was updated to include https://github.com/ggerganov/llama.cpp/pull/1508, which breaks compatibility with older models. `gpt4all` is still compatible with the old format.

- 19-05-2023: __v1.13.0__ released! 🔥🔥 Updates to the `gpt4all` and `llama` backends, consolidated CUDA support ( https://github.com/go-skynet/LocalAI/pull/310 thanks to @bubthegreat and @Thireus ), and preliminary support for [installing models via API](https://github.com/go-skynet/LocalAI#advanced-prepare-models-using-the-api).

- 17-05-2023: __v1.12.0__ released! 🔥🔥 Minor fixes, plus CUDA (https://github.com/go-skynet/LocalAI/pull/258) support for `llama.cpp`-compatible models and image generation (https://github.com/go-skynet/LocalAI/pull/272).

- 16-05-2023: 🔥🔥🔥 Experimental support for CUDA (https://github.com/go-skynet/LocalAI/pull/258) in the `llama.cpp` backend and Stable Diffusion CPU image generation (https://github.com/go-skynet/LocalAI/pull/272) in `master`.

Now LocalAI can generate images too:

*Image: example generations with `mode=0` and `mode=1` (winograd/sgemm).*

- 14-05-2023: __v1.11.1__ released! `rwkv` backend patch release

- 13-05-2023: __v1.11.0__ released! 🔥 Updated `llama.cpp` bindings: This update includes a breaking change in the model files ( https://github.com/ggerganov/llama.cpp/pull/1405 ); old models should still work with the `gpt4all-llama` backend.

- 12-05-2023: __v1.10.0__ released! 🔥🔥 Updated `gpt4all` bindings. Added support for GPTNeox (experimental), RedPajama (experimental), Starcoder (experimental), Replit (experimental), MosaicML MPT. The `embeddings` endpoint now also supports token arrays. See the [langchain-chroma](https://github.com/go-skynet/LocalAI/tree/master/examples/langchain-chroma) example! Note: this update does NOT include https://github.com/ggerganov/llama.cpp/pull/1405, which makes models incompatible.

- 11-05-2023: __v1.9.0__ released! 🔥 Important whisper updates ( https://github.com/go-skynet/LocalAI/pull/233 https://github.com/go-skynet/LocalAI/pull/229 ) and extended gpt4all model families support ( https://github.com/go-skynet/LocalAI/pull/232 ). Experimental RedPajama/Dolly support ( https://github.com/go-skynet/LocalAI/pull/214 )

- 10-05-2023: __v1.8.0__ released! 🔥 Added support for fast and accurate embeddings with `bert.cpp` ( https://github.com/go-skynet/LocalAI/pull/222 )

- 09-05-2023: Added experimental support for transcriptions endpoint ( https://github.com/go-skynet/LocalAI/pull/211 )

- 08-05-2023: Support for embeddings with models using the `llama.cpp` backend ( https://github.com/go-skynet/LocalAI/pull/207 )

- 02-05-2023: Support for `rwkv.cpp` models ( https://github.com/go-skynet/LocalAI/pull/158 ) and for `/edits` endpoint

- 01-05-2023: Support for SSE stream of tokens in `llama.cpp` backends ( https://github.com/go-skynet/LocalAI/pull/152 )

### Socials and community chatter

Twitter: [@LocalAI_API](https://twitter.com/LocalAI_API) and [@mudler_it](https://twitter.com/mudler_it)

- Follow [@LocalAI_API](https://twitter.com/LocalAI_API) on Twitter.

### Blogs, articles, media

- [Reddit post](https://www.reddit.com/r/selfhosted/comments/12w4p2f/localai_openai_compatible_api_to_run_llm_models/) about LocalAI.

- [LocalAI meets k8sgpt](https://www.youtube.com/watch?v=PKrDNuJ_dfE) - CNCF Webinar showcasing LocalAI and k8sgpt.

- [Question Answering on Documents locally with LangChain, LocalAI, Chroma, and GPT4All](https://mudler.pm/posts/localai-question-answering/) by Ettore Di Giacinto

- [Tutorial to use k8sgpt with LocalAI](https://medium.com/@tyler_97636/k8sgpt-localai-unlock-kubernetes-superpowers-for-free-584790de9b65) - an excellent use case for LocalAI, using AI to analyse Kubernetes clusters, by Tyler Gillson

## Contribute and help

To help the project you can:

- Upvote the [Reddit post](https://www.reddit.com/r/selfhosted/comments/12w4p2f/localai_openai_compatible_api_to_run_llm_models/) about LocalAI.

- [Hacker News post](https://news.ycombinator.com/item?id=35726934) - help us out by voting if you like this project.

- [Tutorial to use k8sgpt with LocalAI](https://medium.com/@tyler_97636/k8sgpt-localai-unlock-kubernetes-superpowers-for-free-584790de9b65) - an excellent use case for LocalAI, using AI to analyse Kubernetes clusters.

- If you have technological skills and want to contribute to development, have a look at the open issues. If you are new, you can have a look at the [good-first-issue](https://github.com/go-skynet/LocalAI/issues?q=is%3Aissue+is%3Aopen+label%3A%22good+first+issue%22) and [help-wanted](https://github.com/go-skynet/LocalAI/issues?q=is%3Aissue+is%3Aopen+label%3A%22help+wanted%22) labels.

- If you don't have technological skills, you can still help by improving documentation, adding examples, or sharing your user stories with our community. Any help and contribution is welcome!

## Model compatibility

@@ -41,24 +88,14 @@ It is compatible with the models supported by [llama.cpp](https://github.com/gge

Tested with:

- Vicuna

- Alpaca

- [GPT4ALL](https://github.com/nomic-ai/gpt4all)

- [GPT4ALL-J](https://gpt4all.io/models/ggml-gpt4all-j.bin)

- [GPT4ALL](https://gpt4all.io)

- [GPT4ALL-J](https://gpt4all.io/models/ggml-gpt4all-j.bin) (no changes required)

- Koala

- [cerebras-GPT with ggml](https://huggingface.co/lxe/Cerebras-GPT-2.7B-Alpaca-SP-ggml)

- WizardLM

- [RWKV](https://github.com/BlinkDL/RWKV-LM) models with [rwkv.cpp](https://github.com/saharNooby/rwkv.cpp)

### Vicuna, Alpaca, LLaMa...

[llama.cpp](https://github.com/ggerganov/llama.cpp)-based models are compatible.

### GPT4ALL

Note: You might need to convert older models to the new format; see [here](https://github.com/ggerganov/llama.cpp#using-gpt4all), for instance, to run `gpt4all`.

### GPT4ALL-J

No changes required to the model.

Note: You might need to convert some older models to the new format. For instructions, see [the README in llama.cpp](https://github.com/ggerganov/llama.cpp#using-gpt4all), for instance to run `gpt4all`.

### RWKV

@@ -66,7 +103,7 @@ No changes required to the model.

A full example of how to run an RWKV model is in the [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/rwkv).

Note: rwkv models have an associated tokenizer that needs to be provided along with them:

Note: rwkv models need to specify the backend `rwkv` in the YAML config files and have an associated tokenizer that needs to be provided along with them:

```
36464540 -rw-r--r-- 1 mudler mudler 1.2G May 3 10:51 rwkv_small
```

@@ -84,11 +121,35 @@ It should also be compatible with StableLM and GPTNeoX ggml models (untested).

Depending on the model you are attempting to run, you might need more RAM or CPU resources. See also [here](https://github.com/ggerganov/llama.cpp#memorydisk-requirements) for `ggml`-based backends. `rwkv` is less resource-intensive.

### Model compatibility table

<details>

| Backend and Bindings | Compatible models | Completion/Chat endpoint | Audio transcription/Image | Embeddings support | Token stream support |
|----------------------------------------------------------------------------------|-----------------------|--------------------------|---------------------------|-----------------------------------|----------------------|
| [llama](https://github.com/ggerganov/llama.cpp) ([binding](https://github.com/go-skynet/go-llama.cpp)) | Vicuna, Alpaca, LLaMa | yes | no | yes (doesn't seem to be accurate) | yes |
| [gpt4all-llama](https://github.com/nomic-ai/gpt4all) | Vicuna, Alpaca, LLaMa | yes | no | no | yes |
| [gpt4all-mpt](https://github.com/nomic-ai/gpt4all) | MPT | yes | no | no | yes |
| [gpt4all-j](https://github.com/nomic-ai/gpt4all) | GPT4ALL-J | yes | no | no | yes |
| [gpt2](https://github.com/ggerganov/ggml) ([binding](https://github.com/go-skynet/go-ggml-transformers.cpp)) | GPT2, Cerebras | yes | no | no | no |
| [dolly](https://github.com/ggerganov/ggml) ([binding](https://github.com/go-skynet/go-ggml-transformers.cpp)) | Dolly | yes | no | no | no |
| [gptj](https://github.com/ggerganov/ggml) ([binding](https://github.com/go-skynet/go-ggml-transformers.cpp)) | GPTJ | yes | no | no | no |
| [mpt](https://github.com/ggerganov/ggml) ([binding](https://github.com/go-skynet/go-ggml-transformers.cpp)) | MPT | yes | no | no | no |
| [replit](https://github.com/ggerganov/ggml) ([binding](https://github.com/go-skynet/go-ggml-transformers.cpp)) | Replit | yes | no | no | no |
| [gptneox](https://github.com/ggerganov/ggml) ([binding](https://github.com/go-skynet/go-ggml-transformers.cpp)) | GPT NeoX, RedPajama, StableLM | yes | no | no | no |
| [starcoder](https://github.com/ggerganov/ggml) ([binding](https://github.com/go-skynet/go-ggml-transformers.cpp)) | Starcoder | yes | no | no | no |
| [bloomz](https://github.com/NouamaneTazi/bloomz.cpp) ([binding](https://github.com/go-skynet/bloomz.cpp)) | Bloom | yes | no | no | no |
| [rwkv](https://github.com/saharNooby/rwkv.cpp) ([binding](https://github.com/donomii/go-rwkv.cpp)) | rwkv | yes | no | no | yes |
| [bert](https://github.com/skeskinen/bert.cpp) ([binding](https://github.com/go-skynet/go-bert.cpp)) | bert | no | no | yes | no |
| [whisper](https://github.com/ggerganov/whisper.cpp) | whisper | no | Audio | no | no |
| [stablediffusion](https://github.com/EdVince/Stable-Diffusion-NCNN) ([binding](https://github.com/mudler/go-stable-diffusion)) | stablediffusion | no | Image | no | no |

</details>

## Usage

> `LocalAI` comes by default as a container image. You can check out all the available images with corresponding tags [here](https://quay.io/repository/go-skynet/local-ai?tab=tags&tag=latest).

The easiest way to run LocalAI is by using `docker-compose`:

The easiest way to run LocalAI is by using `docker-compose` (to build locally, see [building LocalAI](https://github.com/go-skynet/LocalAI/tree/master#setup)):

```bash

@@ -106,7 +167,9 @@ cp your-model.bin models/
# vim .env

# start with docker-compose
docker-compose up -d --build
docker-compose up -d --pull always
# or you can build the images with:
# docker-compose up -d --build

# Now the API is accessible at localhost:8080
curl http://localhost:8080/v1/models
@@ -142,8 +205,9 @@ cp -rf prompt-templates/ggml-gpt4all-j.tmpl models/
# vim .env

# start with docker-compose
docker-compose up -d --build

docker-compose up -d --pull always
# or you can build the images with:
# docker-compose up -d --build
# Now the API is accessible at localhost:8080
curl http://localhost:8080/v1/models
# {"object":"list","data":[{"id":"ggml-gpt4all-j","object":"model"}]}
@@ -158,11 +222,31 @@ curl http://localhost:8080/v1/chat/completions -H "Content-Type: application/jso
```

</details>
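
The `/v1/models` response shown in the `curl` example is an OpenAI-style list. The small Go sketch below fetches that list and prints the model IDs. It assumes the same `localhost:8080` address and models only the fields visible in the sample output (`object`, `data[].id`, `data[].object`). The helper name `modelIDs` is hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
)

// modelList matches the sample /v1/models output:
// {"object":"list","data":[{"id":"ggml-gpt4all-j","object":"model"}]}
type modelList struct {
	Object string `json:"object"`
	Data   []struct {
		ID     string `json:"id"`
		Object string `json:"object"`
	} `json:"data"`
}

// modelIDs returns the model IDs contained in a /v1/models response body.
func modelIDs(body []byte) ([]string, error) {
	var list modelList
	if err := json.Unmarshal(body, &list); err != nil {
		return nil, err
	}
	ids := make([]string, 0, len(list.Data))
	for _, m := range list.Data {
		ids = append(ids, m.ID)
	}
	return ids, nil
}

func main() {
	resp, err := http.Get("http://localhost:8080/v1/models")
	if err != nil {
		fmt.Fprintln(os.Stderr, "request failed:", err)
		os.Exit(1)
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		fmt.Fprintln(os.Stderr, "reading response:", err)
		os.Exit(1)
	}
	ids, err := modelIDs(body)
	if err != nil {
		fmt.Fprintln(os.Stderr, "decoding response:", err)
		os.Exit(1)
	}
	for _, id := range ids {
		fmt.Println(id)
	}
}
```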

To build locally, run `make build` (see below).

### Advanced: prepare models using the API

Instead of installing models manually, you can use the LocalAI API endpoints and a model definition to install models programmatically at runtime.

<details>

A curated collection of model files is in the [model-gallery](https://github.com/go-skynet/model-gallery) (work in progress!).

To install, for example, `gpt4all-j`, you can send a POST request to the `/models/apply` endpoint with the model definition URL (`url`) and the name the model should have in LocalAI (`name`, optional):

```bash
curl http://localhost:8080/models/apply -H "Content-Type: application/json" -d '{
  "url": "https://raw.githubusercontent.com/go-skynet/model-gallery/main/gpt4all-j.yaml",
  "name": "gpt4all-j"
}'
```

</details>
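
The same `/models/apply` call can be made from Go. The sketch below builds the JSON body with `url` and an optional `name`. The `omitempty` tag drops `name` when it is empty, matching the note that `name` is optional. The program then prints whatever the server returns. It assumes LocalAI is listening on `localhost:8080` and makes no assumption about the shape of the response. The names `applyRequest` and `applyBody` are hypothetical.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
)

// applyRequest is the body sent to POST /models/apply as described above:
// a model definition URL and an optional local model name.
type applyRequest struct {
	URL  string `json:"url"`
	Name string `json:"name,omitempty"`
}

// applyBody serializes the request; Name is omitted when empty.
func applyBody(url, name string) ([]byte, error) {
	return json.Marshal(applyRequest{URL: url, Name: name})
}

func main() {
	body, err := applyBody(
		"https://raw.githubusercontent.com/go-skynet/model-gallery/main/gpt4all-j.yaml",
		"gpt4all-j",
	)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	// Assumes LocalAI is listening on localhost:8080.
	resp, err := http.Post("http://localhost:8080/models/apply",
		"application/json", bytes.NewReader(body))
	if err != nil {
		fmt.Fprintln(os.Stderr, "request failed:", err)
		os.Exit(1)
	}
	defer resp.Body.Close()
	out, err := io.ReadAll(resp.Body)
	if err != nil {
		fmt.Fprintln(os.Stderr, "reading response:", err)
		os.Exit(1)
	}
	fmt.Println(resp.Status)
	fmt.Println(string(out))
}
```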

|

||||

|

||||

|

||||

### Other examples

|

||||

|

||||

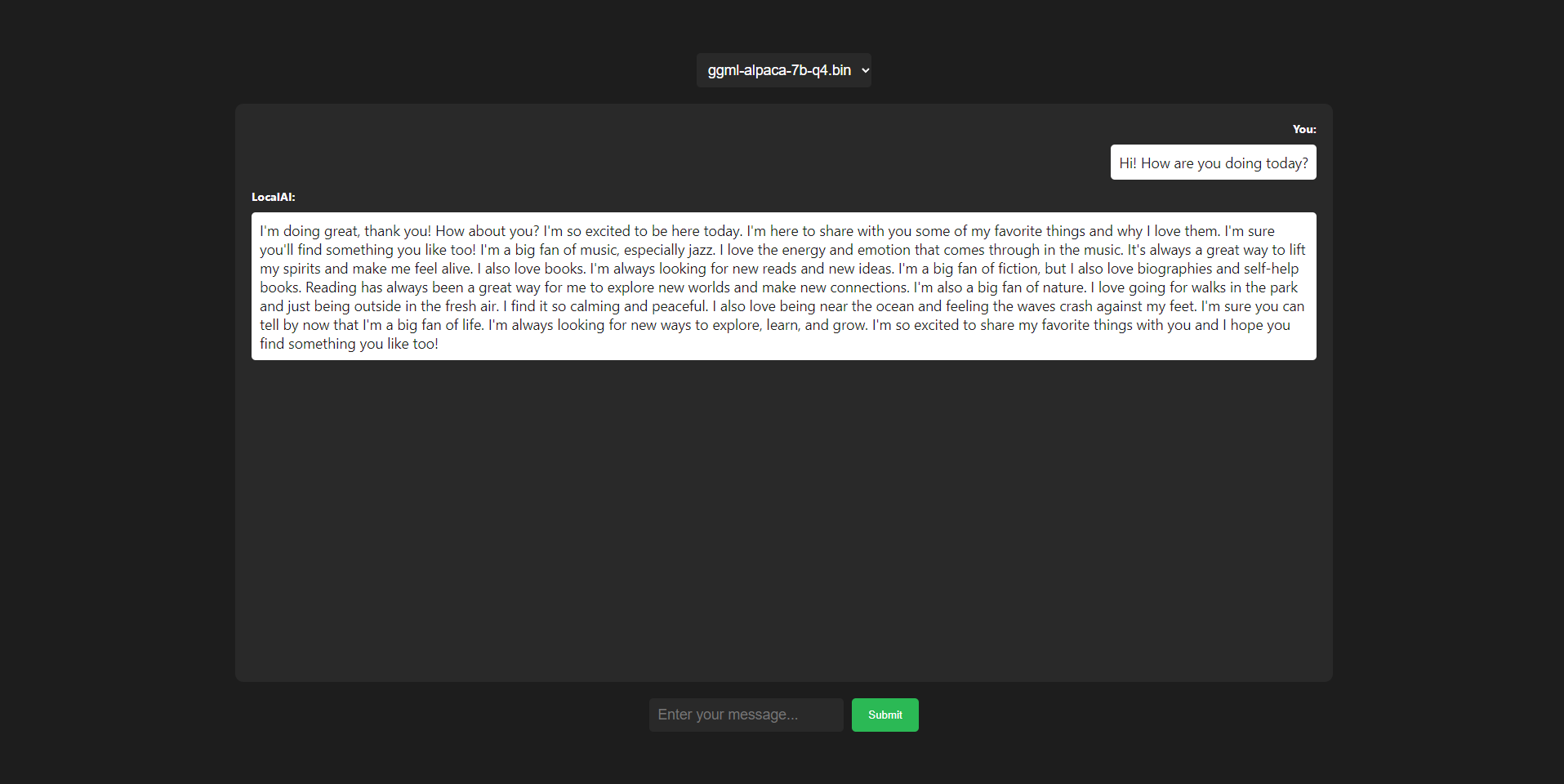

To see other examples on how to integrate with other projects for instance chatbot-ui, see: [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/).

|

||||

|

||||

|

||||

To see other examples on how to integrate with other projects for instance for question answering or for using it with chatbot-ui, see: [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/).

|

||||

|

||||

|

||||

### Advanced configuration

|

||||

@@ -252,6 +336,73 @@ Specifying a `config-file` via CLI allows to declare models in a single file as

|

||||

|

||||

See also [chatbot-ui](https://github.com/go-skynet/LocalAI/tree/master/examples/chatbot-ui) as an example on how to use config files.

|

||||

|

||||

### Full config model file reference

|

||||

|

||||

```yaml
name: gpt-3.5-turbo

# Default model parameters
parameters:
  # Relative to the models path
  model: ggml-gpt4all-j
  # temperature
  temperature: 0.3
  # all the OpenAI request options here
  top_k:
  top_p:
  max_tokens:
  batch:
  f16: true
  ignore_eos: true
  n_keep: 10
  seed:
  mode:
  step:

# Default context size
context_size: 512
# Default number of threads
threads: 10
# Define a backend (optional). By default it will try to guess the backend the first time the model is interacted with.
backend: gptj # available: llama, stablelm, gpt2, gptj, rwkv
# stopwords (if supported by the backend)
stopwords:
- "HUMAN:"
- "### Response:"
# string to trim space to
trimspace: