* content: added support for cache of own writes Thi keeps track of which blobs (n and m) have been written by the local repository client, so that even if the storage listing is eventually consistent (as in S3), we get somewhat sane behavior. Note that this is still assumming read-after-create semantics, which S3 also guarantees, otherwise it's very hard to do anything useful. * compaction: support for compaction logs Instead of compaction immediately deleting source index blobs, we now write log entries (with `m` prefix) which are merged on reads and applied only if the blob list includes all inputs and outputs, in which case the inputs are discarded since they are known to have been superseded by the outputs. This addresses eventual consistency issues in stores such as S3, which don't guarantee list-after-put or list-after-delete. With such stores the repository is ultimately eventually consistent and there's not much that can be done about it, unless we use second strongly consistent storage (such as GCS) for the index only. * content: updated list cache to cache both `n` and `m` * repo: fixed cache clear on windows Clearing cache requires closing repository first, as Windows is holding the files locked. This requires ability to close the repository twice. * content: refactored index blob management into indexBlobManager * testing: fixed blobtesting.Map storage to allow overwrites * blob: added debug output String() to blob.Metadata * testing: added indexBlobManager stress test This works by using N parallel "actors", each repeatedly performing operations on indexBlobManagers all sharing single eventually consistent storage. Each actor runs in a loop and randomly selects between: - *reading* all contents in indexes and verifying that it includes all contents written by the actor so far and that contents are correctly marked as deleted - *creating* new contents - *deleting* one of previously-created contents (by the same actor) - *compacting* all index files into one The test runs on accelerated time (every read of time moves it by 0.1 seconds) and simulates several hours of running. In case of a failure, the log should provide enough debugging information to trace the exact sequence of events leading up to the failure - each log line is prefixed with actorID and all storage access is logged. * makefile: increase test timeout * content: fixed index blob manager race The race is where if we delete compaction log too early, it may lead to previously deleted contents becoming temporarily live again to an outside observer. Added test case that reproduces the issue, verified that it fails without the fix and passed with one. * testing: improvements to TestIndexBlobManagerStress test - better logging to be able to trace the root cause in case of a failure - prevented concurrent compaction which is unsafe: The sequence: 1. A creates contentA1 in INDEX-1 2. B creates contentB1 in INDEX-2 3. A deletes contentA1 in INDEX-3 4. B does compaction, but is not seeing INDEX-3 (due to EC or simply because B started read before #3 completed), so it writes INDEX-4==merge(INDEX-1,INDEX-2) * INDEX-4 has contentA1 as active 5. A does compaction but it's not seeing INDEX-4 yet (due to EC or because read started before #4), so it drops contentA1, writes INDEX-5=merge(INDEX-1,INDEX-2,INDEX-3) * INDEX-5 does not have contentA1 7. C sees INDEX-5 and INDEX-5 and merge(INDEX-4,INDEX-5) contains contentA1 which is wrong, because A has been deleted (and there's no record of it anywhere in the system) * content: when building pack index ensure index bytes are different each time by adding 32 random bytes

Kopia

n.

Kopia is a simple, cross-platform tool for managing encrypted backups in the cloud. It provides fast, incremental backups, secure, client-side end-to-end encryption, compression and data deduplication.

Unlike other cloud backup solutions, the user is in full control of the backup storage and responsible for purchasing one of the cloud storage products (such as Google Cloud Storage), which offer great durability and availability for the data.

Kopia in action

Using kopia command line tool:

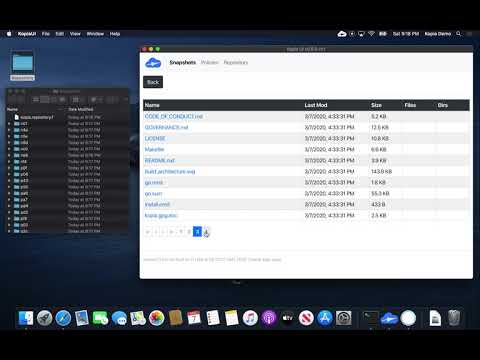

Kopia UI - experimental user interface

Getting Started

See Documentation for more information.

Building Kopia

See Build Infrastructure for more information on building Kopia and working with the source code.

Licensing

Kopia is licensed under the Apache License, Version 2.0. See LICENSE for the full license text.

Contribution Guidelines

Kopia is open source and contributions are welcome. For more information on how to contribute see the Contribution Guidelines.

Reporting Security Issues

If you find a security issue you'd like to disclose privately, please contact kopia-pmc@googlegroups.com or via direct message to maintainers on Slack.

Disclaimer

Kopia is a personal project and is not affiliated with, supported or endorsed by Google.

Cryptography Notice

This distribution includes cryptographic software. The country in which you currently reside may have restrictions on the import, possession, use, and/or re-export to another country, of encryption software. BEFORE using any encryption software, please check your country's laws, regulations and policies concerning the import, possession, or use, and re-export of encryption software, to see if this is permitted. See http://www.wassenaar.org/ for more information.

The U.S. Government Department of Commerce, Bureau of Industry and Security (BIS), has classified this software as Export Commodity Control Number (ECCN) 5D002.C.1, which includes information security software using or performing cryptographic functions with symmetric algorithms. The form and manner of this distribution makes it eligible for export under the License Exception ENC Technology Software Unrestricted (TSU) exception (see the BIS Export Administration Regulations, Section 740.13) for both object code and source code.